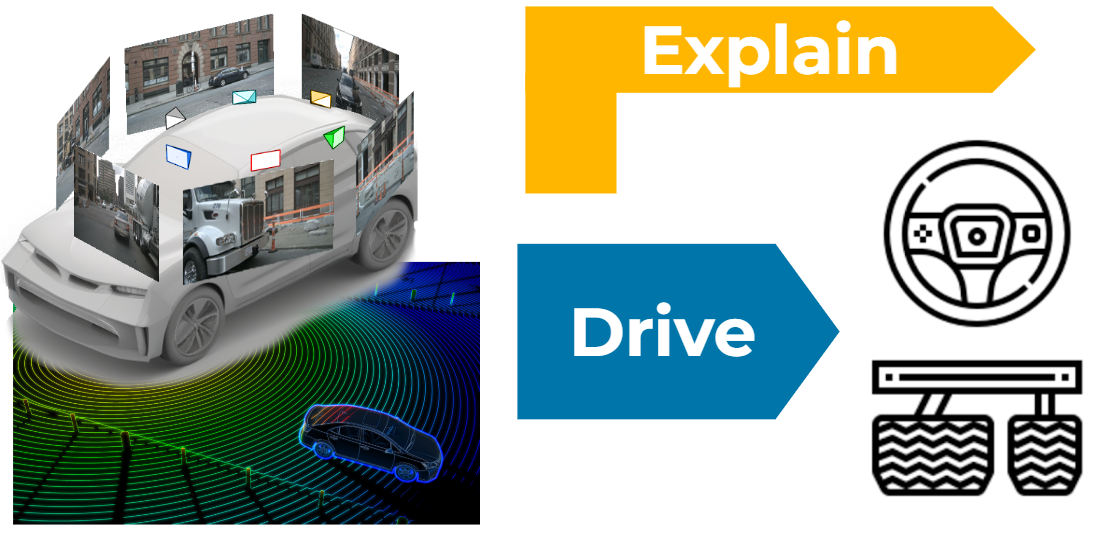

Multi-sensor scene understanding


Multi-sensor perception

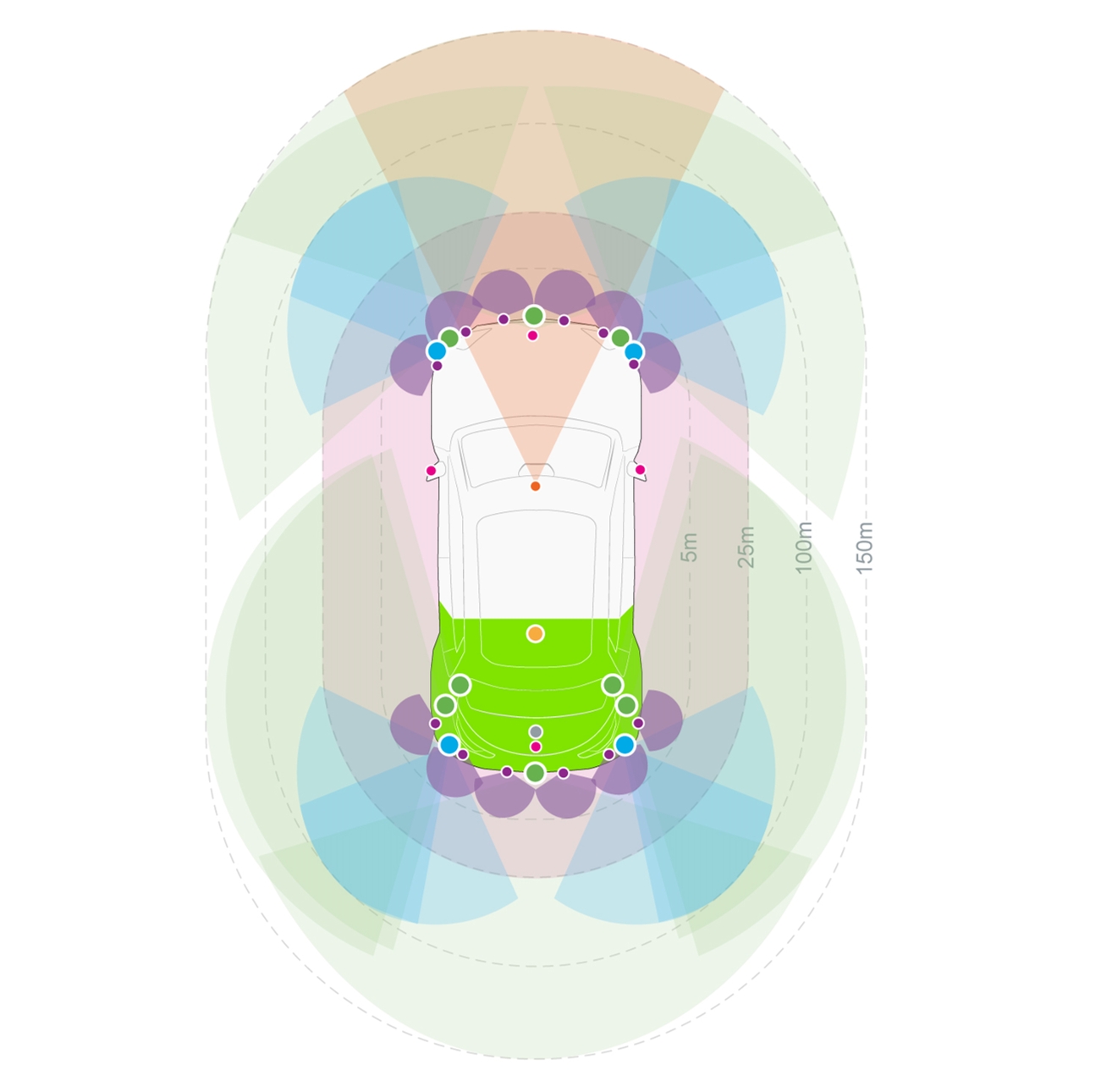

Automated driving relies first and foremost on a diverse range of sensors, such as fish-eye and pinhole camera rigs, LiDARs, microphones, radars, and ultrasonic sensors. Making the best use of each sensor's output at every instant is fundamental to understanding the vehicle's complex environment and to gaining robustness.

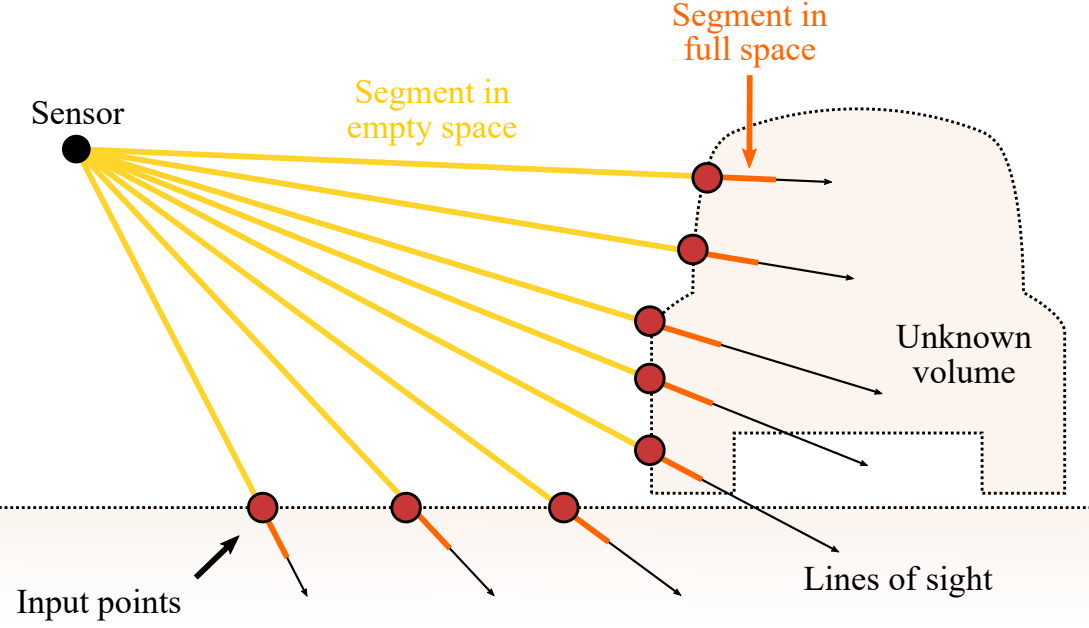

3D perception

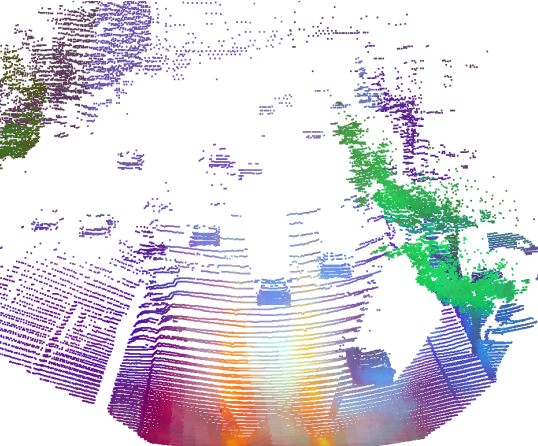

LiDAR scanners and other sensors deliver information about the 3D world around the vehicle. Interpreting this information in terms of drivable space and relevant objects in 3D is required to develop robust driving systems that plan and act safely.

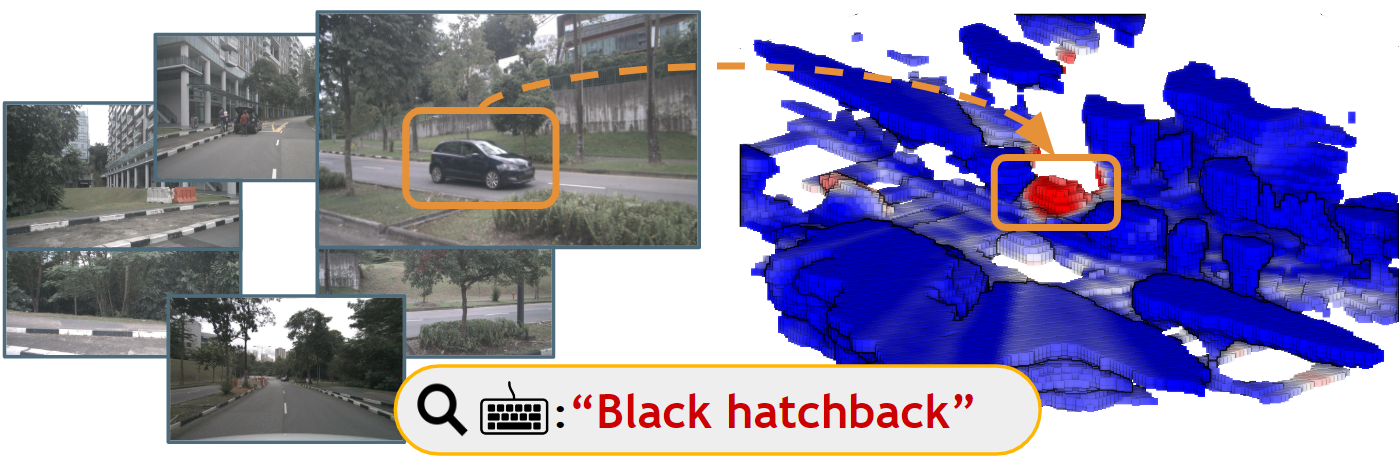

Foundation models

Foundation models break free from predefined, restricted ontologies, enabling them to perceive a wider range of elements in their surroundings. By pretraining these models without supervision, we can adapt them to a diverse set of tasks.

Motion forecasting

Accurately predicting the future motion of road users and objects is crucial for safe and efficient autonomous driving.

Pose estimation

Precise determination of an object's position, orientation, and dimensions in 3D space is vital for autonomous driving.

Data and annotation efficient learning


Self-supervised and unsupervised learning

Collecting and annotating diverse data is complex, costly, and time-consuming. We explore alternatives to fully supervised learning, such as unsupervised, self-supervised, and active learning, which let AI models generate labels automatically or focus annotation effort where it matters most, reducing reliance on manually labeled datasets.

Zero-shot and open-world learning

Our research in open-world perception focuses on developing models capable of recognizing and adapting to novel objects and scenarios, ensuring safe and reliable performance in the ever-changing real world.

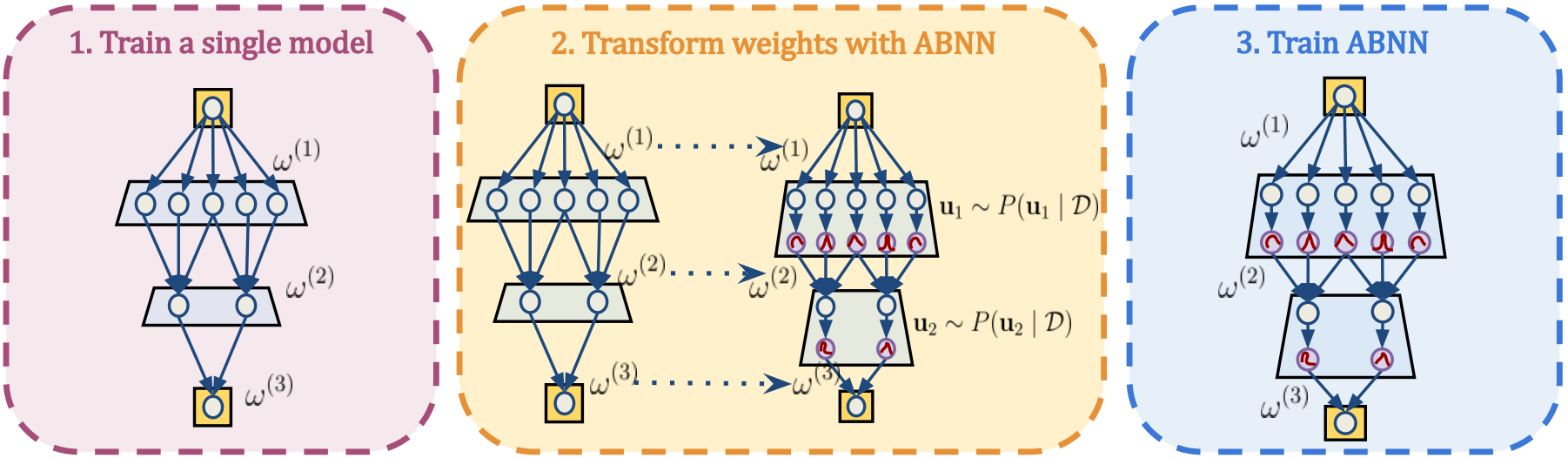

Core Deep Learning

As deep learning is now a key component of automated driving (AD) systems, it is important to better understand its inner workings, in particular the link between the specifics of the training optimization and the key properties (performance, regularity, robustness, generalization) of the trained models.

Dependable models


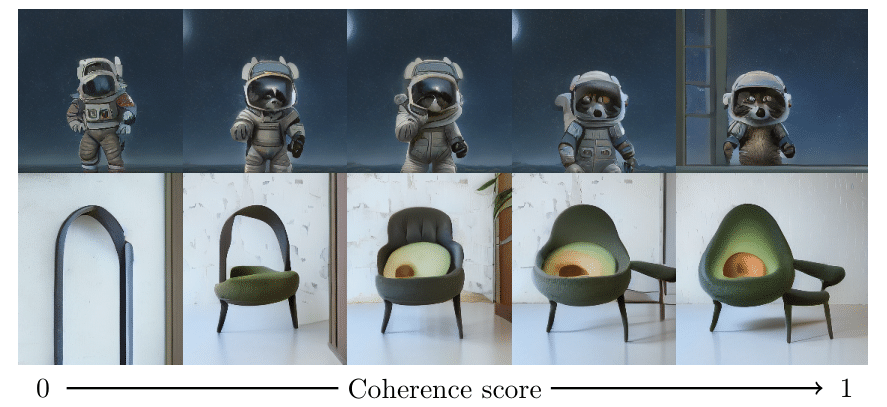

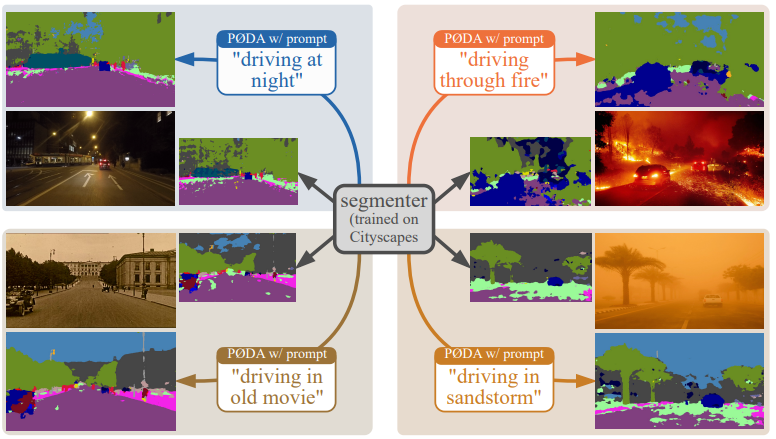

Model and domain generalization

Autonomous vehicles must perform well in diverse and unseen environments. Our research in generalization and domain adaptation focuses on developing models that can effectively transfer their knowledge from familiar to unfamiliar environments, ensuring reliable performance in various real-world conditions.

Robustness and uncertainty

Autonomous vehicles must handle uncertainty and operate reliably under challenging conditions. Our research in uncertainty and robustness focuses on developing models that can accurately estimate their own uncertainty and remain resilient to noisy or adversarial inputs.

Explainability of Deep Models

Explainability has several facets, and the need for it is especially strong in safety-critical applications such as autonomous driving. We investigate methods that provide post-hoc explanations for black-box systems, as well as approaches for directly designing more interpretable models.