Explainability of Deep Models

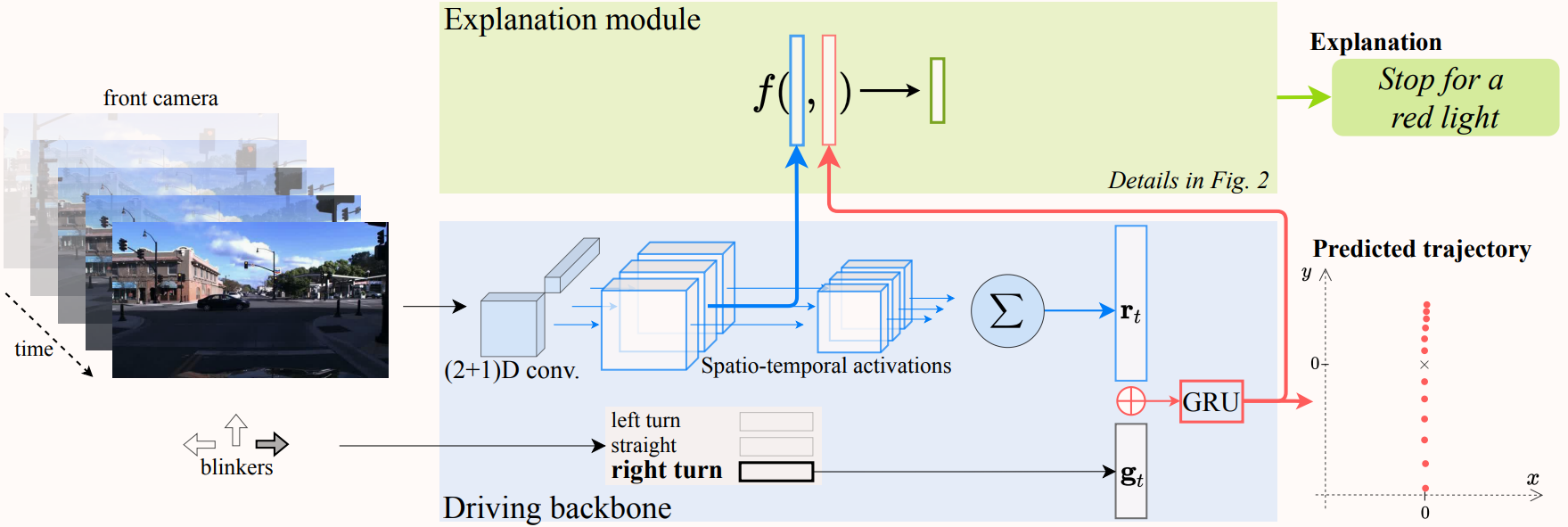

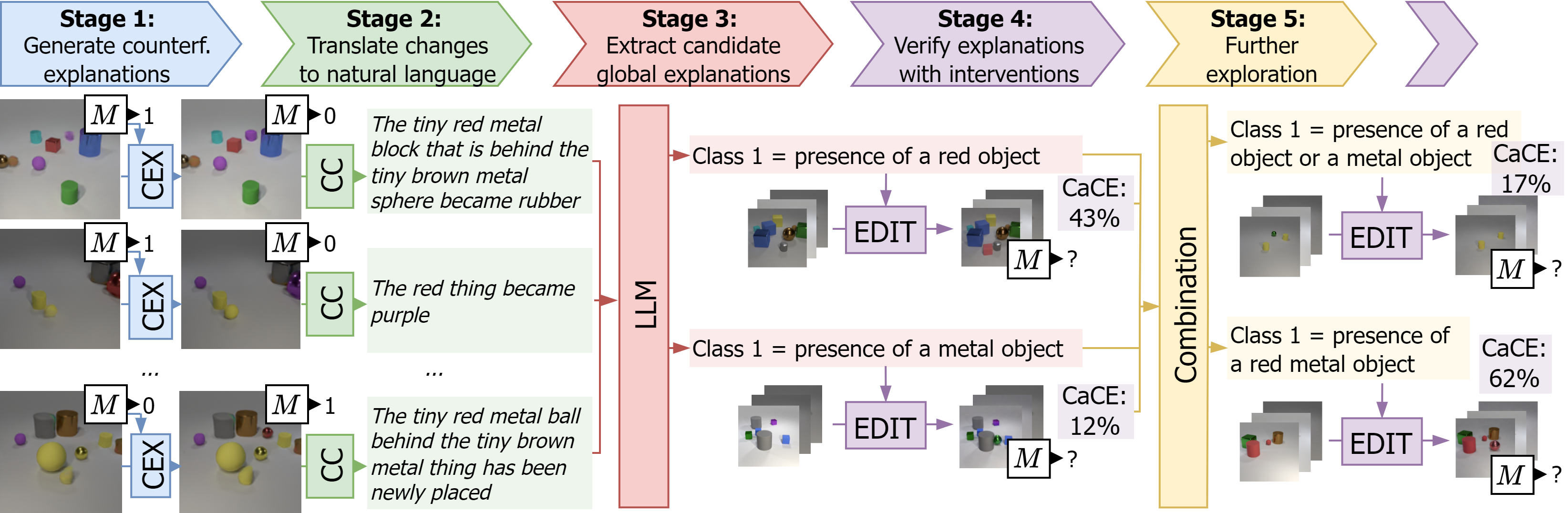

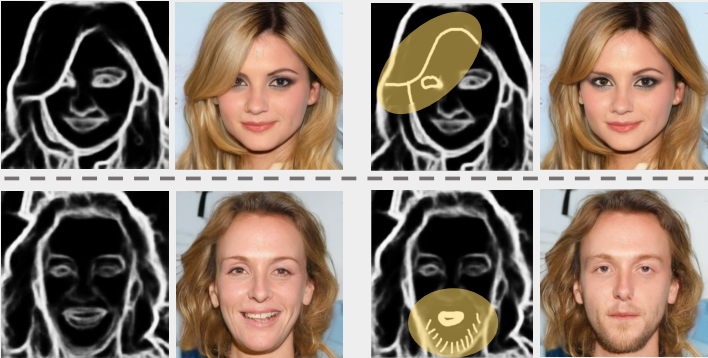

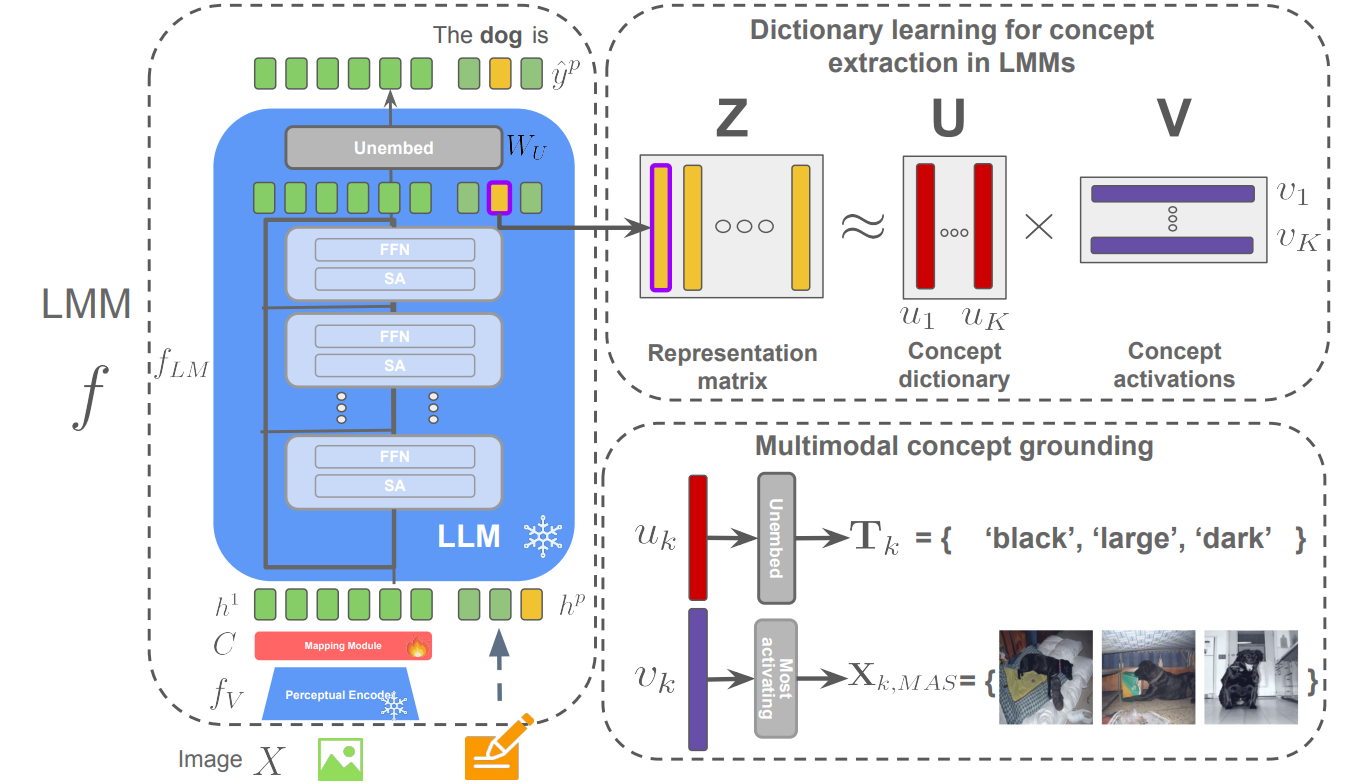

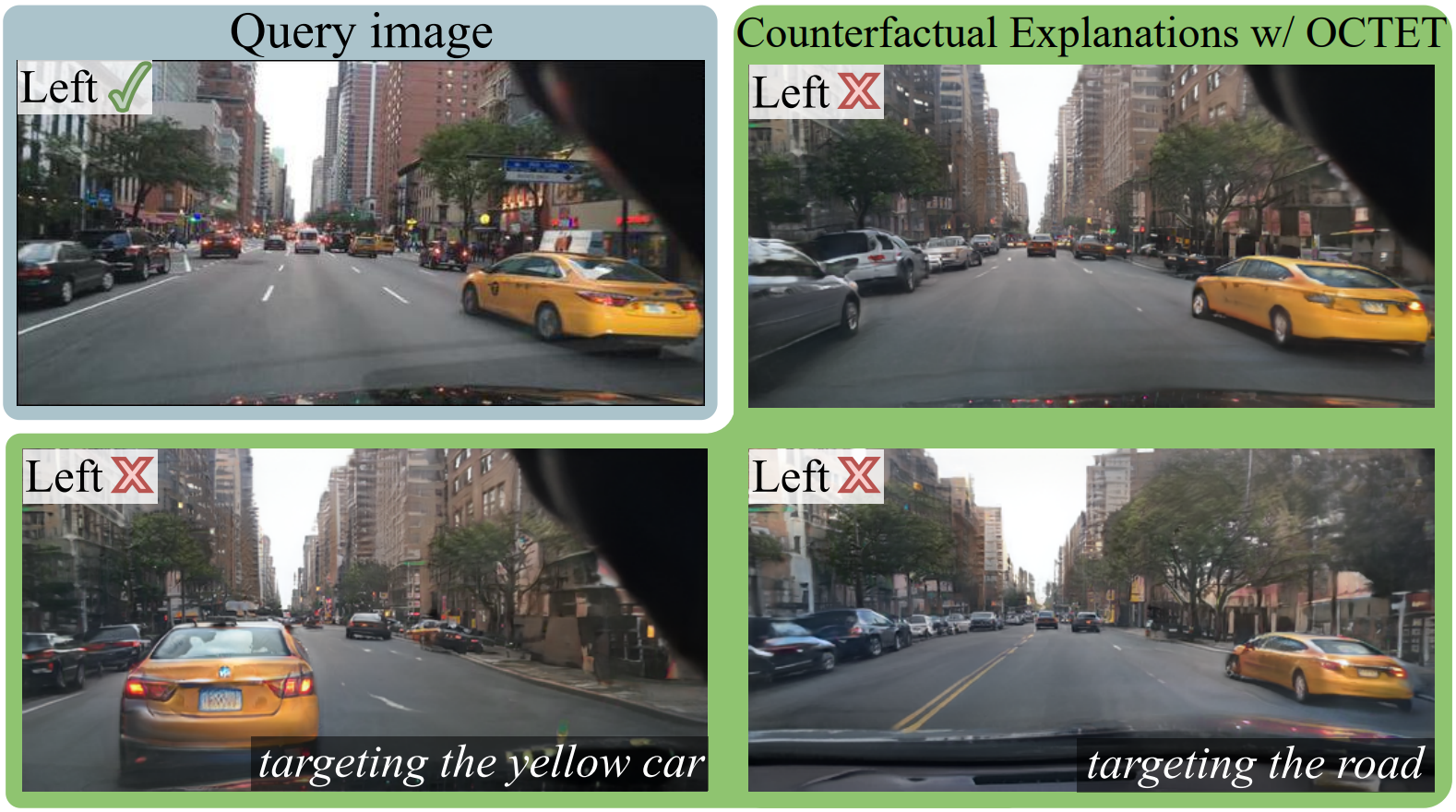

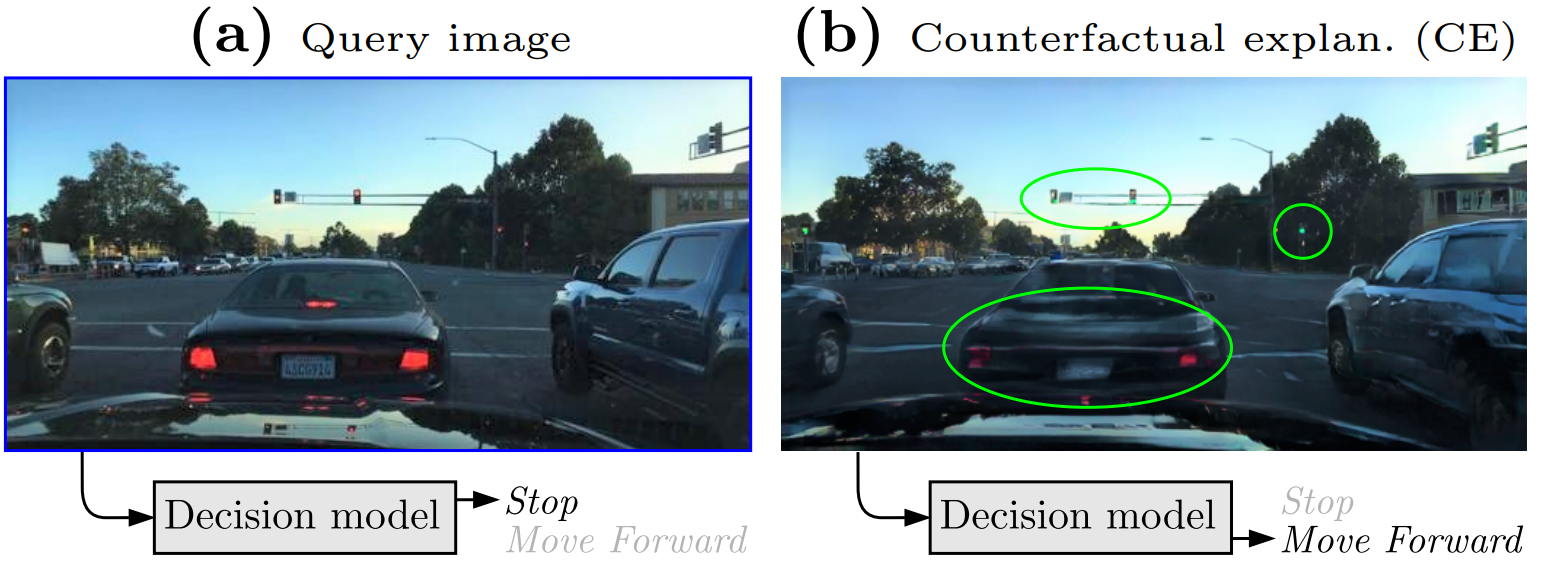

Explainability has several facets, and the need for it is particularly strong in safety-critical applications such as autonomous driving. We investigate both methods that provide post-hoc explanations for black-box systems and approaches for directly designing more interpretable models.
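To make "post-hoc explanation of a black-box system" concrete, here is a minimal sketch of occlusion (perturbation-based) attribution. This generic, model-agnostic technique was chosen only for illustration and is not presented as one of our methods. The model is queried only through its outputs. Each input feature is replaced by a baseline value, and the resulting drop in the output is recorded as that feature's importance. The function `occlusion_attribution`, the baseline of `0.0`, and the linear `toy_model` standing in for a trained network are all hypothetical.

```python
from typing import Callable, List, Sequence


def occlusion_attribution(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: float = 0.0,
) -> List[float]:
    """Score each input feature by how much the model output drops
    when that single feature is replaced by `baseline`.

    The model is treated as a black box: only its outputs are used.
    """
    reference = model(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline  # occlude feature i only
        scores.append(reference - model(perturbed))
    return scores


# Hypothetical "black box": a fixed linear scorer standing in for a trained network.
def toy_model(v: Sequence[float]) -> float:
    weights = [2.0, -1.0, 0.5]
    return sum(w * vi for w, vi in zip(weights, v))


print(occlusion_attribution(toy_model, [1.0, 3.0, 4.0]))  # [2.0, -3.0, 2.0]
```

For a linear model with a zero baseline, each score reduces to weight times input, which makes the output easy to check by hand. For deep networks the same loop is typically applied to patches of an image rather than to single scalars. The result then depends on the chosen baseline and patch size.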

Selected publications

- TMLR, 2026 (Featured Certification)
