Frugal learning

Collecting sufficiently diverse data and annotating it precisely is complex, costly and time-consuming. To dramatically reduce these needs, we explore various alternatives to fully-supervised learning, e.g., training that is unsupervised (as in rOSD at ECCV’20), self-supervised (as in BoWNet at CVPR’20), semi-supervised, active, zero-shot (as in ZS3 at NeurIPS’19) or few-shot. We also investigate training with fully-synthetic data (in combination with unsupervised domain adaptation) and with GAN-augmented data (as in Semantic Palette at CVPR’21).

Publications

Unsupervised Object Localization in the Era of Self-Supervised ViTs: A Survey

Oriane Siméoni, Éloi Zablocki, Spyros Gidaris, Gilles Puy, Patrick Pérez

International Journal of Computer Vision, 2024

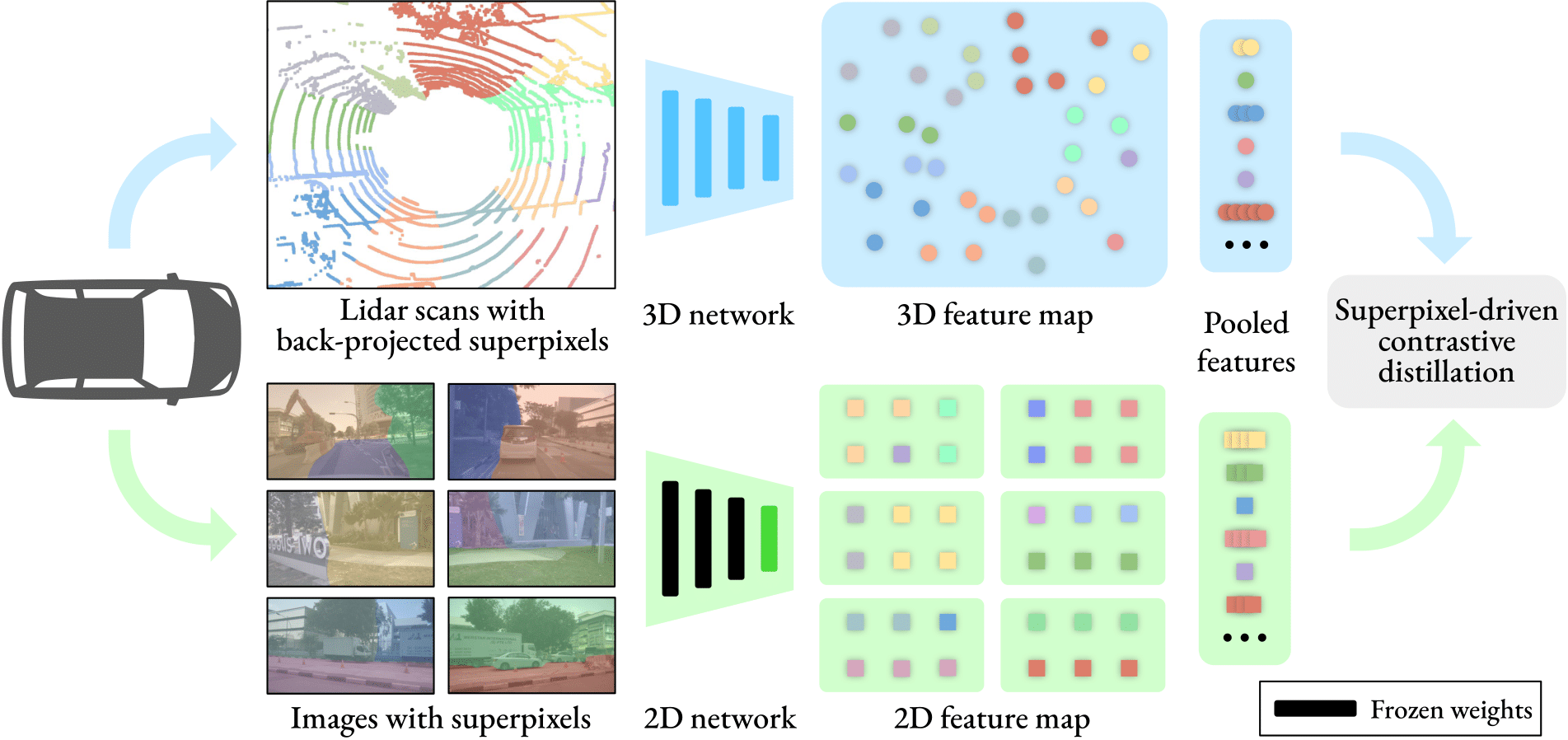

Three Pillars improving Vision Foundation Model Distillation for Lidar

Gilles Puy, Spyros Gidaris, Alexandre Boulch, Oriane Siméoni, Corentin Sautier, Patrick Pérez, Andrei Bursuc, Renaud Marlet

Computer Vision and Pattern Recognition (CVPR), 2024

SPOT: Self-Training with Patch-Order Permutation for Object-Centric Learning with Autoregressive Transformers

Ioannis Kakogeorgiou, Spyros Gidaris, Konstantinos Karantzalos, and Nikos Komodakis

Computer Vision and Pattern Recognition (CVPR), 2024

A Simple Recipe for Language-guided Domain Generalized Segmentation

Mohammad Fahes, Tuan-Hung Vu, Andrei Bursuc, Patrick Pérez, Raoul de Charette

Computer Vision and Pattern Recognition (CVPR), 2024

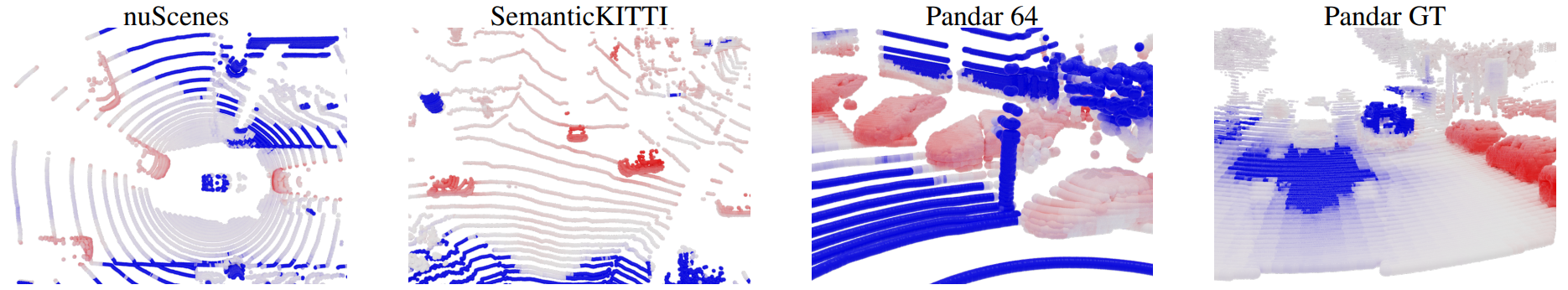

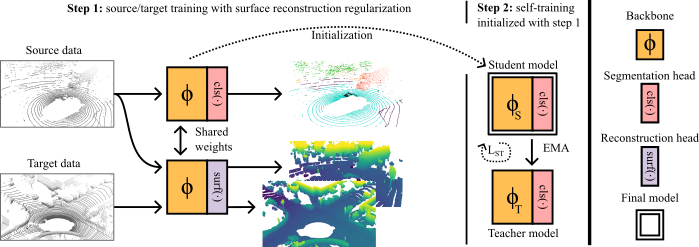

SALUDA: Surface-based Automotive Lidar Unsupervised Domain Adaptation

Björn Michele, Alexandre Boulch, Gilles Puy, Tuan-Hung Vu, Renaud Marlet and Nicolas Courty

International Conference on 3D Vision (3DV), 2024

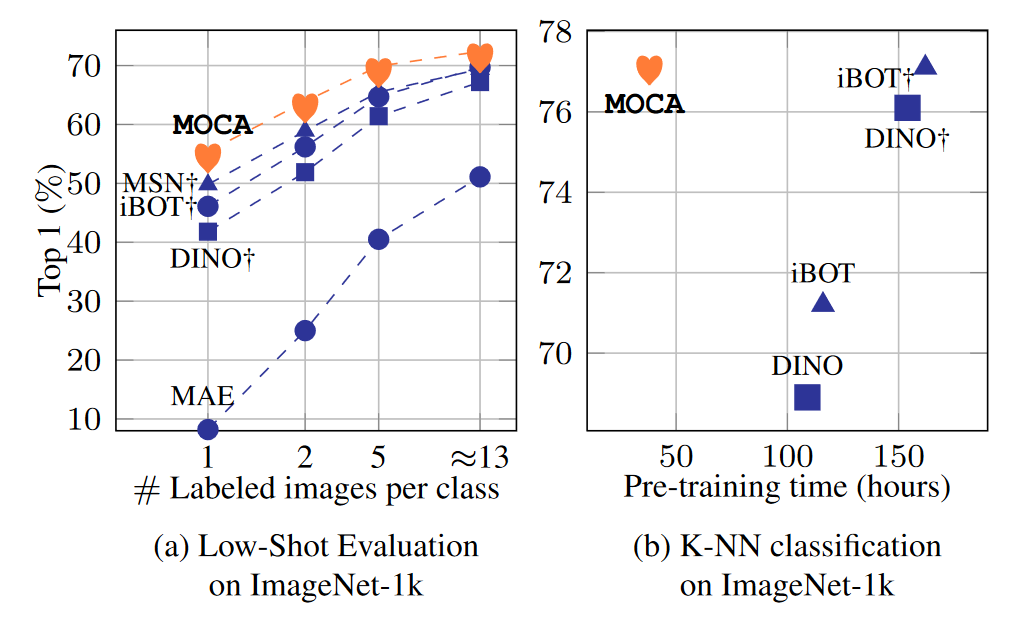

MOCA: Self-supervised Representation Learning by Predicting Masked Online Codebook Assignments

Spyros Gidaris, Andrei Bursuc, Oriane Siméoni, Nikos Komodakis, Matthieu Cord, Patrick Pérez

Transactions on Machine Learning Research (TMLR), 2024

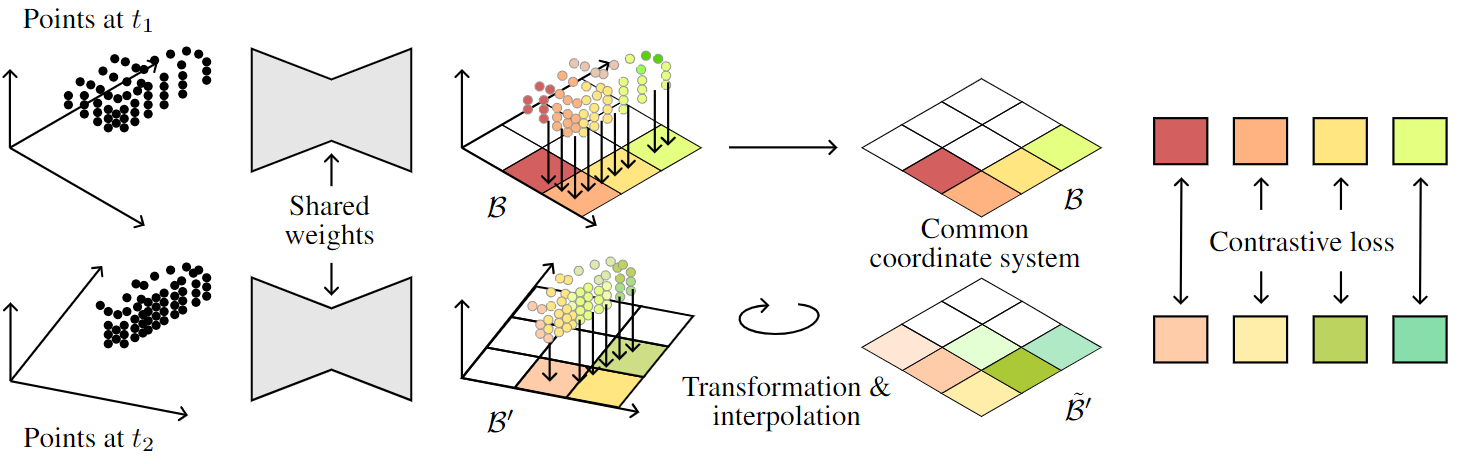

BEVContrast: Self-Supervision in BEV Space for Automotive Lidar Point Clouds

Corentin Sautier, Gilles Puy, Alexandre Boulch, Renaud Marlet, Vincent Lepetit

International Conference on 3D Vision (3DV), 2024

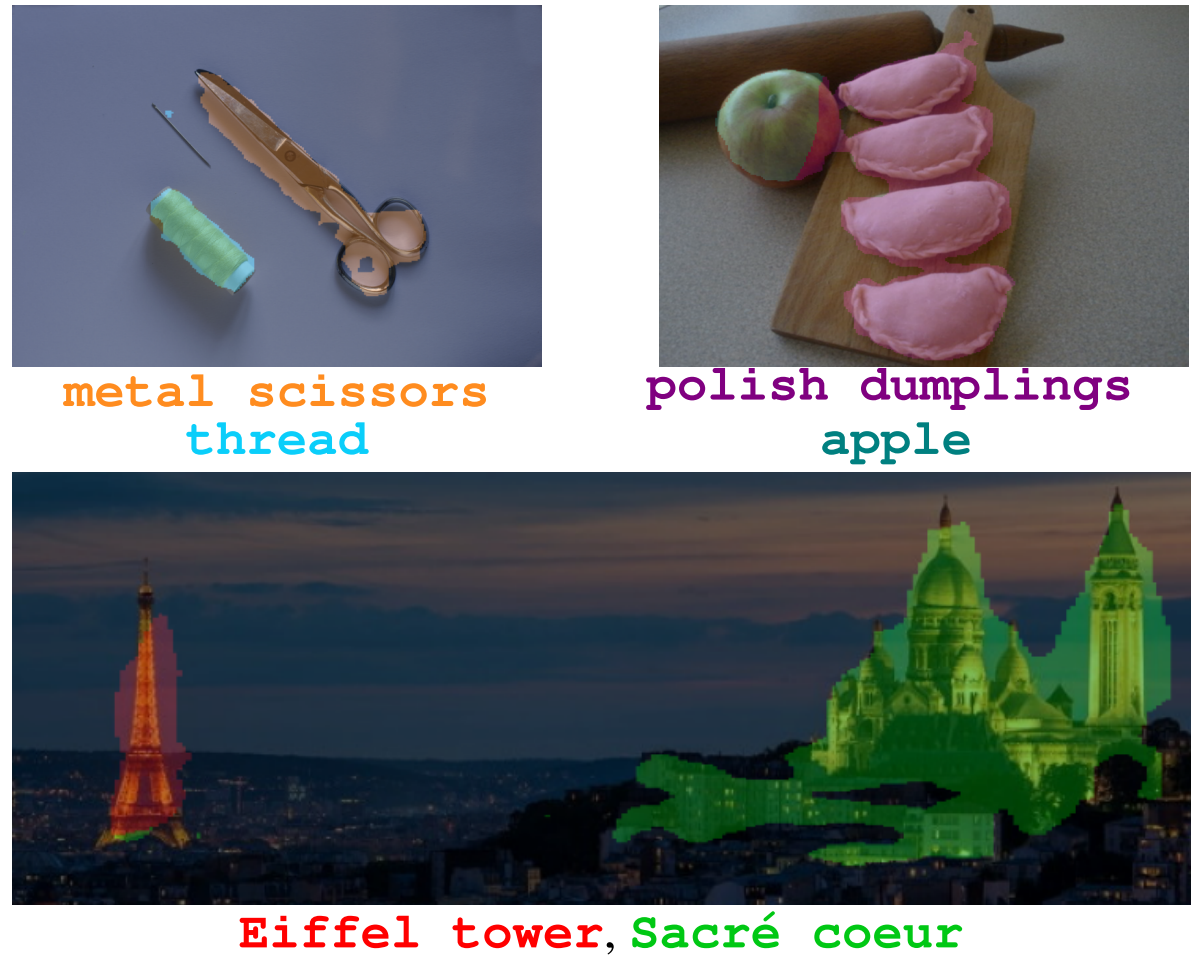

CLIP-DIY: CLIP Dense Inference Yields Open-Vocabulary Semantic Segmentation For-Free

Monika Wysoczańska, Michaël Ramamonjisoa, Tomasz Trzciński, Oriane Siméoni

Winter Conference on Applications of Computer Vision (WACV), 2024

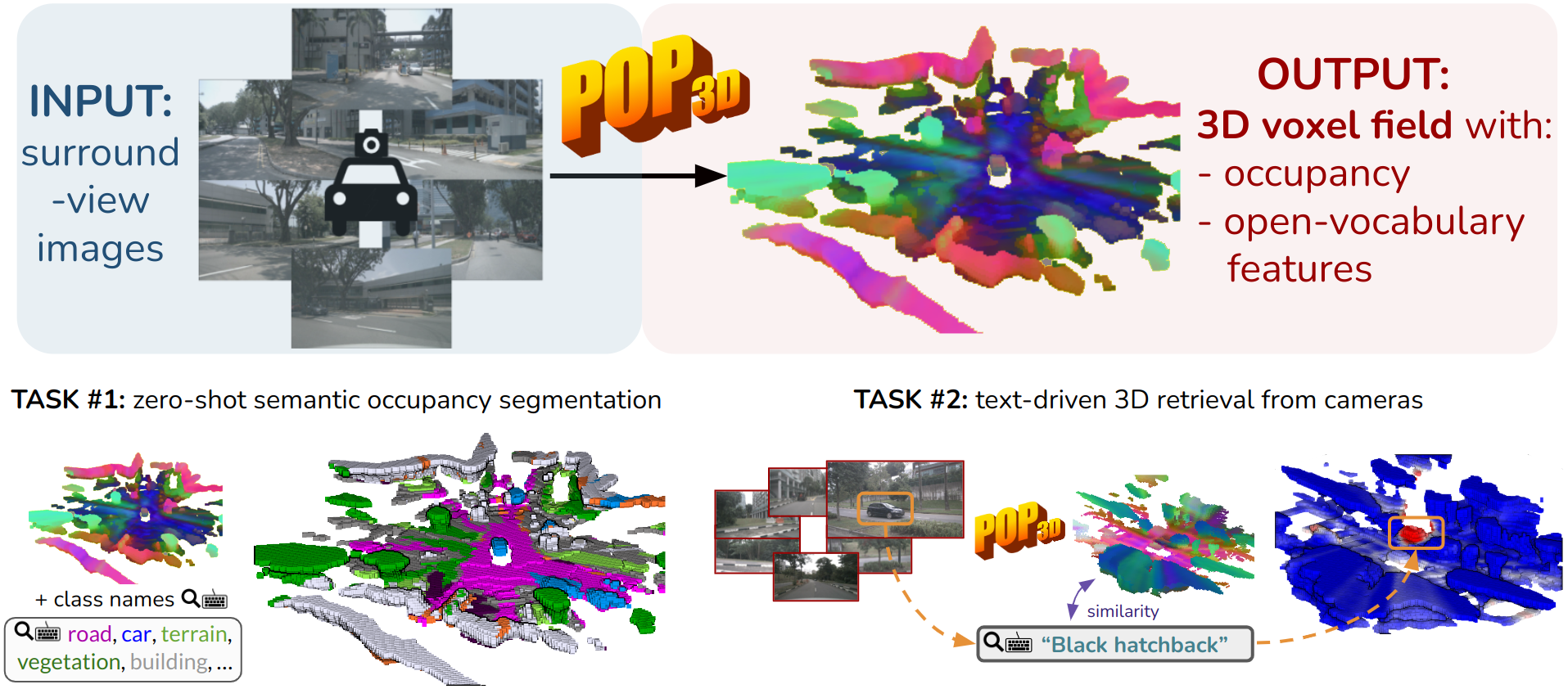

POP-3D: Open-Vocabulary 3D Occupancy Prediction from Images

Antonin Vobecky, Oriane Siméoni, David Hurych, Spyros Gidaris, Andrei Bursuc, Patrick Pérez, Josef Sivic

Advances in Neural Information Processing Systems (NeurIPS), 2023

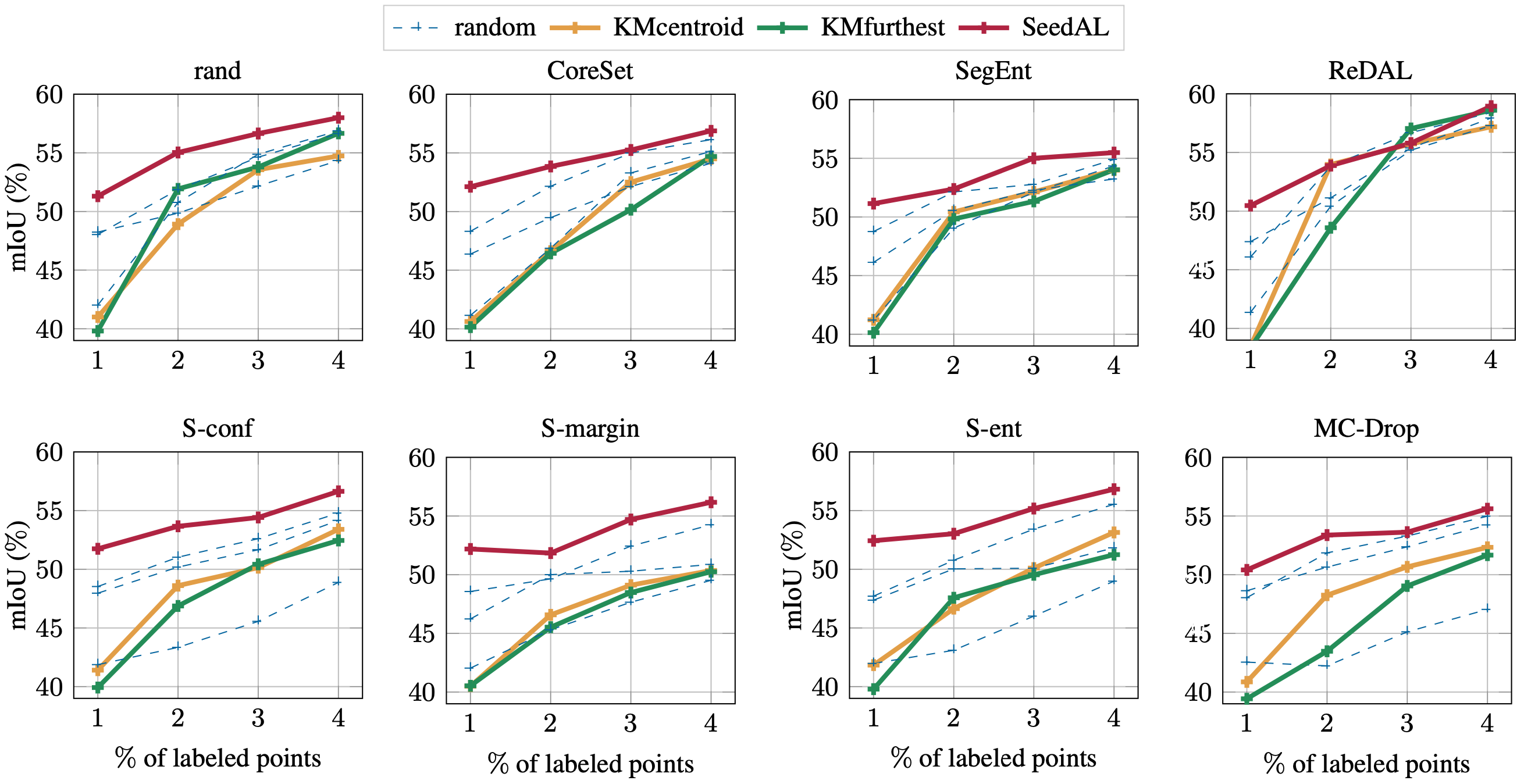

You Never Get a Second Chance To Make a Good First Impression: Seeding Active Learning for 3D Semantic Segmentation

Nermin Samet, Oriane Siméoni, Gilles Puy, Georgy Ponimatkin, Renaud Marlet, and Vincent Lepetit

International Conference on Computer Vision (ICCV), 2023

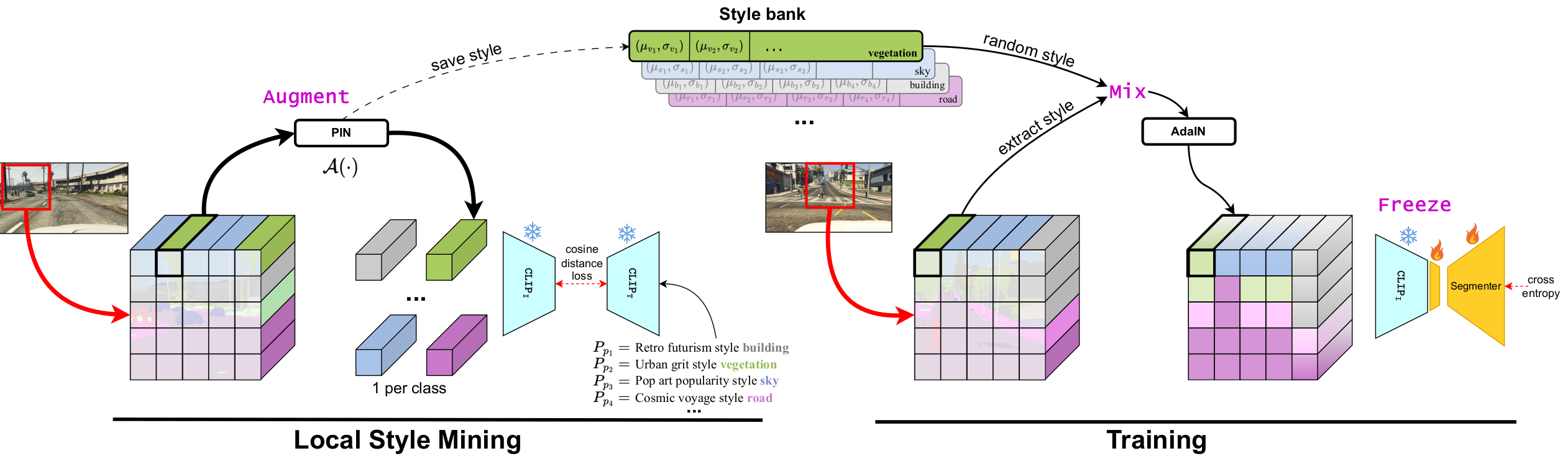

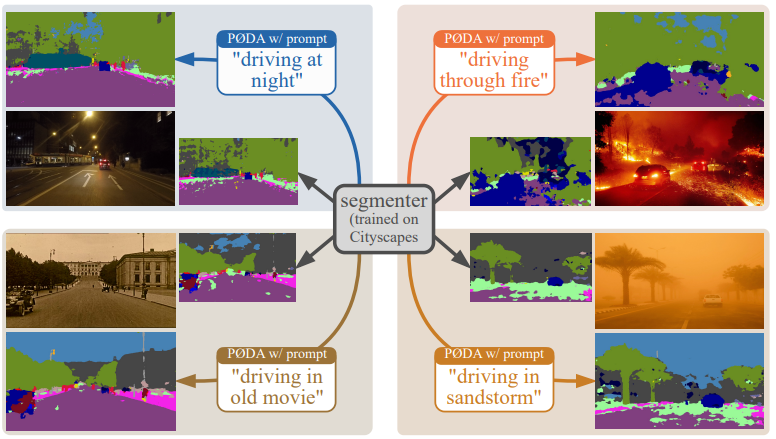

PØDA: Prompt-driven Zero-shot Domain Adaptation

Mohammad Fahes, Tuan-Hung Vu, Andrei Bursuc, Patrick Pérez, Raoul de Charette

International Conference on Computer Vision (ICCV), 2023

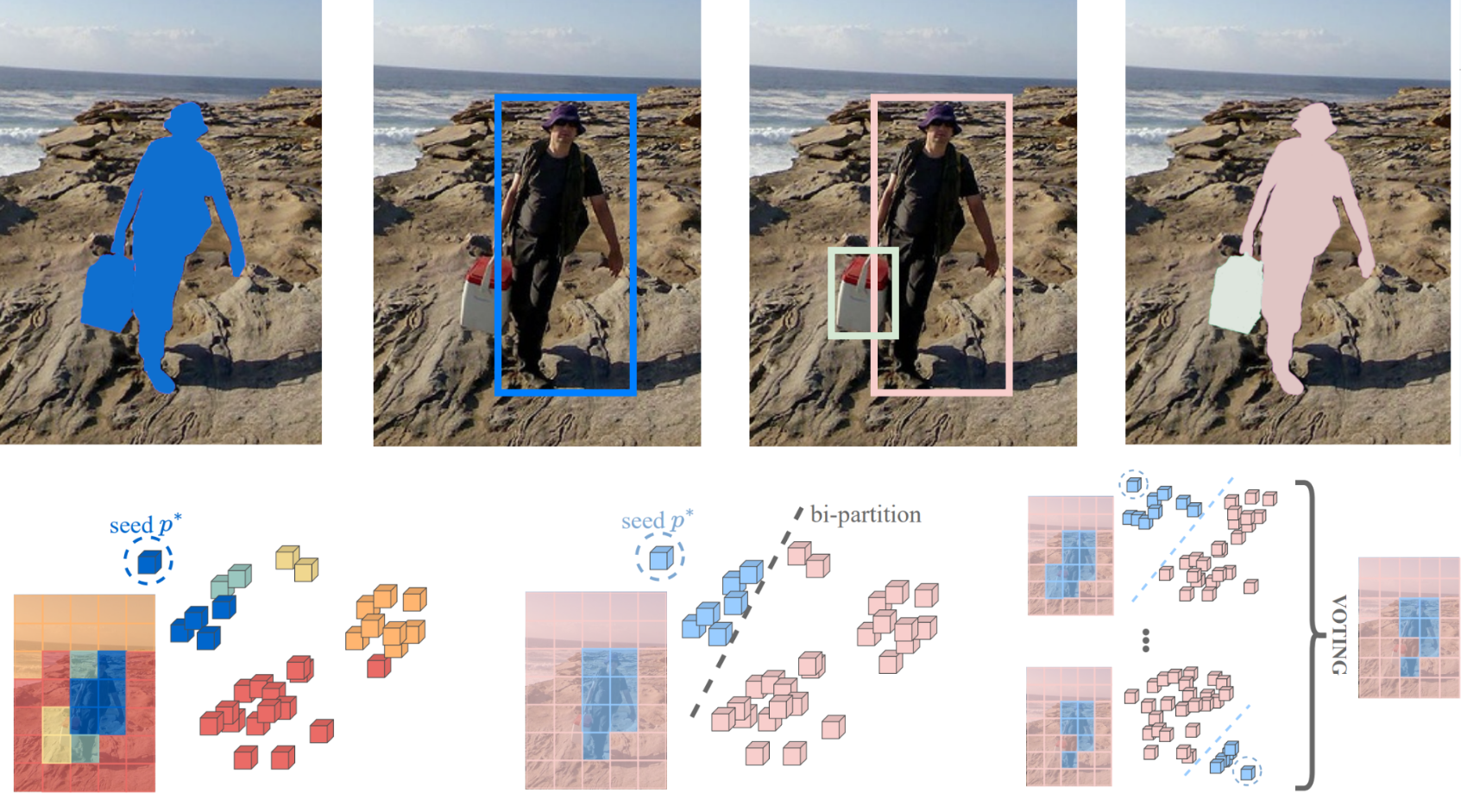

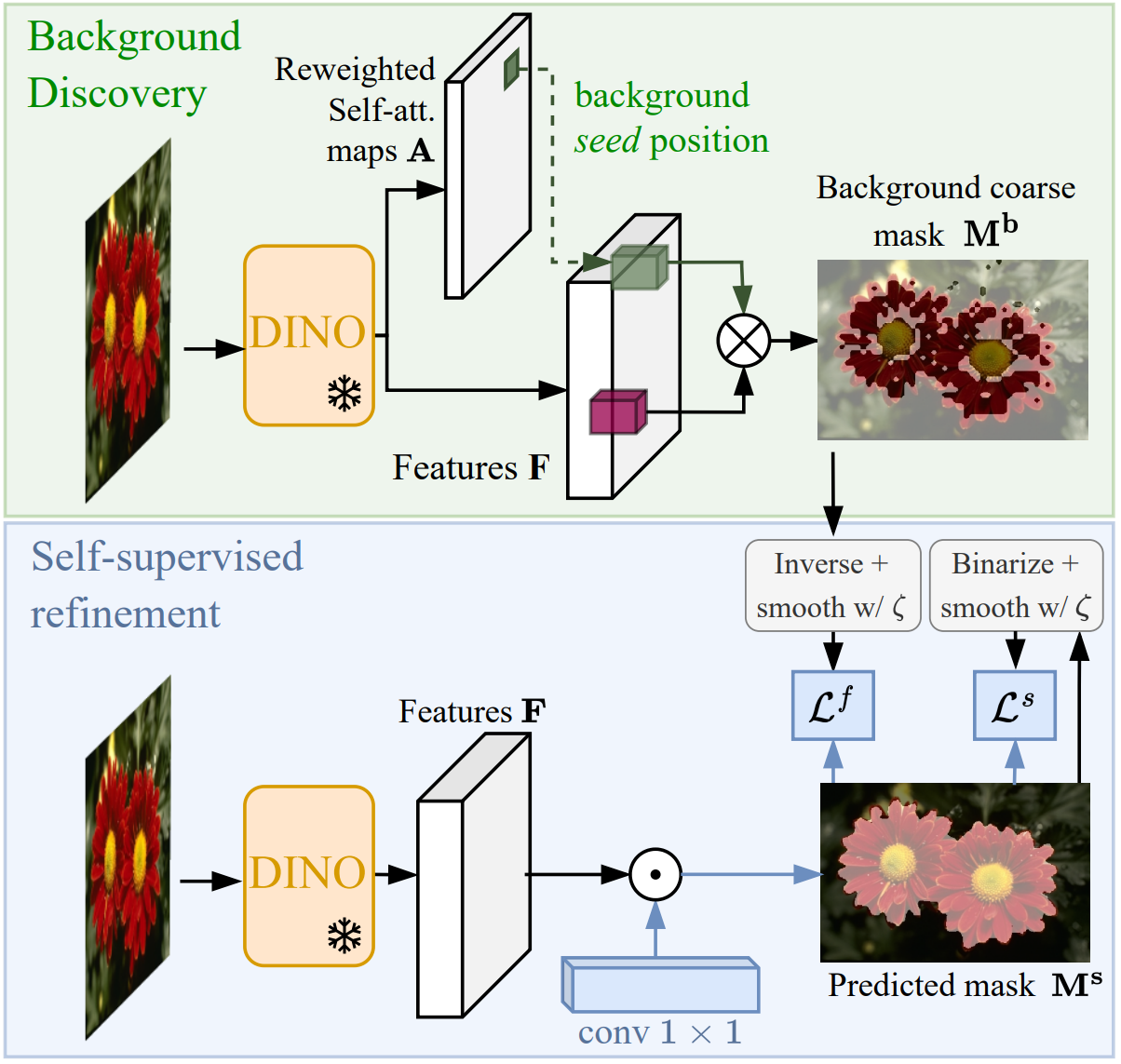

Unsupervised Object Localization: Observing the Background to Discover Objects

Oriane Siméoni, Chloé Sekkat, Gilles Puy, Antonin Vobecky, Éloi Zablocki, Patrick Pérez

Computer Vision and Pattern Recognition (CVPR), 2023

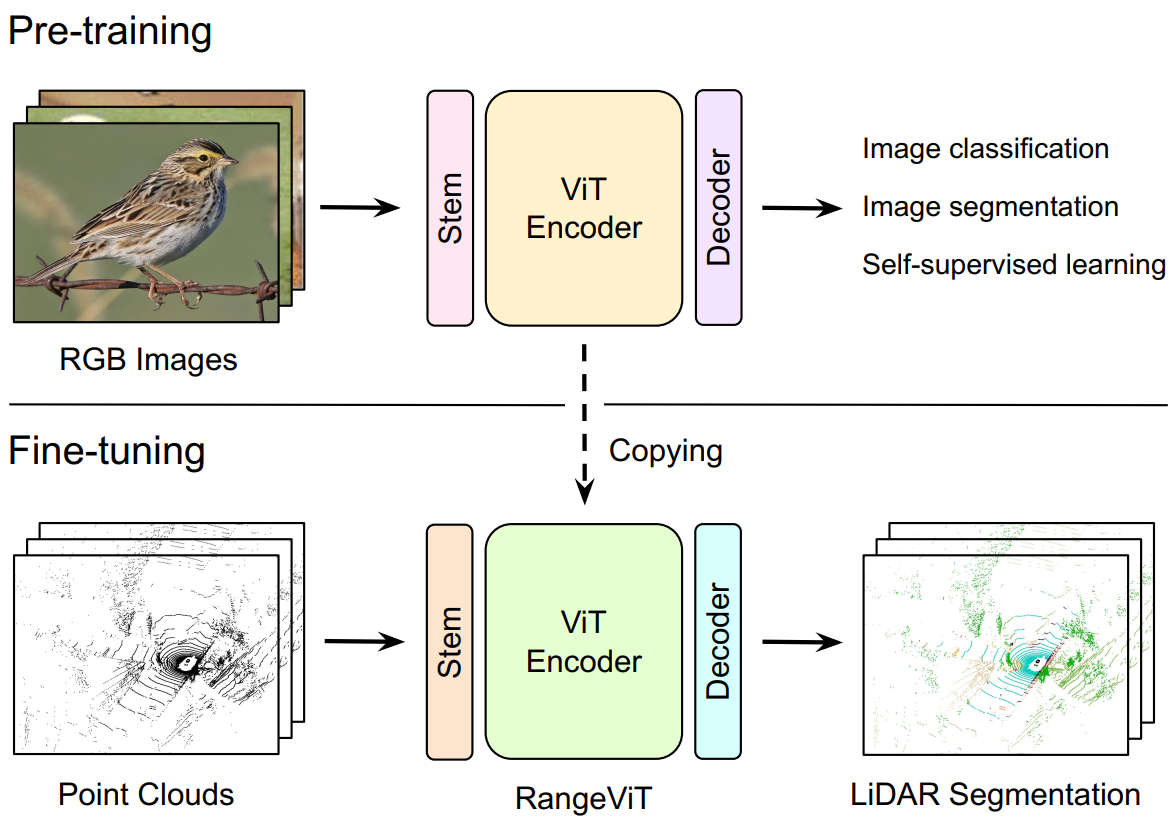

RangeViT: Towards Vision Transformers for 3D Semantic Segmentation in Autonomous Driving

Angelika Ando, Spyros Gidaris, Andrei Bursuc, Gilles Puy, Alexandre Boulch, and Renaud Marlet

Computer Vision and Pattern Recognition (CVPR), 2023

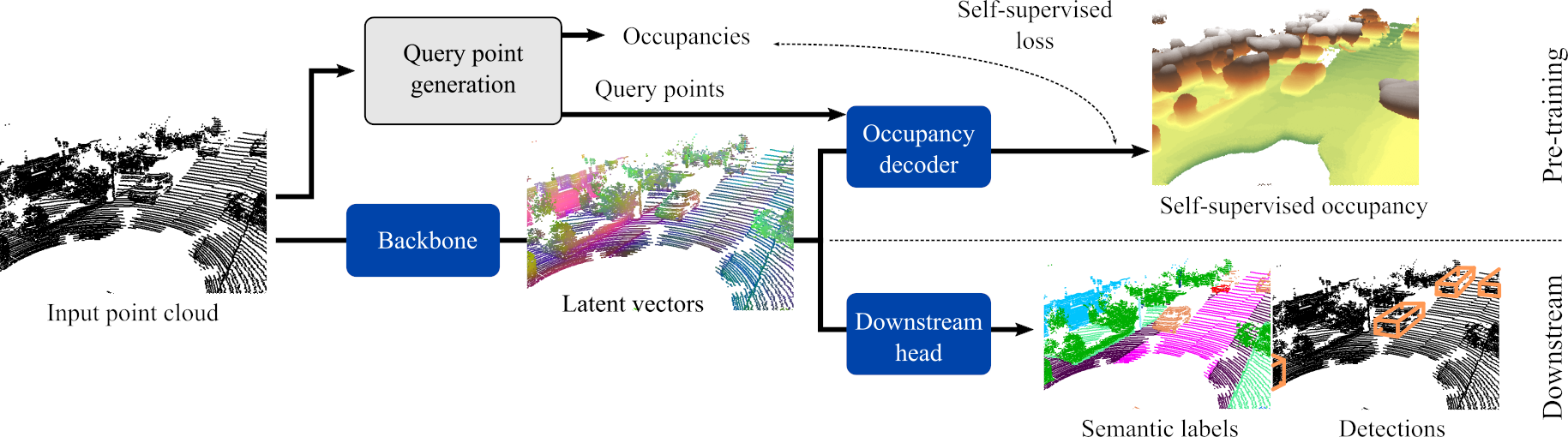

ALSO: Automotive Lidar Self-supervision by Occupancy estimation

Alexandre Boulch, Corentin Sautier, Björn Michele, Gilles Puy, Renaud Marlet

Computer Vision and Pattern Recognition (CVPR), 2023

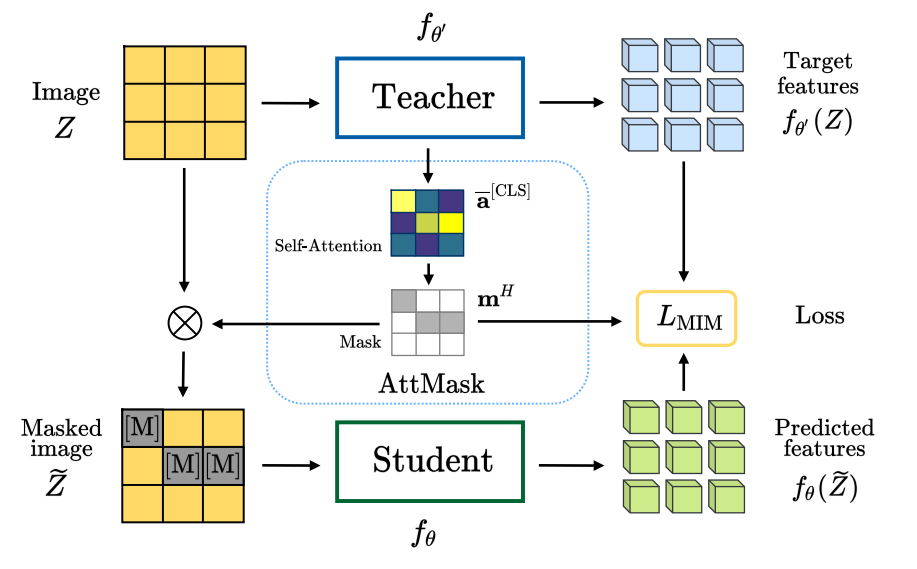

What to Hide from Your Students: Attention-Guided Masked Image Modeling

Ioannis Kakogeorgiou, Spyros Gidaris, Bill Psomas, Yannis Avrithis, Andrei Bursuc, Konstantinos Karantzalos, and Nikos Komodakis

European Conference on Computer Vision (ECCV), 2022

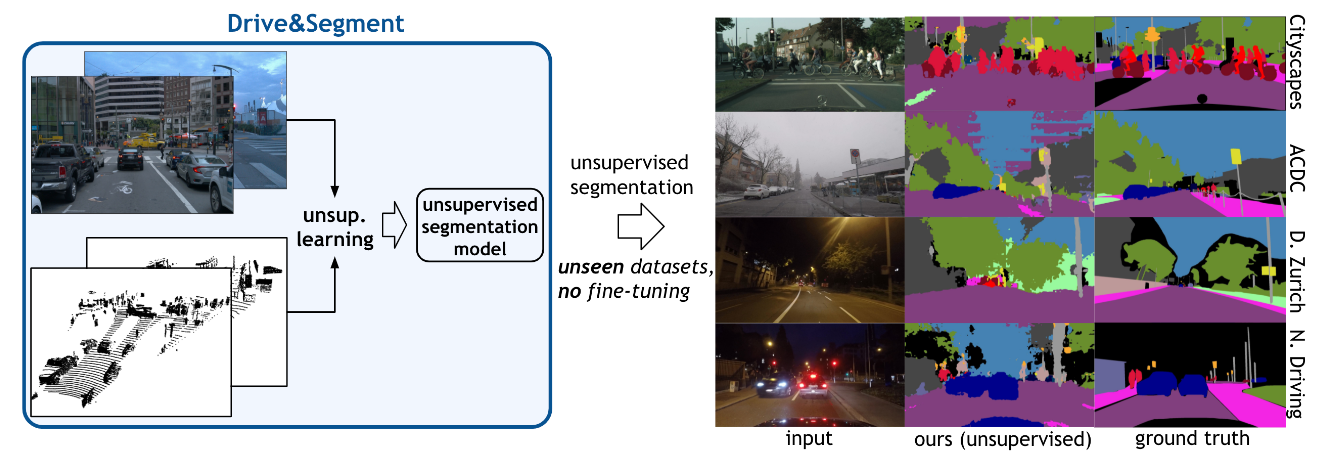

Drive&Segment: Unsupervised Semantic Segmentation of Urban Scenes via Cross-modal Distillation

Antonin Vobecky, Oriane Siméoni, David Hurych, Spyros Gidaris, Andrei Bursuc, Patrick Pérez, Josef Sivic

European Conference on Computer Vision (ECCV), 2022

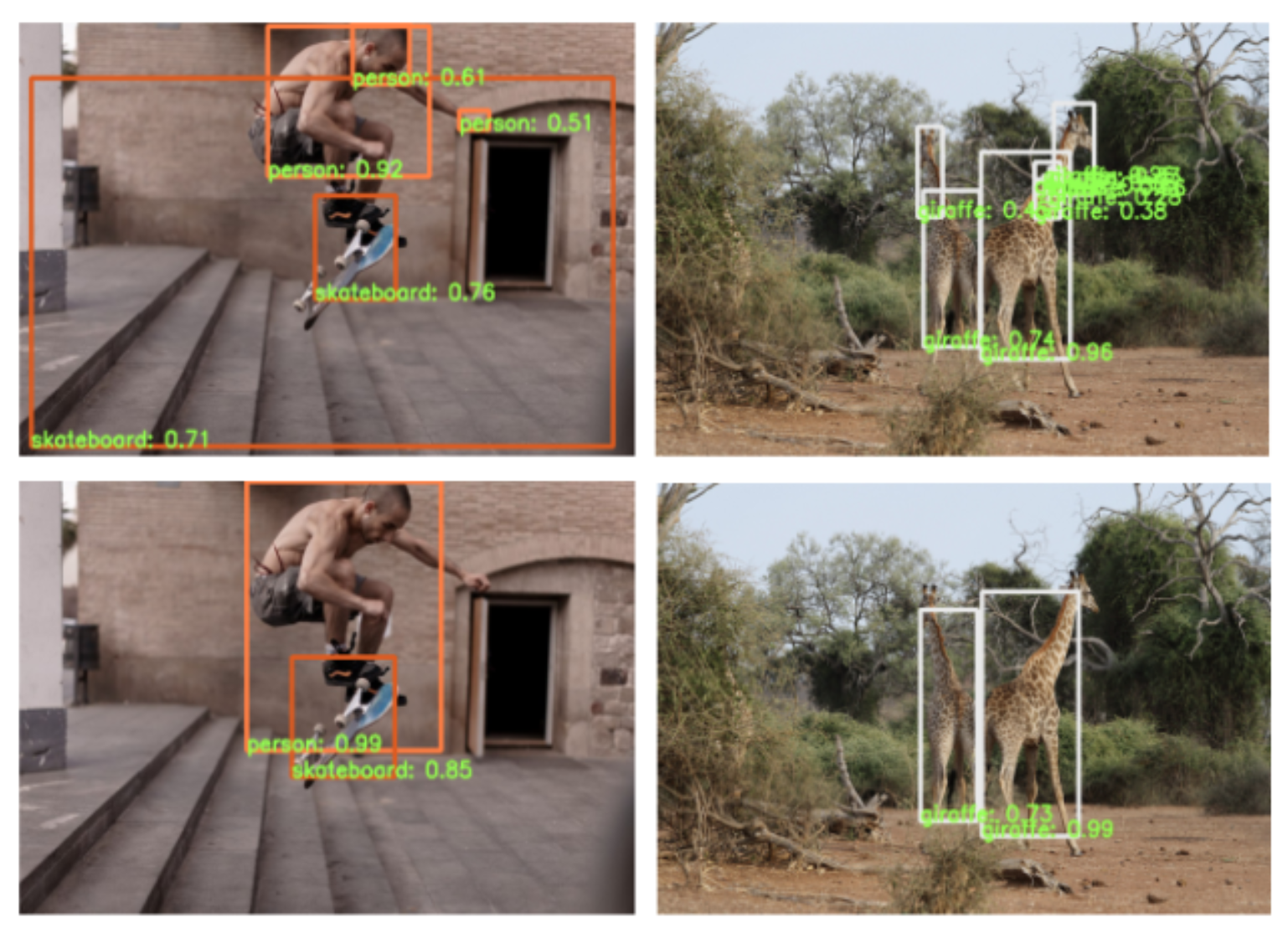

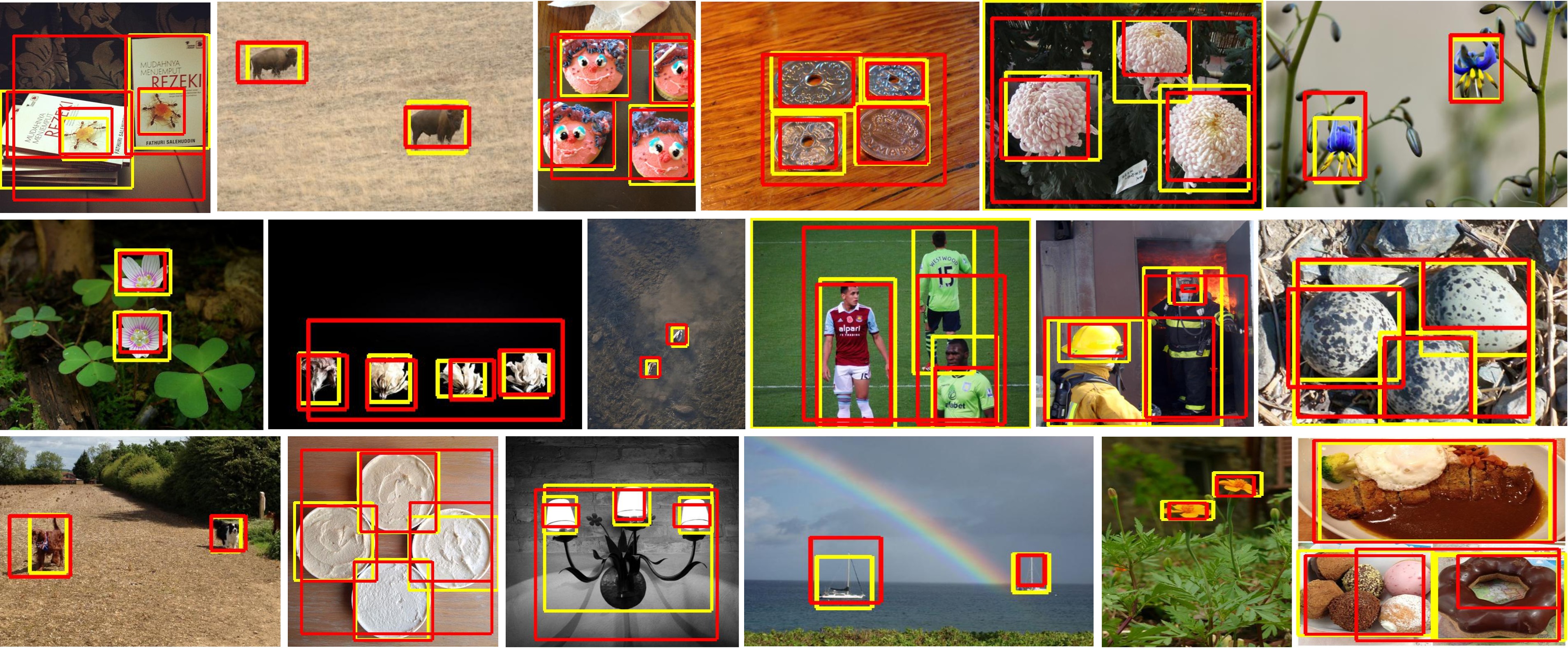

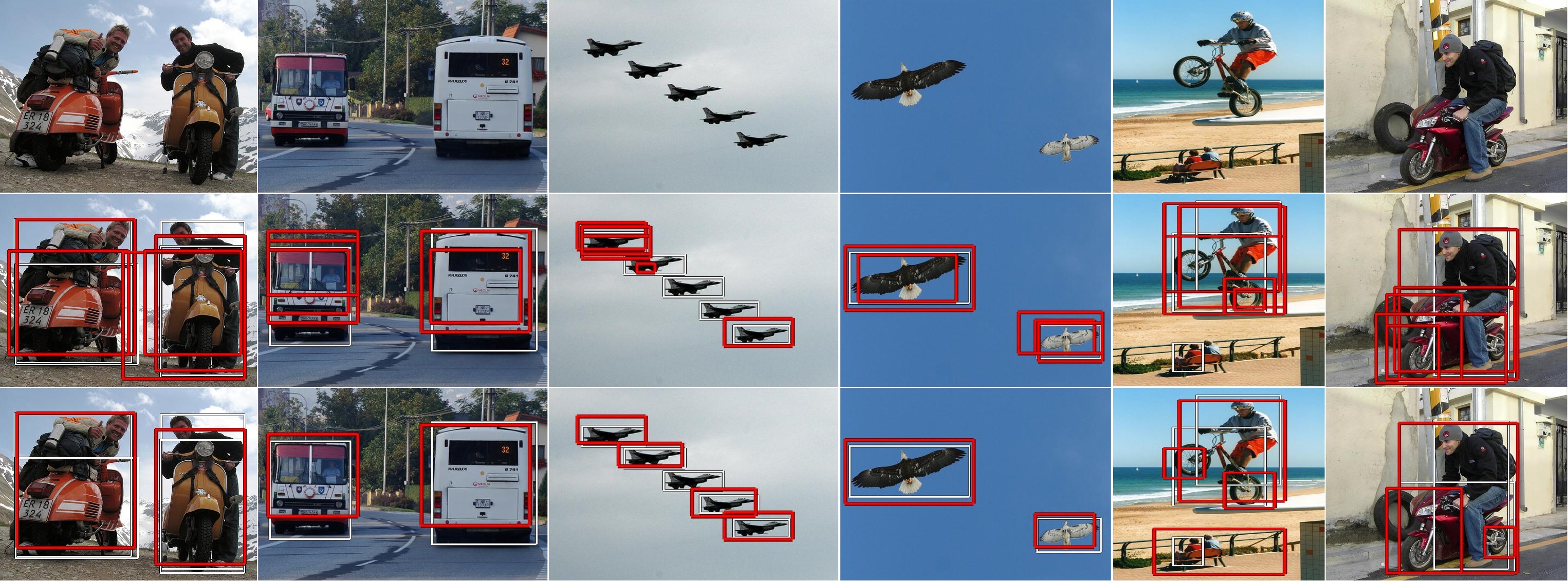

Active Learning Strategies for Weakly-Supervised Object Detection

Huy V. Vo, Oriane Siméoni, Spyros Gidaris, Andrei Bursuc, Patrick Pérez, and Jean Ponce

European Conference on Computer Vision (ECCV), 2022

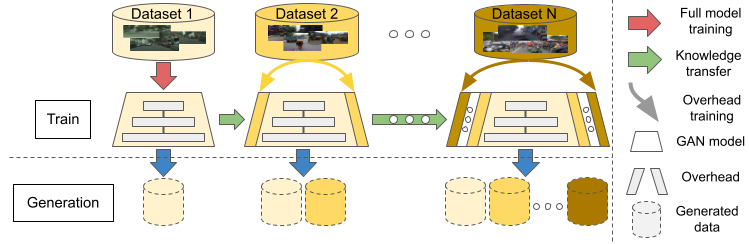

CSG0: Continual Urban Scene Generation with Zero Forgetting

Himalaya Jain (*), Tuan-Hung Vu (*), Patrick Pérez and Matthieu Cord (* equal contrib.)

Computer Vision and Pattern Recognition (CVPR) Workshop, 2022

Image-to-Lidar Self-Supervised Distillation for Autonomous Driving Data

Corentin Sautier, Gilles Puy, Spyros Gidaris, Alexandre Boulch, Andrei Bursuc, and Renaud Marlet

Computer Vision and Pattern Recognition (CVPR), 2022

Localizing Objects with Self-Supervised Transformers and no Labels

Oriane Siméoni, Gilles Puy, Huy V. Vo, Simon Roburin, Spyros Gidaris, Andrei Bursuc, Patrick Pérez, Renaud Marlet, Jean Ponce

British Machine Vision Conference (BMVC), 2021

Large-Scale Unsupervised Object Discovery

Huy V. Vo, Elena Sizikova, Cordelia Schmid, Patrick Pérez and Jean Ponce

Advances in Neural Information Processing Systems (NeurIPS), 2021

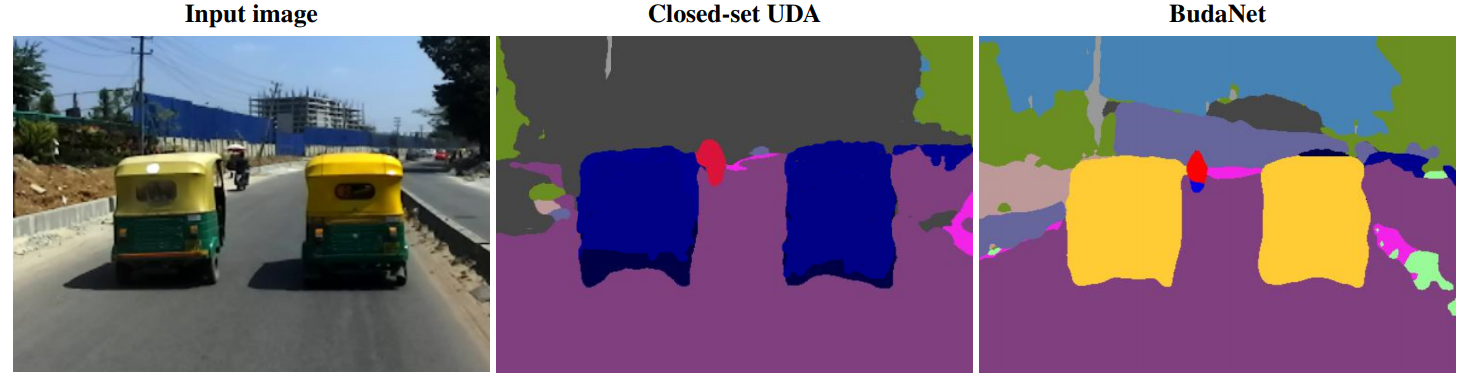

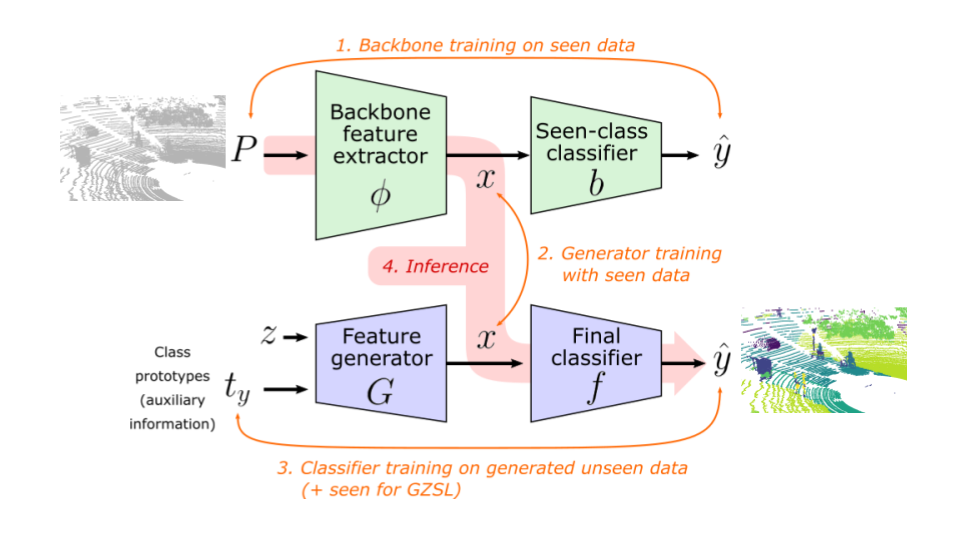

Handling new target classes in semantic segmentation with domain adaptation

Maxime Bucher, Tuan-Hung Vu, Matthieu Cord, and Patrick Pérez

Computer Vision and Image Understanding (CVIU), 2021

Generative Zero-Shot Learning for Semantic Segmentation of 3D Point Clouds

Björn Michele, Alexandre Boulch, Gilles Puy, Maxime Bucher, and Renaud Marlet

International Conference on 3D Vision (3DV), 2021

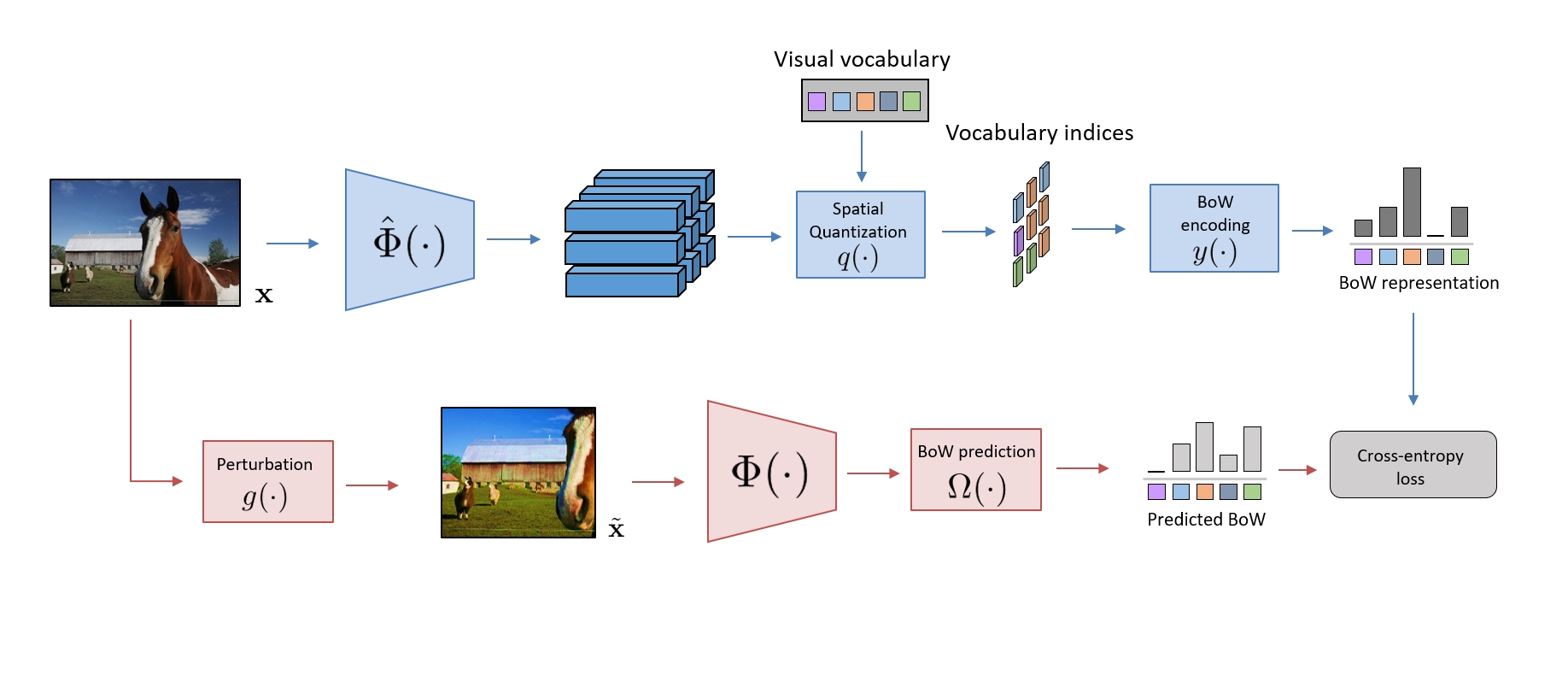

Online Bag-of-Visual-Words Generation for Unsupervised Representation Learning

Spyros Gidaris, Andrei Bursuc, Gilles Puy, Nikos Komodakis, Patrick Pérez, and Matthieu Cord

Computer Vision and Pattern Recognition (CVPR), 2021

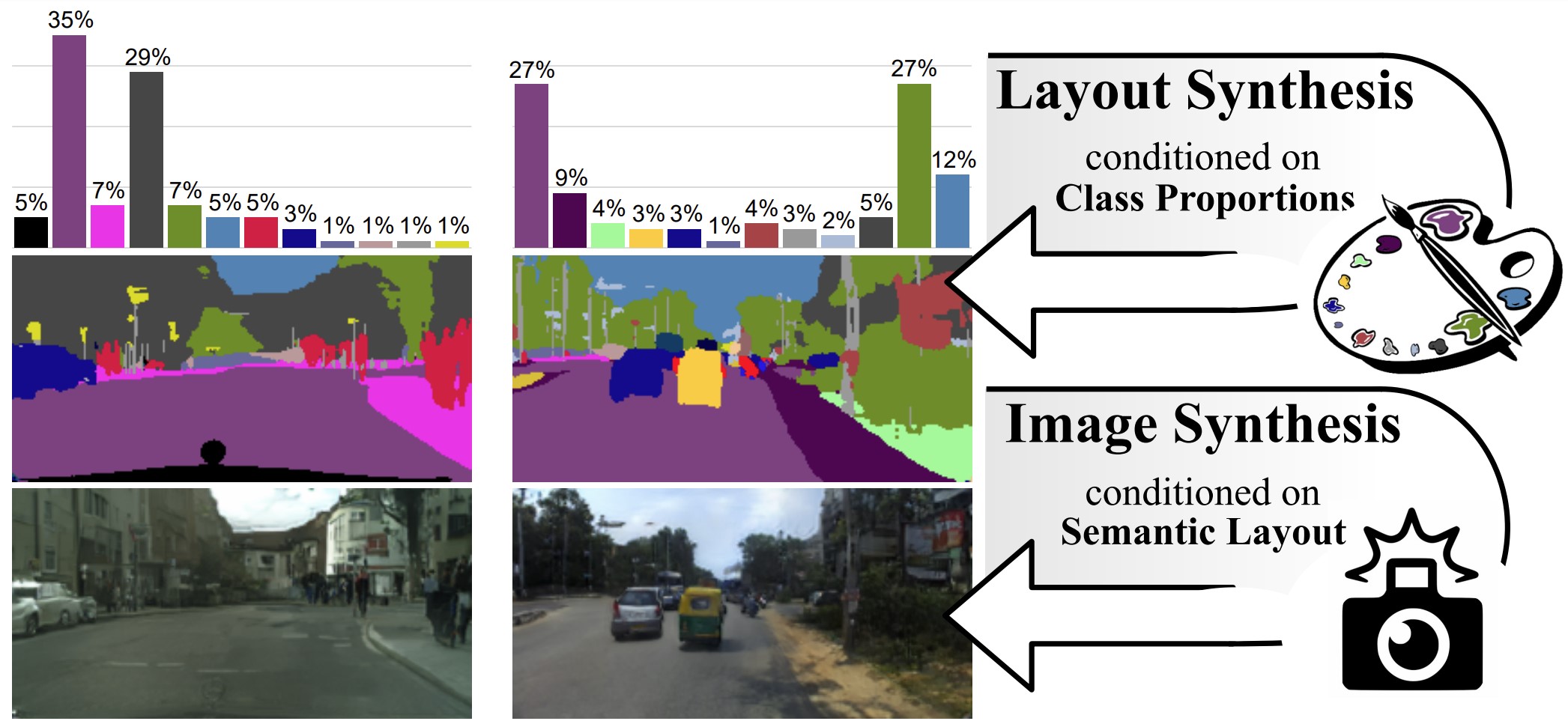

Semantic Palette: Guiding Scene Generation with Class Proportions

Guillaume Le Moing, Tuan-Hung Vu, Himalaya Jain, Patrick Pérez and Matthieu Cord

Computer Vision and Pattern Recognition (CVPR), 2021

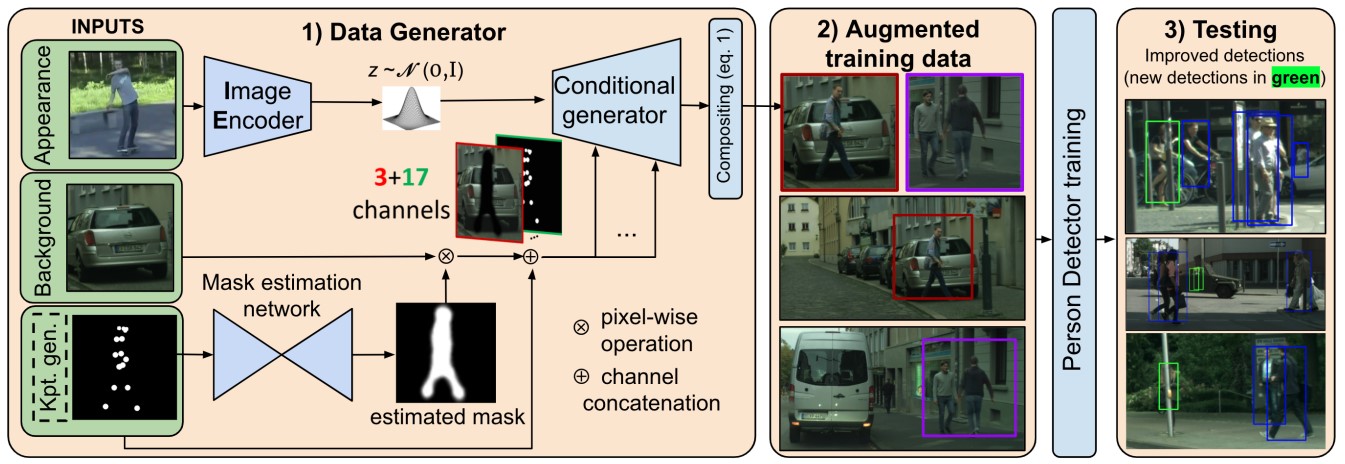

Artificial Dummies for Urban Dataset Augmentation

Antonín Vobecký, David Hurych, Michal Uřičář, Patrick Pérez, and Josef Šivic

AAAI Conference on Artificial Intelligence (AAAI), 2021

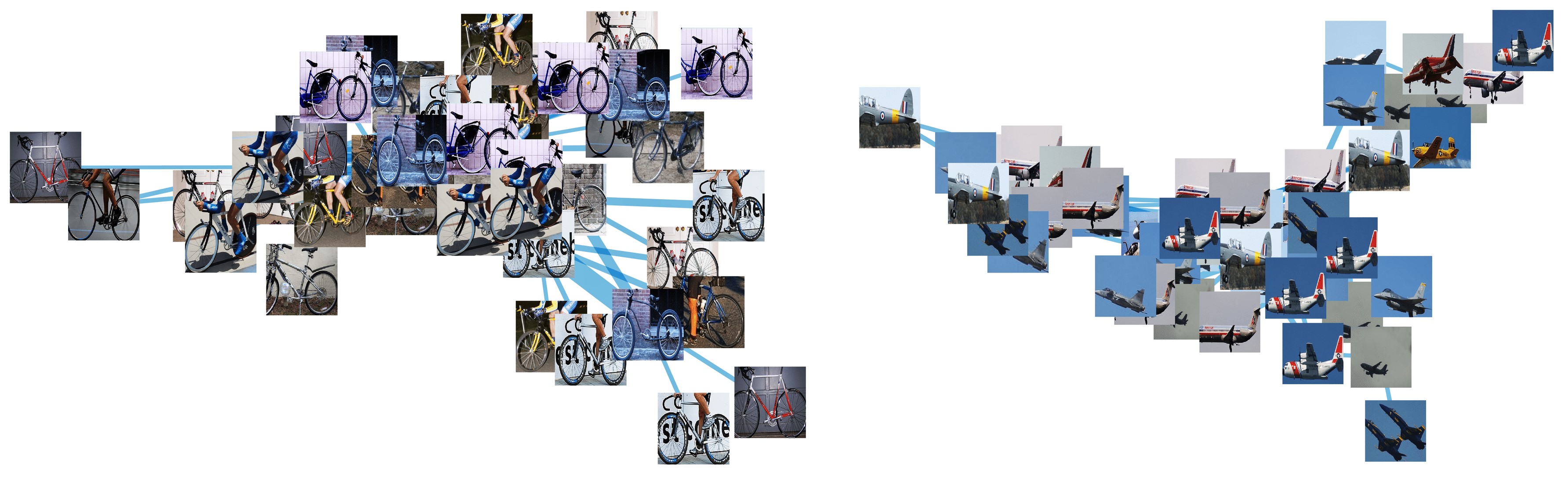

Toward Unsupervised, Multi-Object Discovery in Large-Scale Image Collections

Huy V. Vo, Patrick Pérez and Jean Ponce

European Conference on Computer Vision (ECCV), 2020

Learning Representations by Predicting Bags of Visual Words

Spyros Gidaris, Andrei Bursuc, Nikos Komodakis, Patrick Pérez, and Matthieu Cord

Computer Vision and Pattern Recognition (CVPR), 2020

This dataset does not exist: training models from generated images

Victor Besnier, Himalaya Jain, Andrei Bursuc, Matthieu Cord, and Patrick Pérez

International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2020

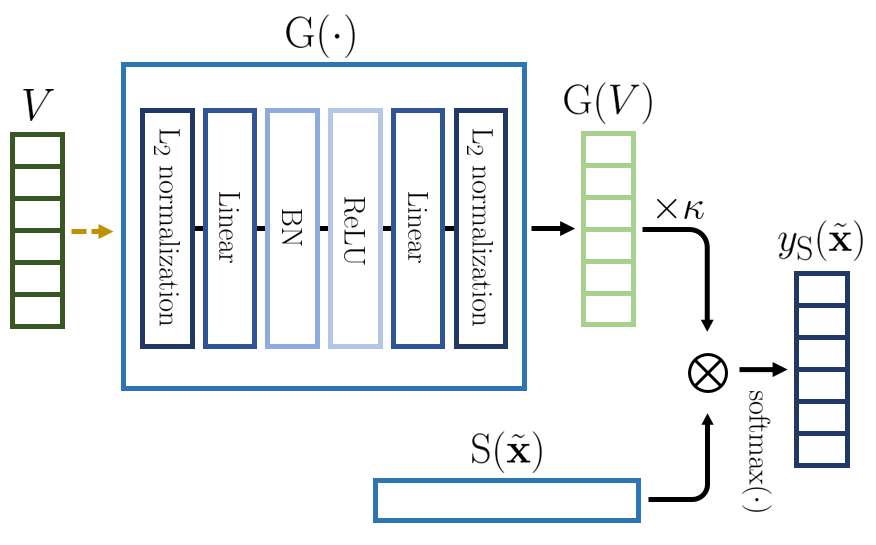

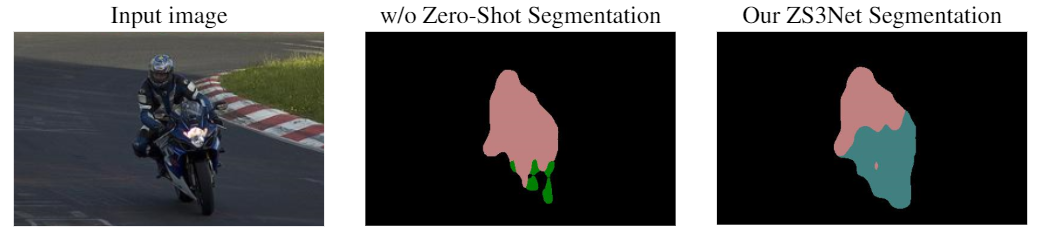

Zero-Shot Semantic Segmentation

Maxime Bucher, Tuan-Hung Vu, Matthieu Cord, and Patrick Pérez

Advances in Neural Information Processing Systems (NeurIPS), 2019

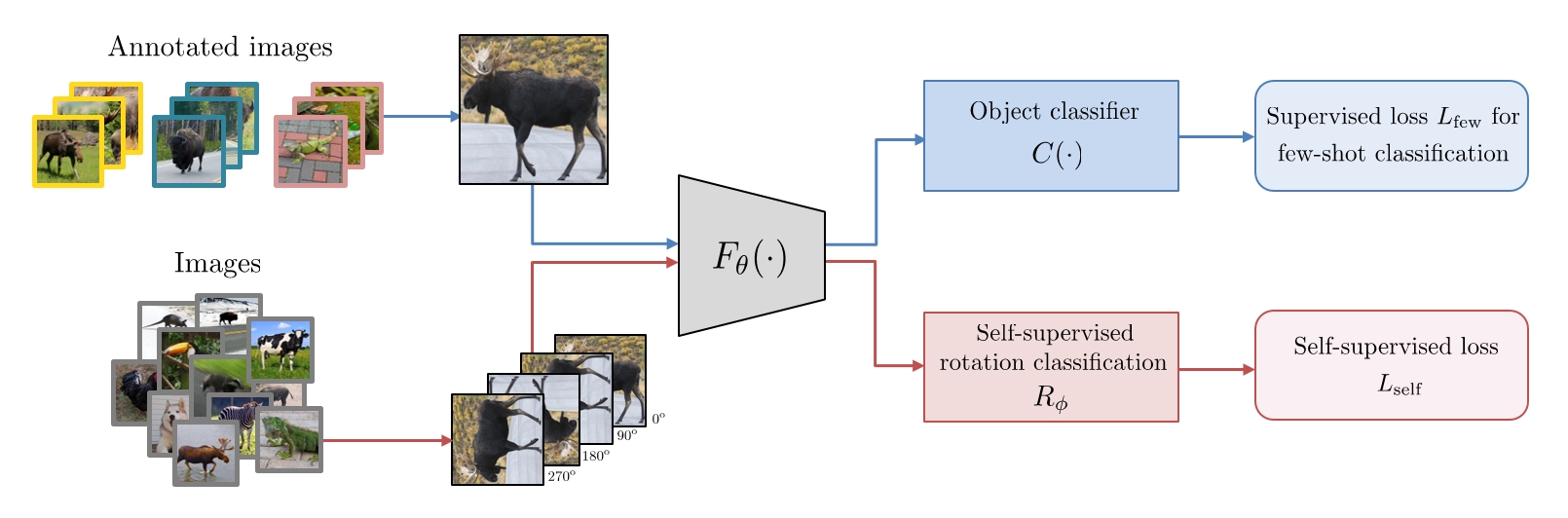

Boosting Few-Shot Visual Learning With Self-Supervision

Spyros Gidaris, Andrei Bursuc, Nikos Komodakis, Patrick Pérez, and Matthieu Cord

International Conference on Computer Vision (ICCV), 2019

Unsupervised Image Matching and Object Discovery as Optimization

Huy V. Vo, Francis Bach, Minsu Cho, Kai Han, Yann LeCun, Patrick Pérez and Jean Ponce

Computer Vision and Pattern Recognition (CVPR), 2019