Driving in action

Getting from sensory inputs to car control goes either through a modular stack (perception > localization > forecast > planning > actuation) or, more radically, through a single end-to-end model. We work on both strategies, more specifically on action forecasting, automatic interpretation of decisions taken by a driving system, and reinforcement / imitation learning for end-to-end systems (as in our RL work at CVPR’20).

Publications

UniTraj: A Unified Framework for Scalable Vehicle Trajectory Prediction

Lan Feng, Mohammadhossein Bahari, Kaouther Messaoud Ben Amor, Éloi Zablocki, Matthieu Cord, Alexandre Alahi

European Conference on Computer Vision, 2024

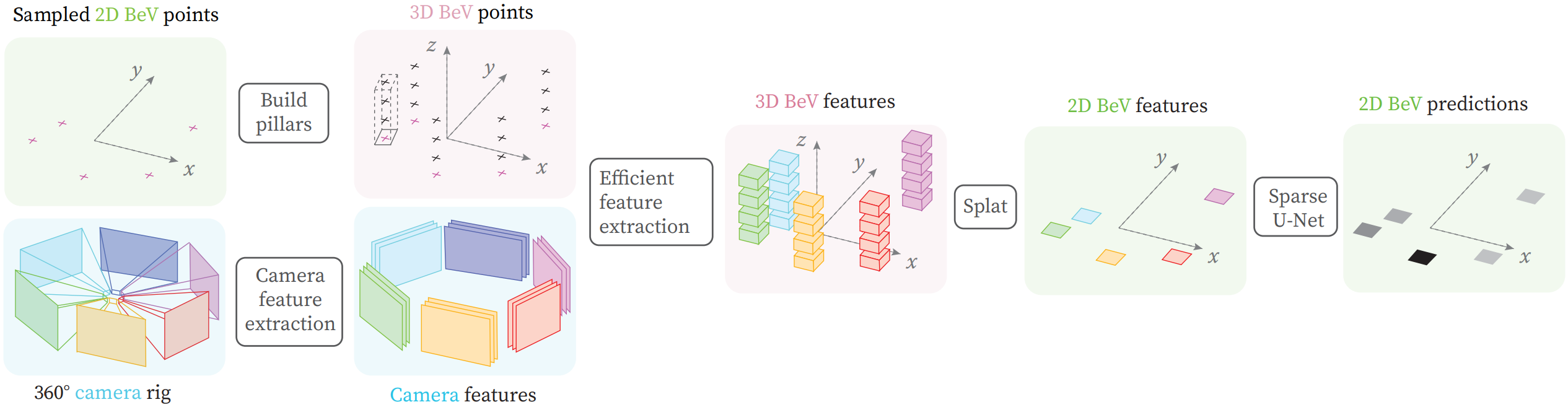

PointBeV: A Sparse Approach to BeV Predictions

Loïck Chambon, Éloi Zablocki, Mickaël Chen, Florent Bartoccioni, Patrick Pérez, Matthieu Cord

Computer Vision and Pattern Recognition (CVPR), 2024

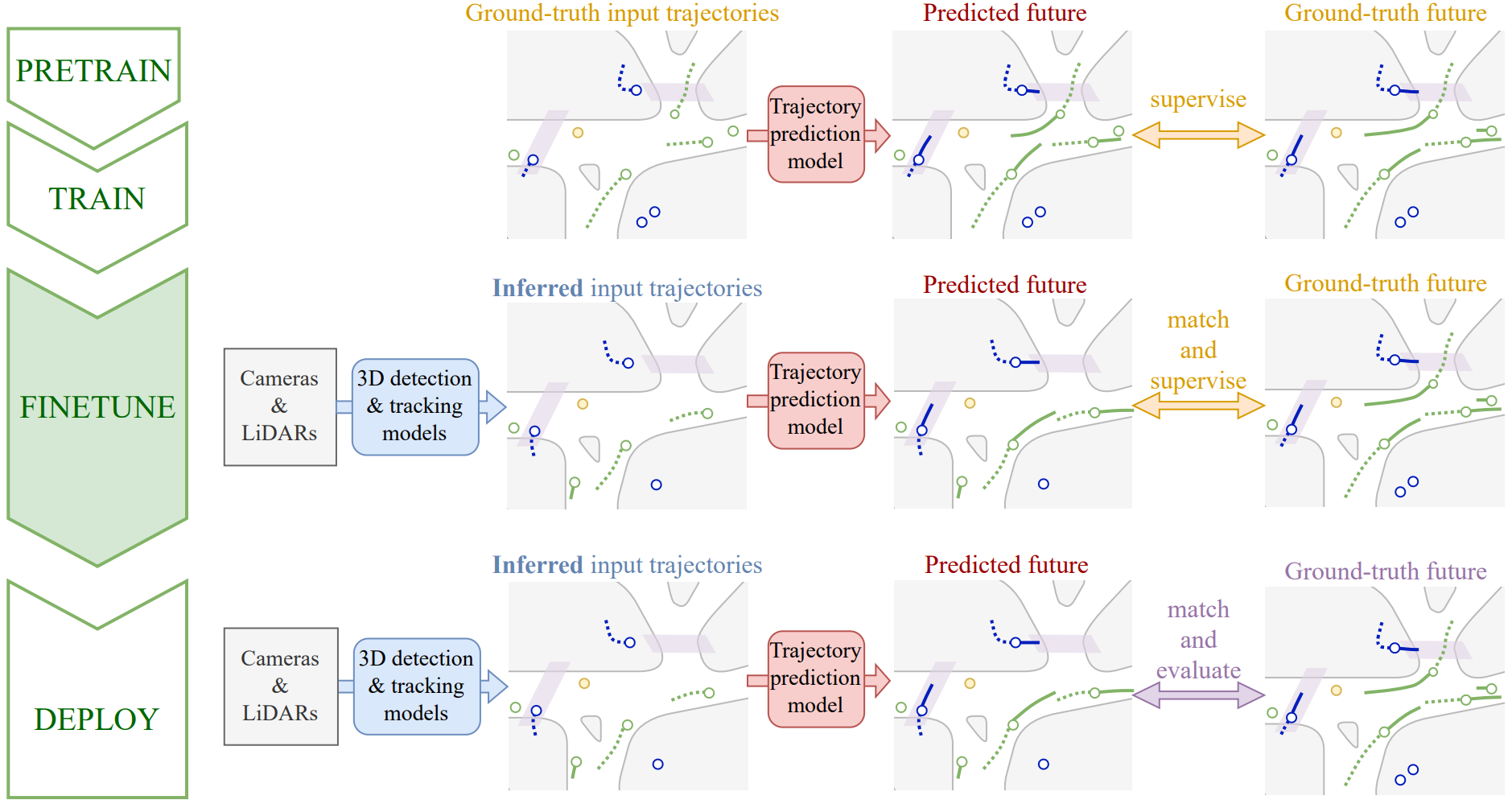

Valeo4Cast: A Modular Approach to End-to-End Forecasting

Yihong Xu, Éloi Zablocki, Alexandre Boulch, Gilles Puy, Mickaël Chen, Florent Bartoccioni, Nermin Samet, Oriane Siméoni, Spyros Gidaris, Tuan-Hung Vu, Andrei Bursuc, Eduardo Valle, Renaud Marlet, Matthieu Cord

Winning solution to the "Unified Detection, Tracking and Forecasting" Argoverse 2 challenge @CVPR Workshop on Autonomous Driving (WAD), 2024

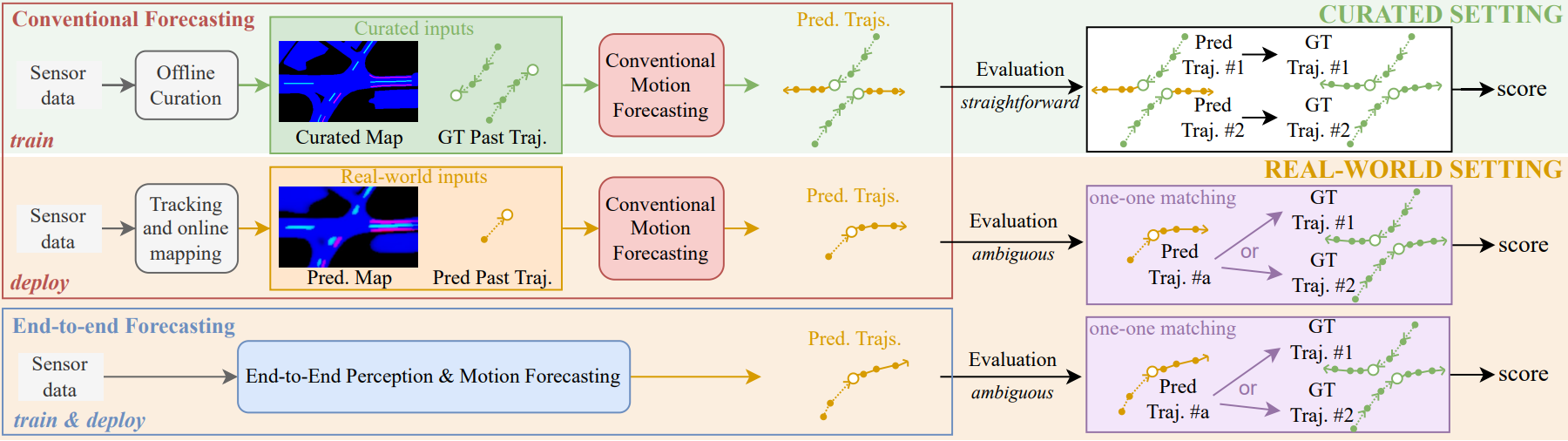

Towards Motion Forecasting with Real-World Perception Inputs: Are End-to-End Approaches Competitive?

Yihong Xu, Loïck Chambon, Éloi Zablocki, Mickaël Chen, Alexandre Alahi, Matthieu Cord, Patrick Pérez

IEEE International Conference on Robotics and Automation (ICRA), 2024

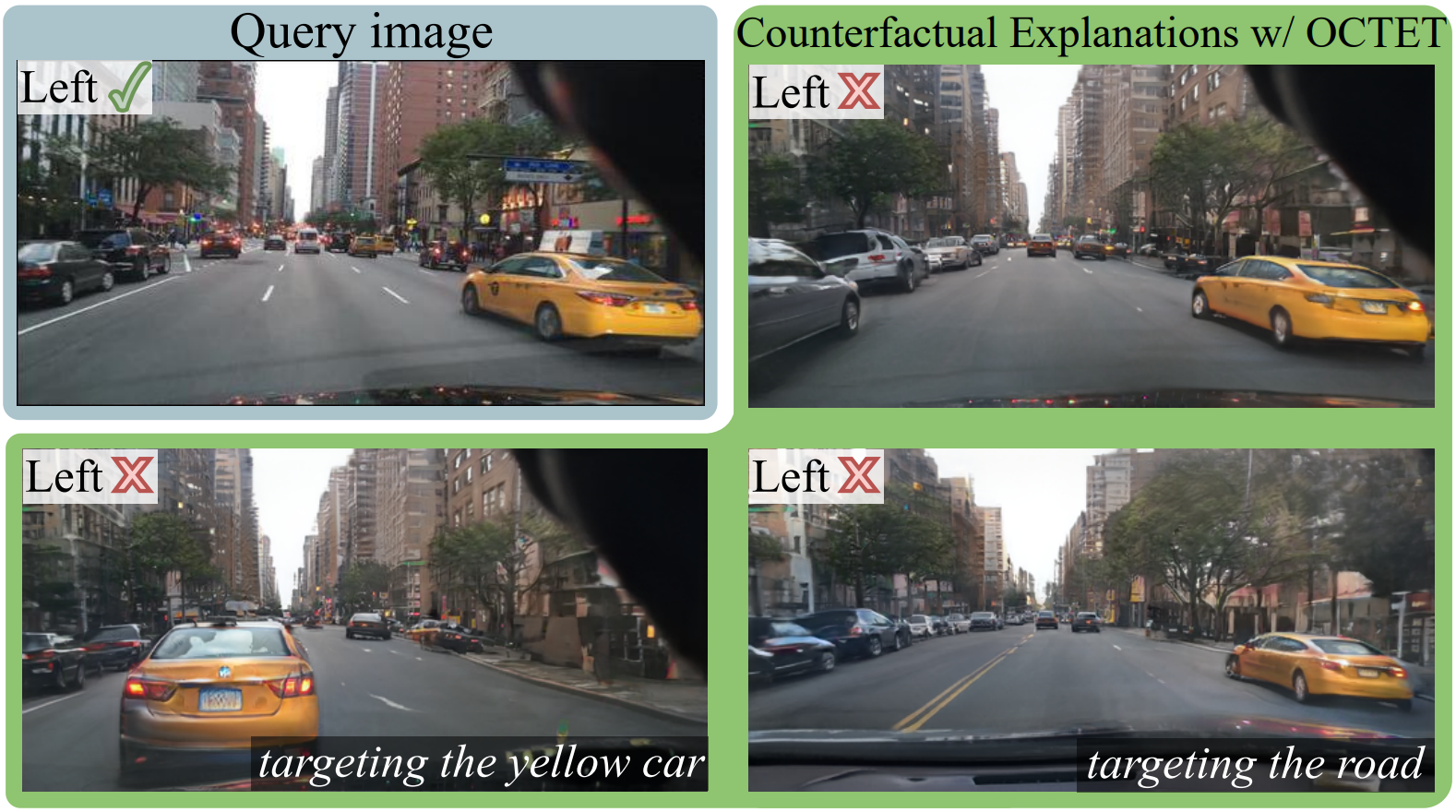

OCTET: Object-aware Counterfactual Explanations

Mehdi Zemni, Mickaël Chen, Éloi Zablocki, Hédi Ben-Younes, Patrick Pérez, Matthieu Cord

Computer Vision and Pattern Recognition (CVPR), 2023

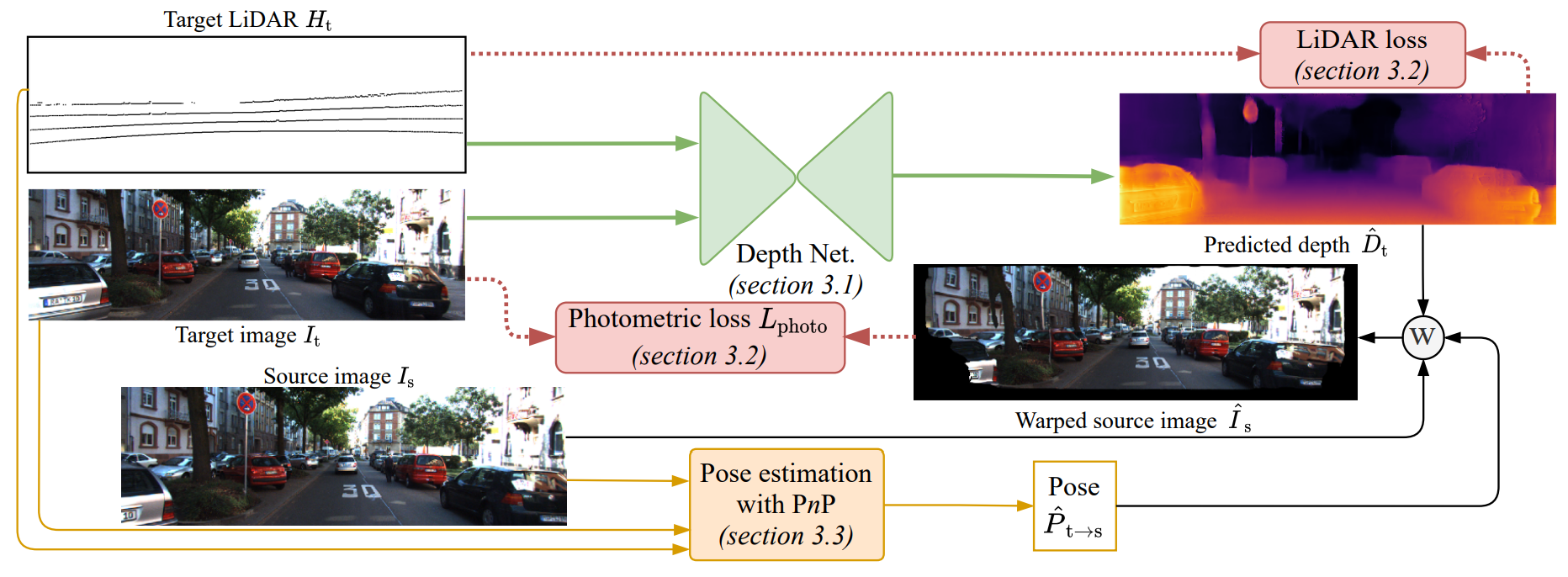

LiDARTouch: Monocular metric depth estimation with a few-beam LiDAR

Florent Bartoccioni, Éloi Zablocki, Patrick Pérez, Matthieu Cord, Karteek Alahari

Computer Vision and Image Understanding (CVIU), 2022

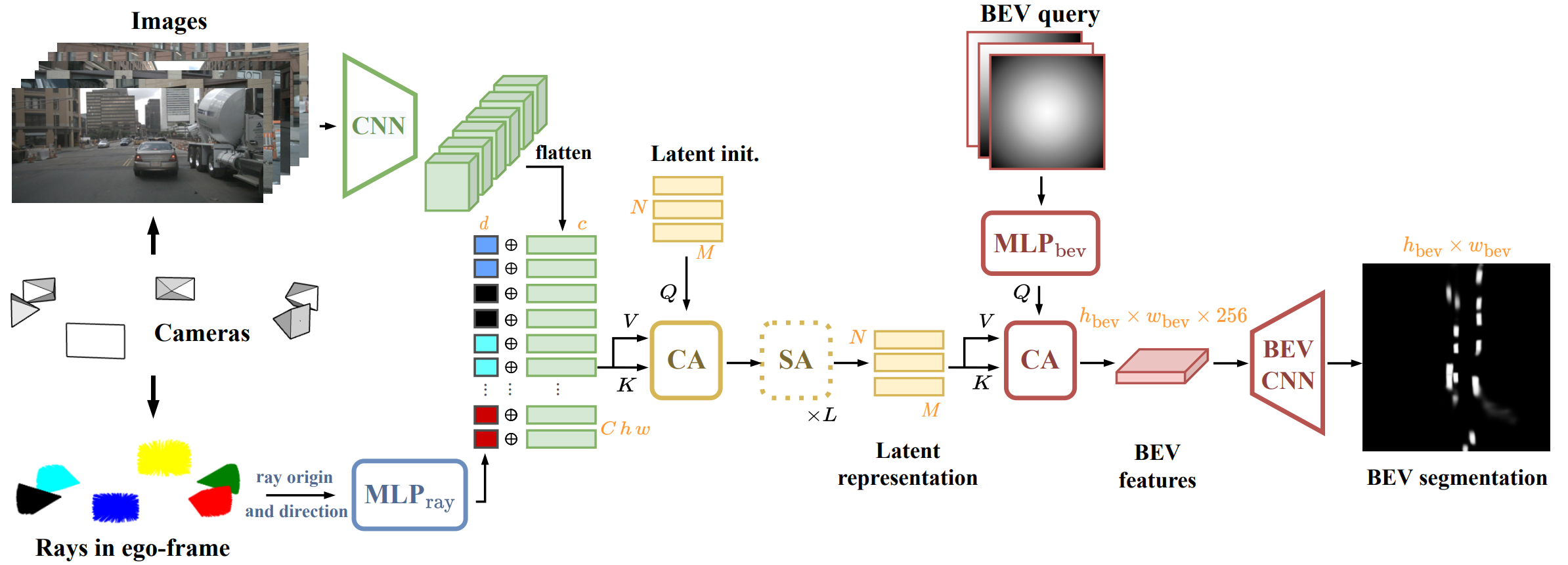

LaRa: Latents and Rays for Multi-Camera Bird's-Eye-View Semantic Segmentation

Florent Bartoccioni, Éloi Zablocki, Andrei Bursuc, Patrick Pérez, Matthieu Cord, Karteek Alahari

Conference on Robot Learning, 2022

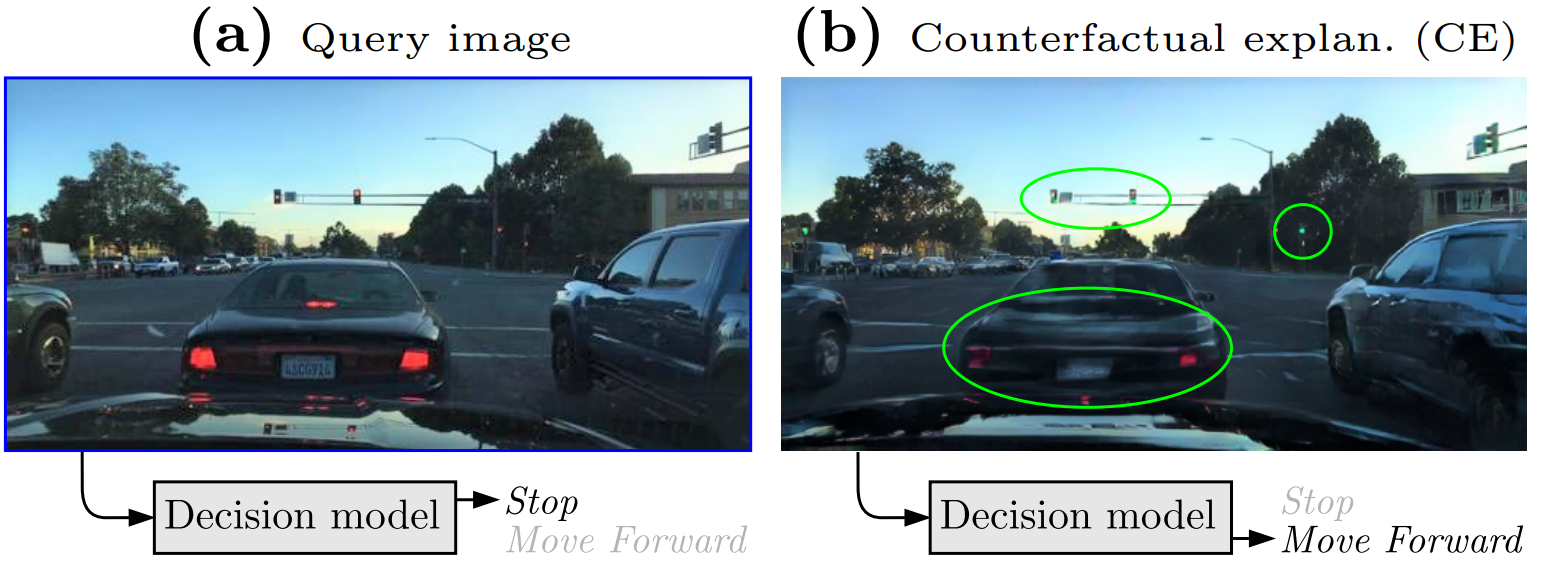

STEEX: Steering Counterfactual Explanations with Semantics

Paul Jacob, Éloi Zablocki, Hédi Ben-Younes, Mickaël Chen, Patrick Pérez, Matthieu Cord

European Conference on Computer Vision, 2022

Explainability of deep vision-based autonomous driving systems: Review and challenges

Éloi Zablocki*, Hédi Ben-Younes*, Patrick Pérez, Matthieu Cord

International Journal of Computer Vision, 2022

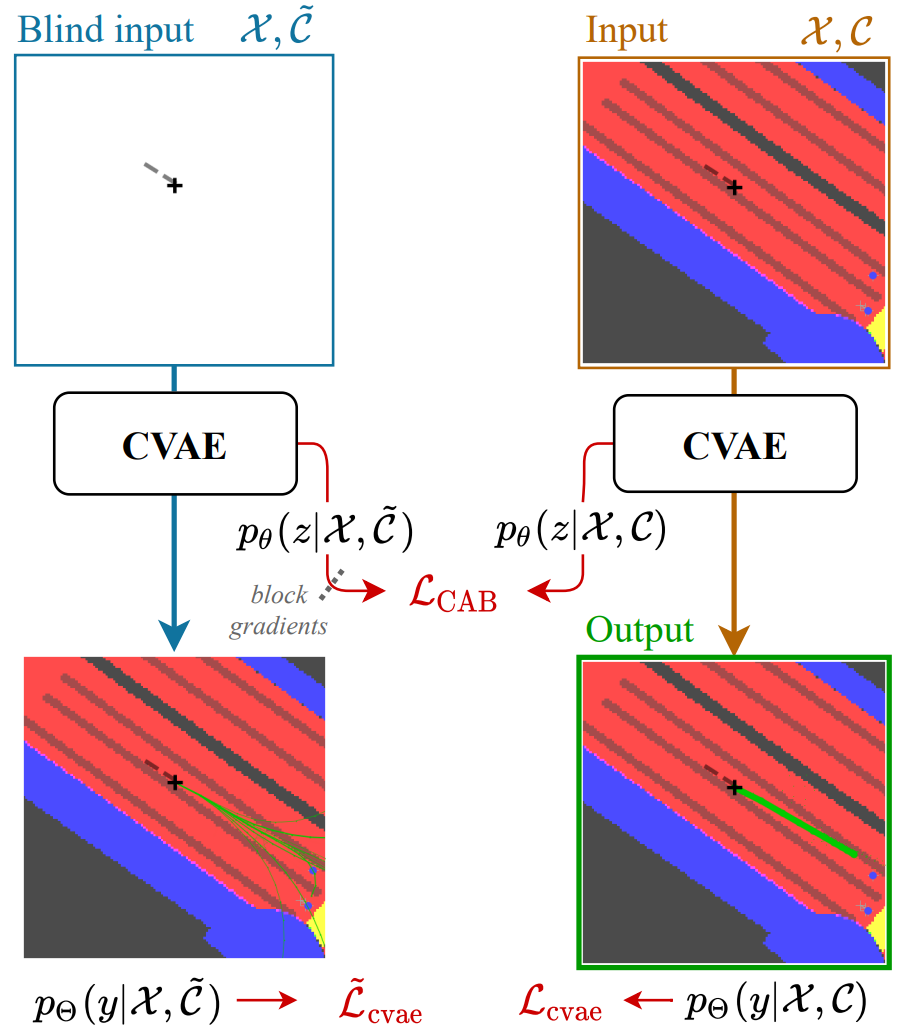

Raising context awareness in motion forecasting

Hédi Ben-Younes*, Éloi Zablocki*, Mickaël Chen, Patrick Pérez, Matthieu Cord

Computer Vision and Pattern Recognition (CVPR) Workshop, 2022

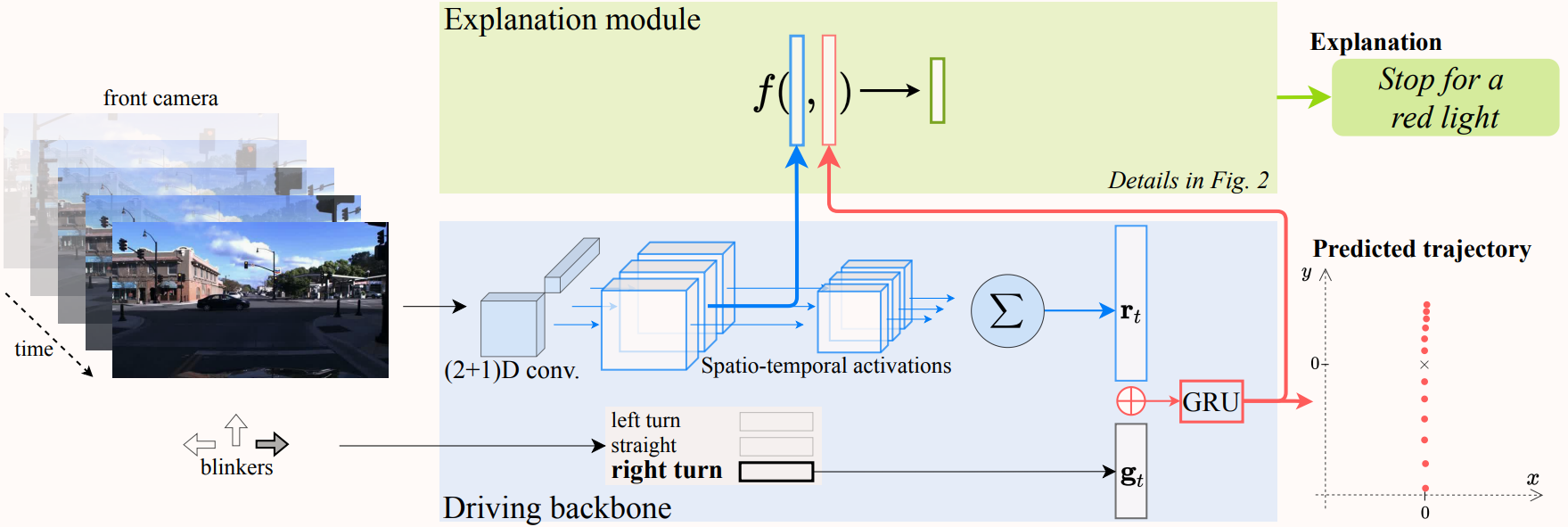

Driving behavior explanation with multi-level fusion

Hédi Ben-Younes*, Éloi Zablocki*, Patrick Pérez, Matthieu Cord

Pattern Recognition (PR), 2022

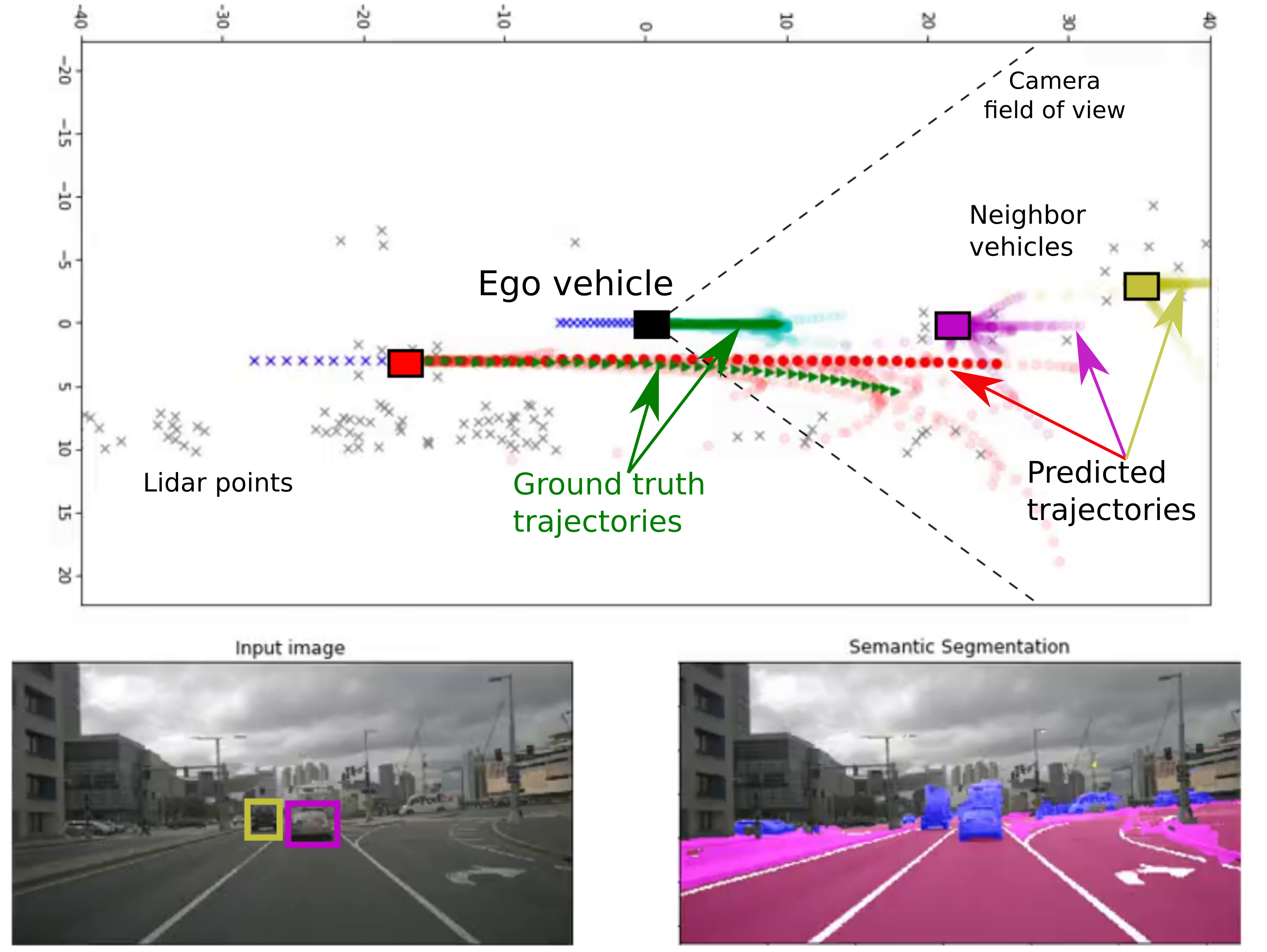

PLOP: Probabilistic poLynomial Objects trajectory Prediction for autonomous driving

Thibault Buhet, Emilie Wirbel, Andrei Bursuc, Xavier Perrotton

Conference on Robot Learning (CoRL), 2020

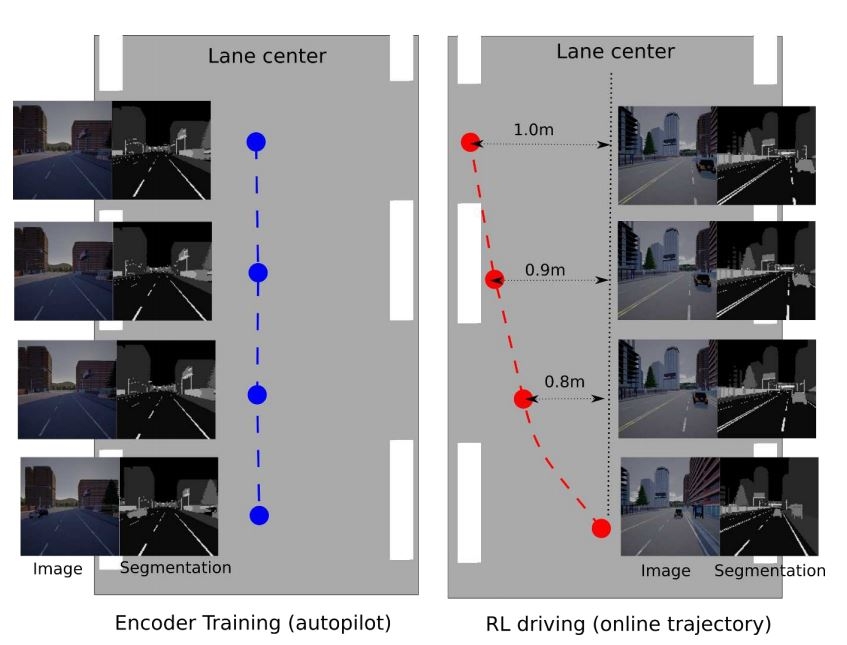

End-to-End Model-Free Reinforcement Learning for Urban Driving using Implicit Affordances

Marin Toromanoff, Emilie Wirbel, Fabien Moutarde

Computer Vision and Pattern Recognition (CVPR), 2020

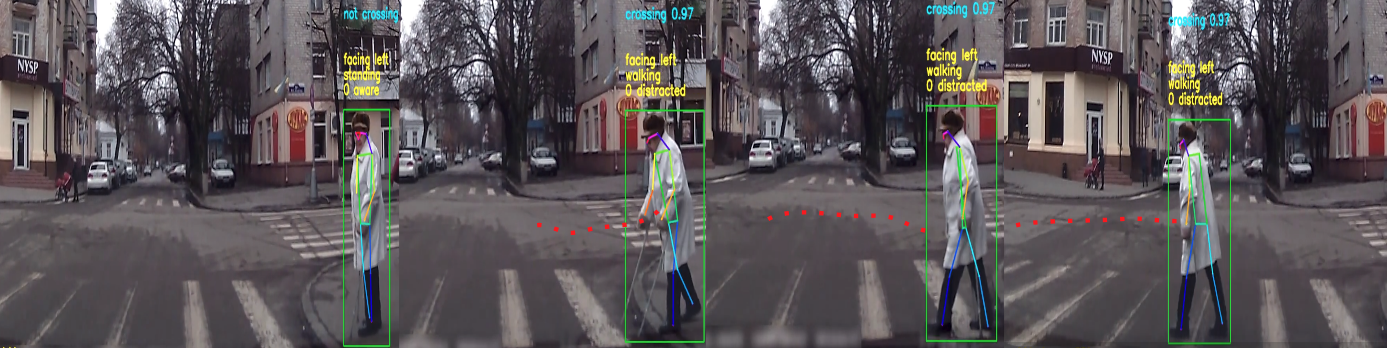

VRUNet: Multi-Task Learning Model for Intent Prediction of Vulnerable Road Users

Adithya Ranga, Filippo Giruzzi, Jagdish Bhanushali, Emilie Wirbel, Patrick Pérez, Tuan-Hung Vu, Xavier Perrotton

Electronic Imaging, 2020