STEEX: Steering Counterfactual Explanations with Semantics

Paul Jacob, Éloi Zablocki, Hédi Ben-Younes, Mickaël Chen, Patrick Pérez, Matthieu Cord

ECCV 2022

Abstract

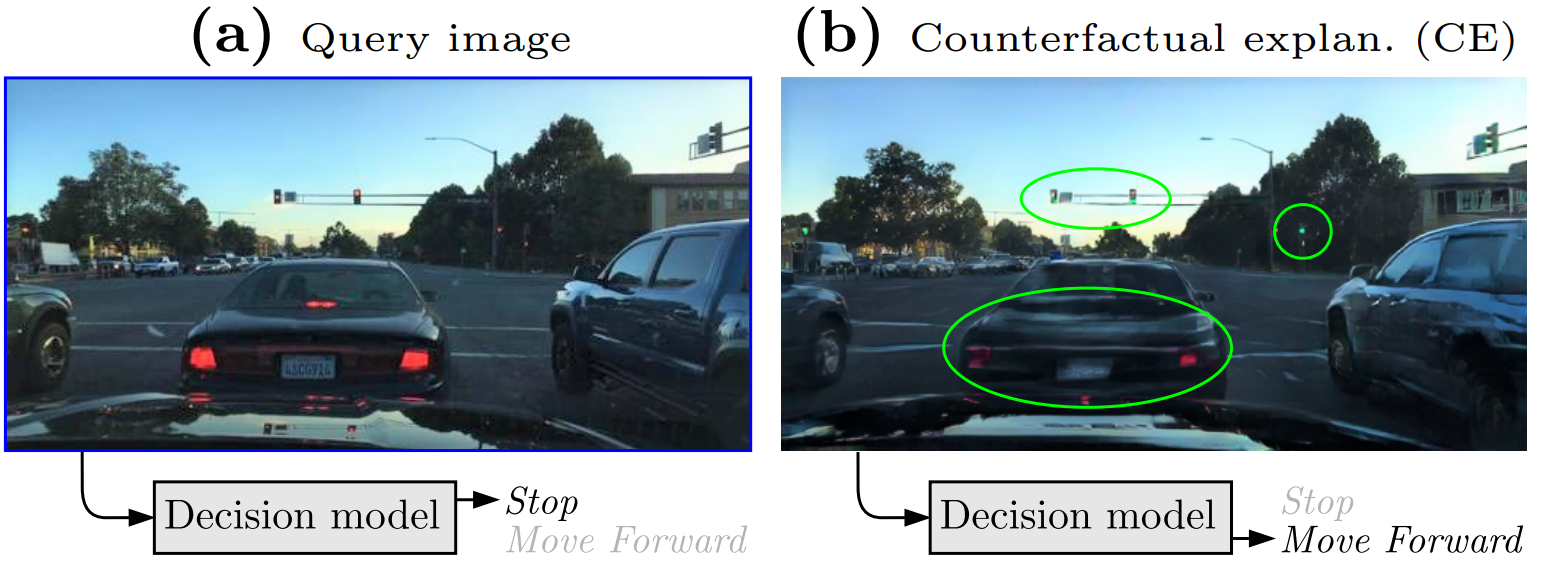

As deep learning models are increasingly used in safety-critical applications, explainability and trustworthiness become major concerns. For simple images, such as low-resolution face portraits, synthesizing visual counterfactual explanations has recently been proposed as a way to uncover the decision mechanisms of a trained classification model. In this work, we address the problem of producing counterfactual explanations for high-quality images and complex scenes. Leveraging recent semantic-to-image models, we propose a new generative counterfactual explanation framework that produces plausible and sparse modifications which preserve the overall scene structure. Furthermore, we introduce the concept of "region-targeted counterfactual explanations", and a corresponding framework, where users can guide the generation of counterfactuals by specifying a set of semantic regions of the query image the explanation must be about. Extensive experiments are conducted on challenging datasets including high-quality portraits (CelebAMask-HQ) and driving scenes (BDD100k).
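The region-targeted idea can be illustrated with a toy sketch. The abstract does not describe the optimization procedure, so everything below is an assumption made for illustration: each semantic region is represented by a small latent code, the decision model is a stand-in linear classifier, and the function name `region_targeted_counterfactual` and its parameters are hypothetical. Only the codes of the user-selected regions are updated, and a distance penalty keeps the change small.

```python
import numpy as np

def region_targeted_counterfactual(codes, weights, bias, target_regions,
                                   steps=200, lr=0.1, lam=0.1):
    """Toy sketch (not the authors' implementation).

    codes:          dict region name -> latent code (1-D array), hypothetical
    weights, bias:  stand-in linear classifier over the per-region codes
    target_regions: regions the user allows the explanation to modify
    """
    original = {name: z.copy() for name, z in codes.items()}
    edited = {name: z.copy() for name, z in codes.items()}

    def logit(c):
        return sum(float(weights[n] @ c[n]) for n in c) + bias

    positive = logit(original) >= 0          # decision on the query
    direction = 1.0 if positive else -1.0    # push the logit the other way

    for _ in range(steps):
        if (logit(edited) >= 0) != positive:
            break  # decision flipped: stop as early as possible
        for name in target_regions:
            # Gradient of direction * logit + lam * ||z - z0||^2 w.r.t. z
            grad = direction * weights[name] \
                + 2.0 * lam * (edited[name] - original[name])
            edited[name] -= lr * grad        # other regions are never touched

    return edited, logit(original), logit(edited)

# Hypothetical three-region scene; only the "car" region may change.
codes = {"sky": np.array([1.0, 0.0]),
         "road": np.array([0.5, 0.5]),
         "car": np.array([0.0, 1.0])}
weights = {"sky": np.array([0.2, 0.1]),
           "road": np.array([1.0, -0.5]),
           "car": np.array([0.3, 0.8])}

edited, before, after = region_targeted_counterfactual(
    codes, weights, bias=0.0, target_regions=["car"])
```

In this sketch the classifier's decision changes while the `sky` and `road` codes stay identical to the query, which mirrors the notion of an explanation restricted to specified semantic regions. A real system would decode the edited codes back into an image with a semantic-to-image generator, a step omitted here.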

BibTeX

@inproceedings{steex2022,
  author    = {Paul Jacob and
               {\'{E}}loi Zablocki and
               Hedi Ben{-}Younes and
               Micka{\"{e}}l Chen and
               Patrick P{\'{e}}rez and
               Matthieu Cord},
  title     = {STEEX: Steering Counterfactual Explanations with Semantics},
  booktitle = {ECCV},
  publisher = {Springer},
  year      = {2022}
}