Multi-sensor perception

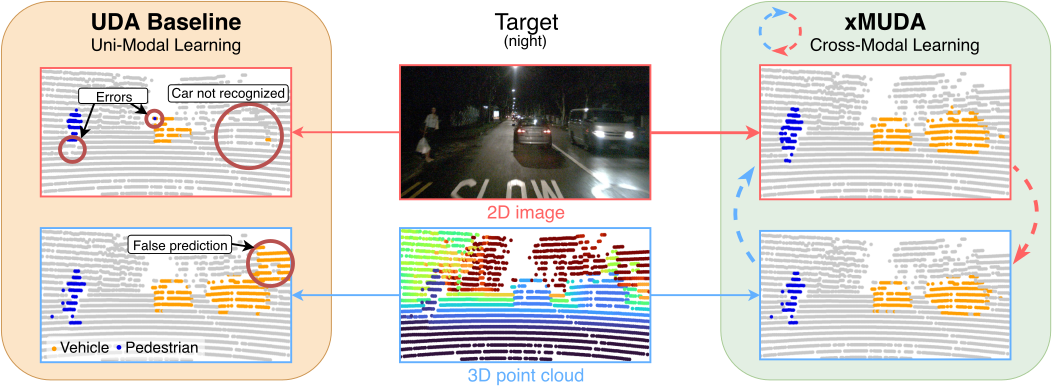

Automated driving relies first on a diverse range of sensors, such as Valeo’s fish-eye cameras, LiDARs, radars and ultrasonic sensors. Making the best use of each sensor's output at every instant is fundamental to understanding the vehicle's complex environment and to gaining robustness. To this end, we explore various machine learning approaches in which sensors are considered either in isolation (as with radar in CARRADA at ICPR’20) or collectively (as in xMUDA at CVPR’20).

Publications

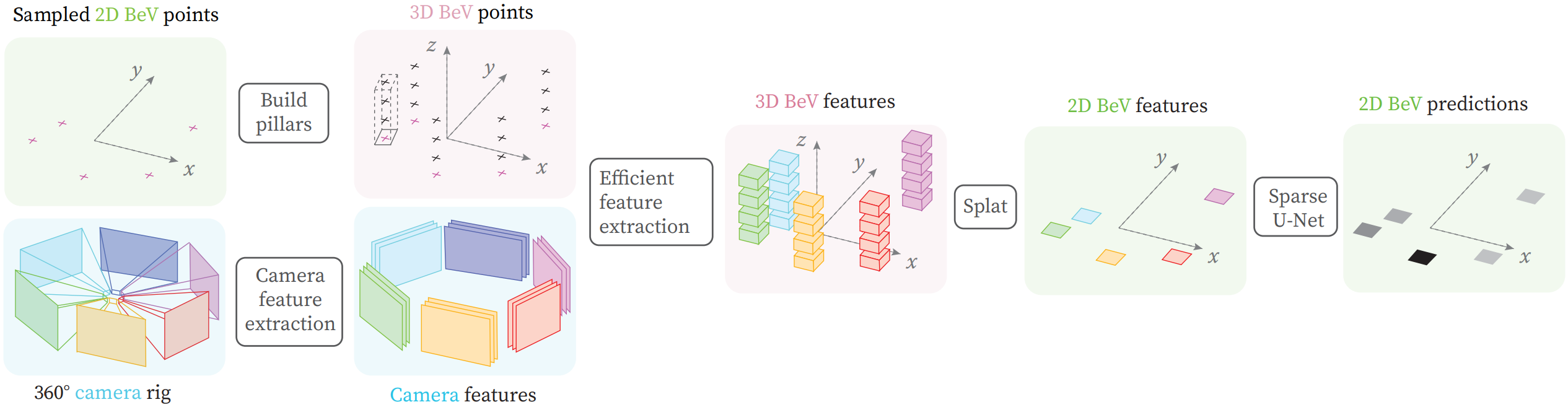

PointBeV: A Sparse Approach to BeV Predictions

Loïck Chambon, Éloi Zablocki, Mickaël Chen, Florent Bartoccioni, Patrick Pérez, Matthieu Cord

Computer Vision and Pattern Recognition (CVPR), 2024

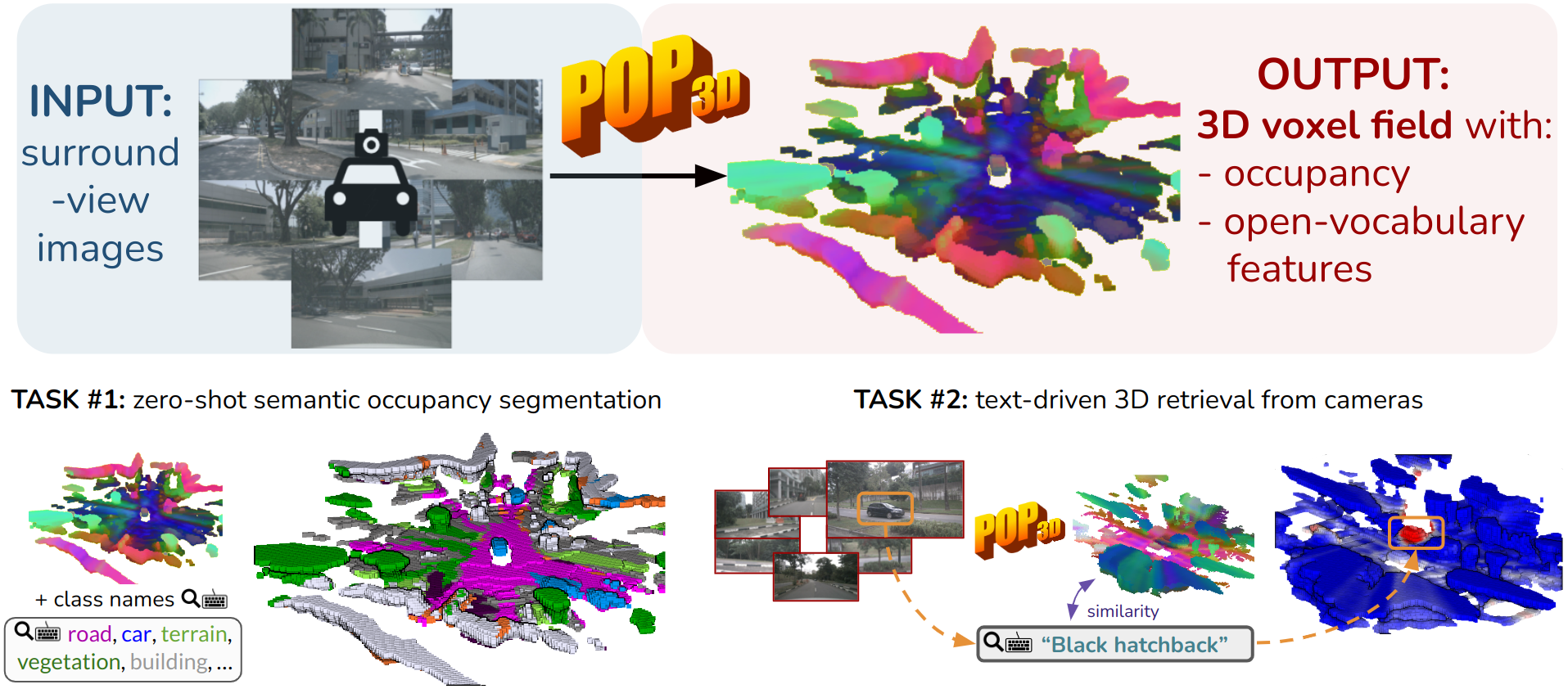

POP-3D: Open-Vocabulary 3D Occupancy Prediction from Images

Antonin Vobecky, Oriane Siméoni, David Hurych, Spyros Gidaris, Andrei Bursuc, Patrick Pérez, Josef Sivic

Advances in Neural Information Processing Systems (NeurIPS), 2023

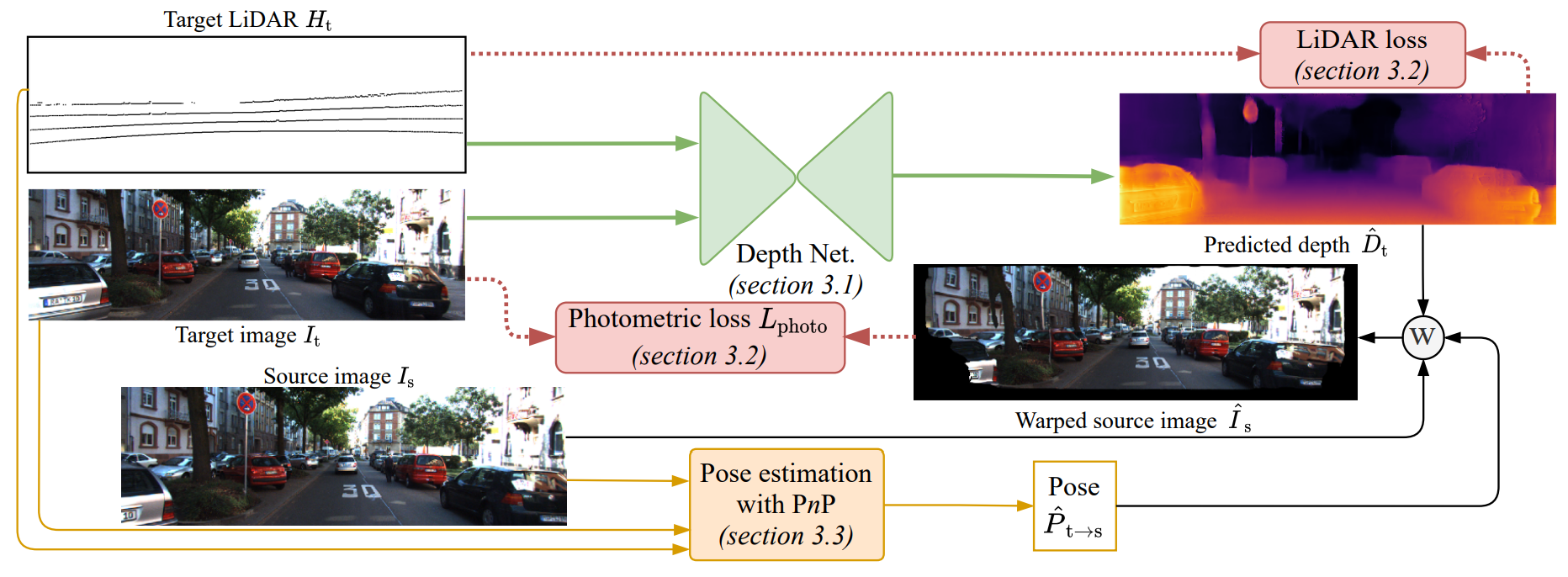

LiDARTouch: Monocular metric depth estimation with a few-beam LiDAR

Florent Bartoccioni, Éloi Zablocki, Patrick Pérez, Matthieu Cord, Karteek Alahari

Computer Vision and Image Understanding (CVIU), 2022

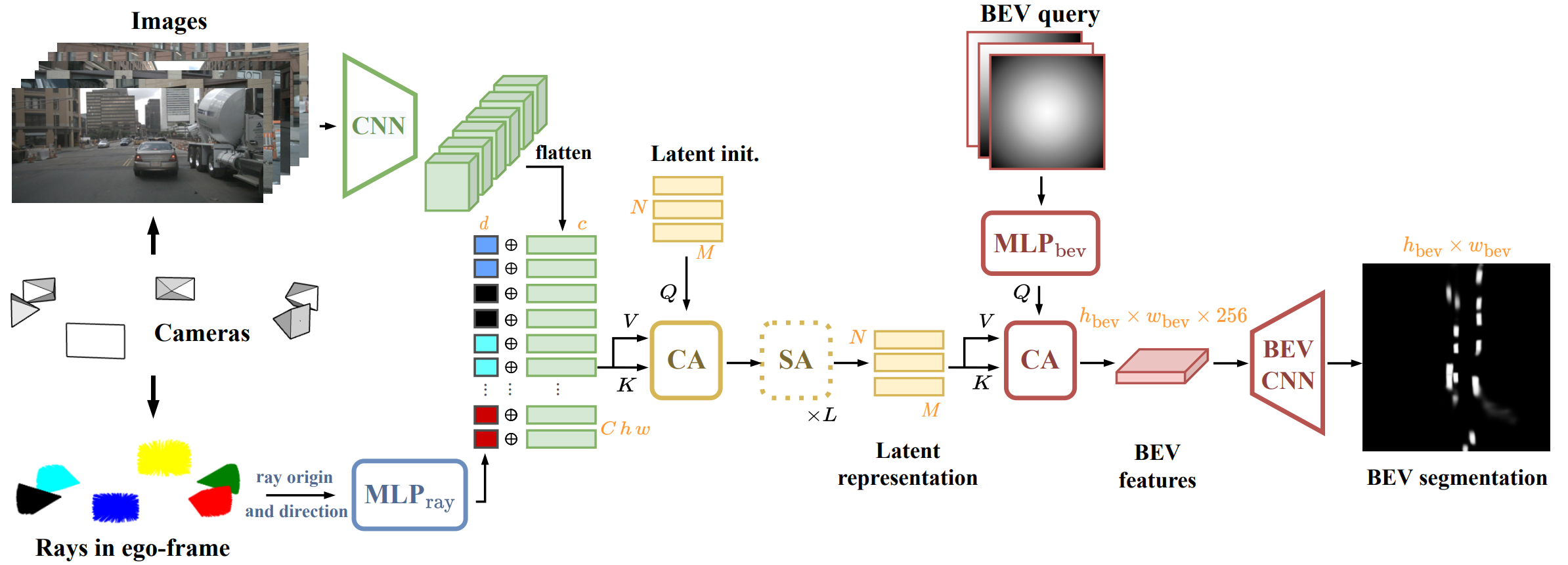

LaRa: Latents and Rays for Multi-Camera Bird's-Eye-View Semantic Segmentation

Florent Bartoccioni, Éloi Zablocki, Andrei Bursuc, Patrick Pérez, Matthieu Cord, Karteek Alahari

Conference on Robot Learning (CoRL), 2022

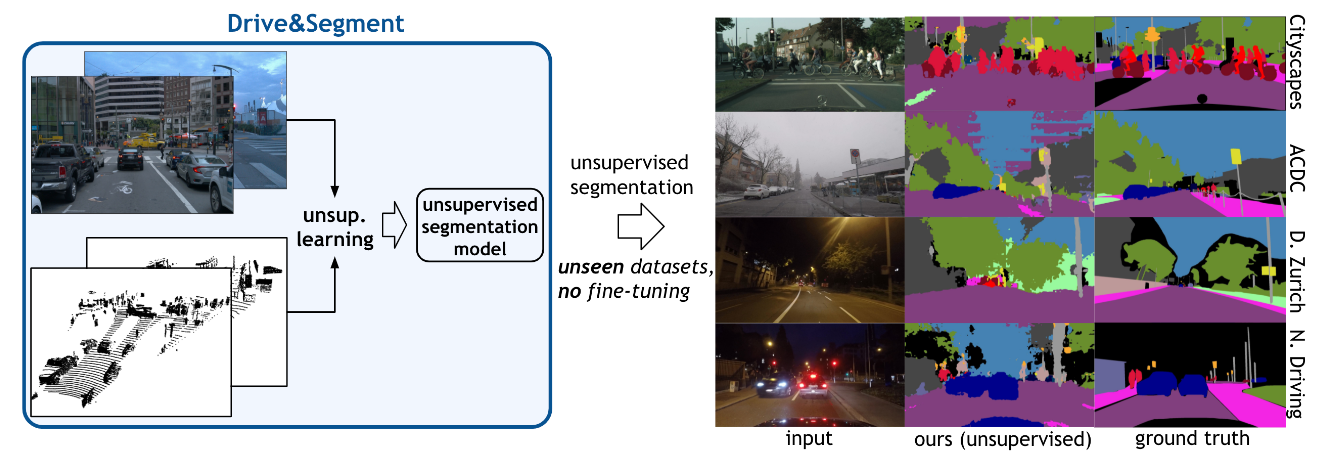

Drive&Segment: Unsupervised Semantic Segmentation of Urban Scenes via Cross-modal Distillation

Antonin Vobecky, Oriane Siméoni, David Hurych, Spyros Gidaris, Andrei Bursuc, Patrick Pérez, Josef Sivic

European Conference on Computer Vision (ECCV), 2022

Raw High-Definition Radar for Multi-Task Learning

Julien Rebut, Arthur Ouaknine, Waqas Malik, and Patrick Pérez

Computer Vision and Pattern Recognition (CVPR), 2022

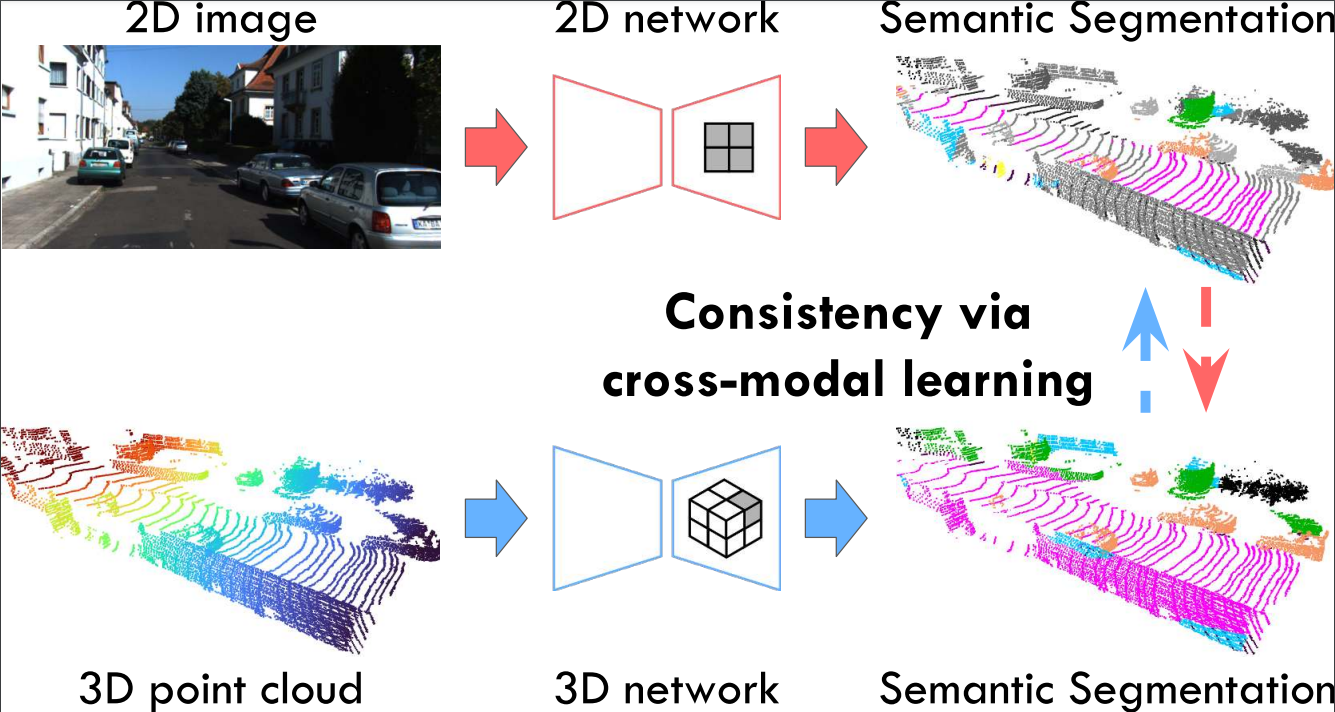

Cross-modal Learning for Domain Adaptation in 3D Semantic Segmentation

Maximilian Jaritz, Tuan-Hung Vu, Raoul de Charette, Émilie Wirbel, and Patrick Pérez

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2022

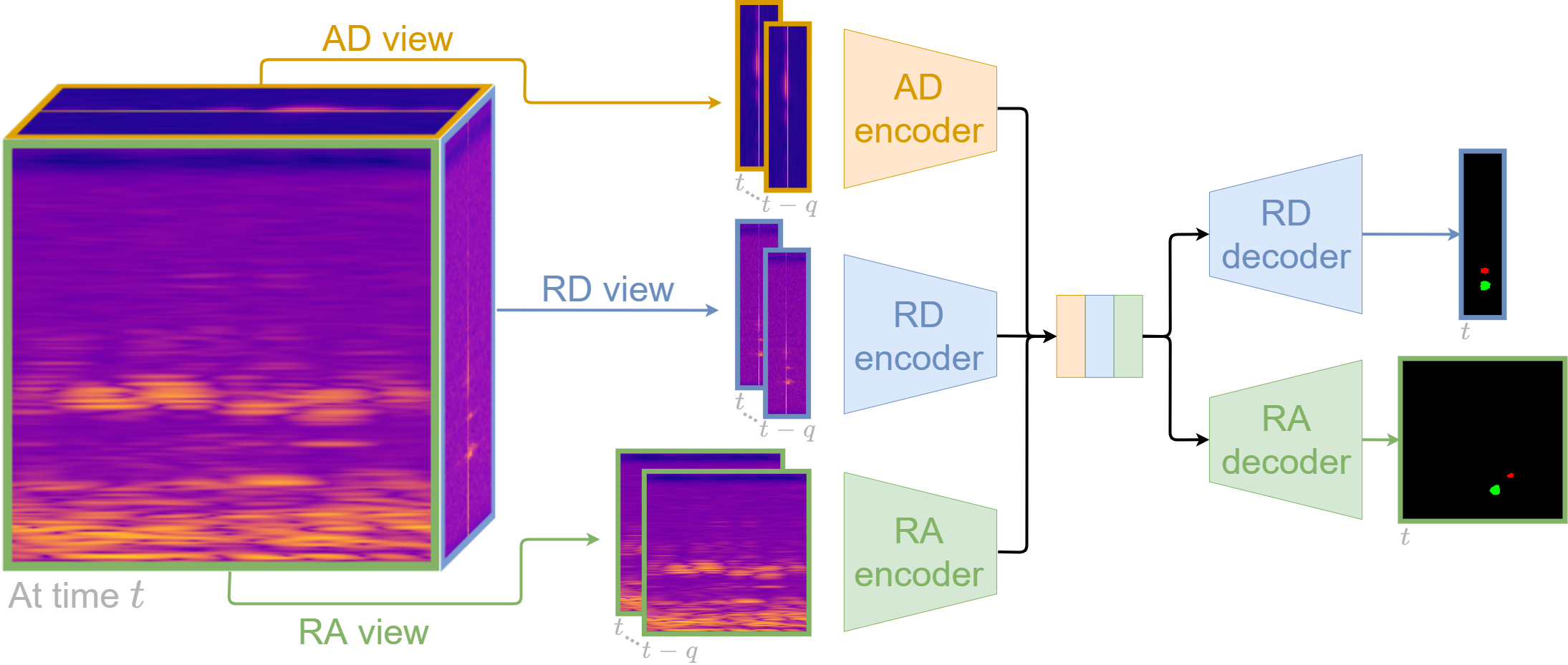

Multi-View Radar Semantic Segmentation

Arthur Ouaknine, Alasdair Newson, Patrick Pérez, Florence Tupin and Julien Rebut

International Conference on Computer Vision (ICCV), 2021

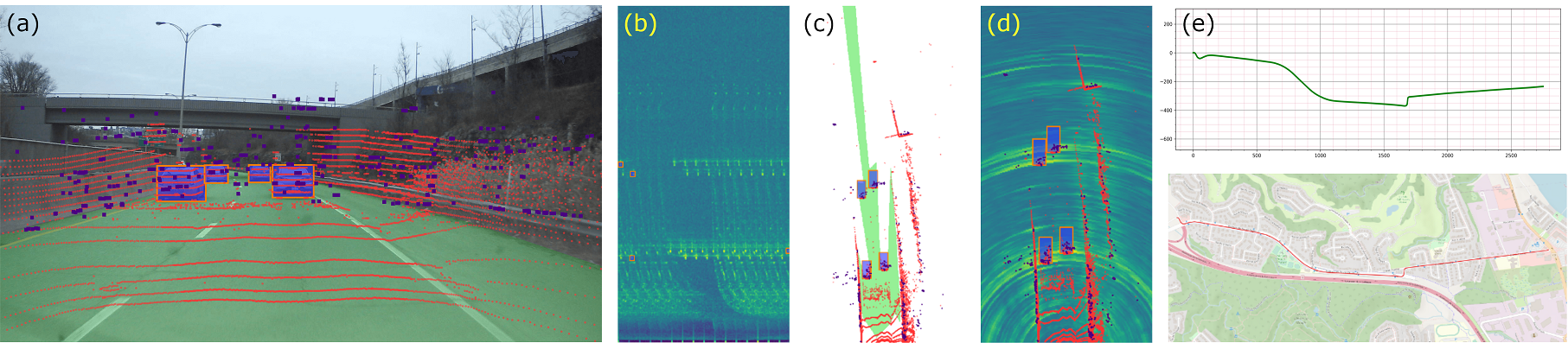

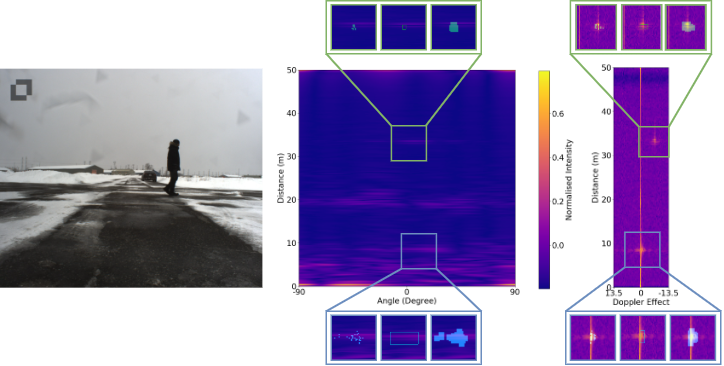

CARRADA Dataset: Camera and Automotive Radar with Range-Angle-Doppler Annotations

Arthur Ouaknine, Alasdair Newson, Julien Rebut, Florence Tupin and Patrick Pérez

International Conference on Pattern Recognition (ICPR), 2020

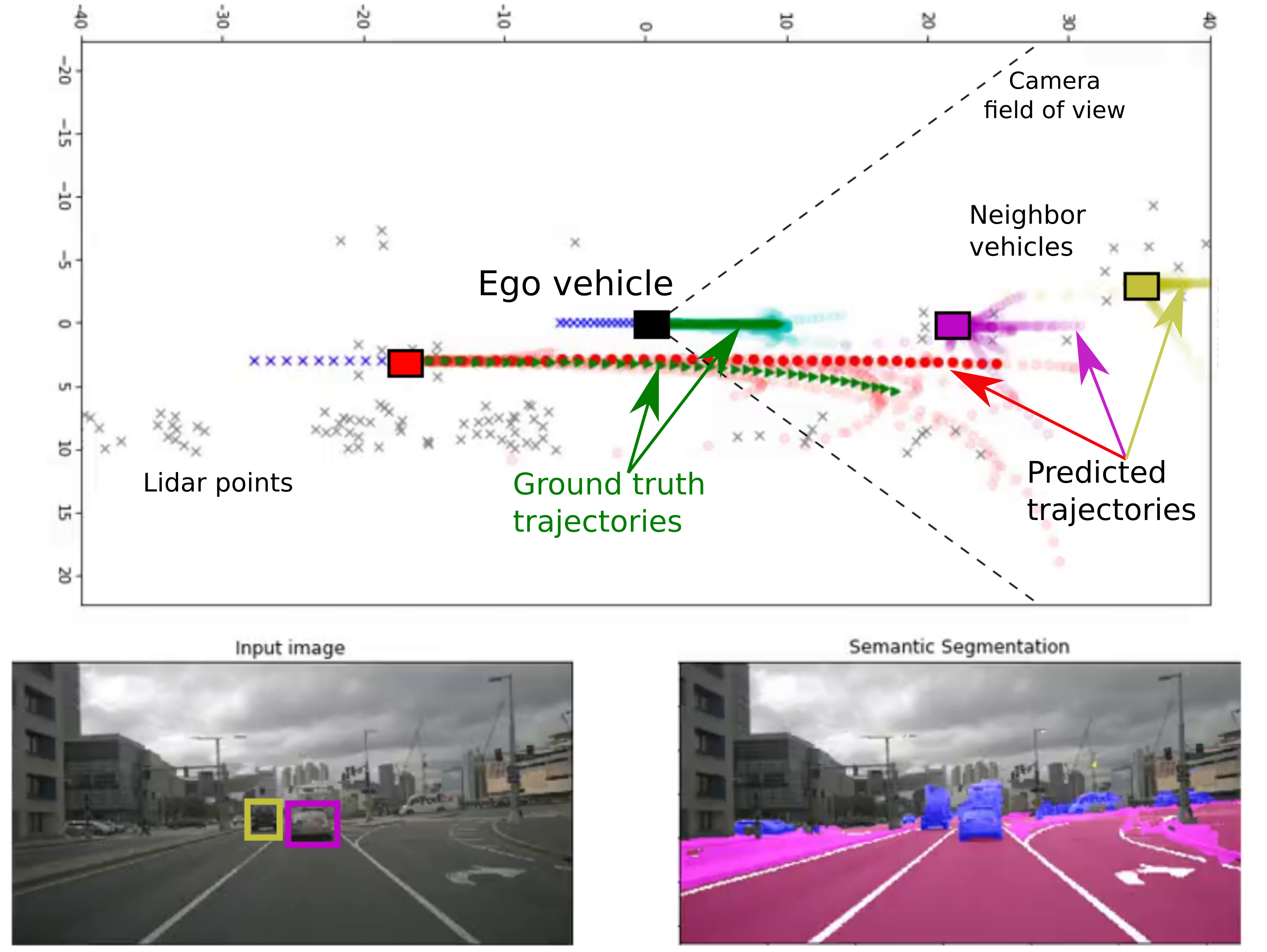

PLOP: Probabilistic poLynomial Objects trajectory Prediction for autonomous driving

Thibault Buhet, Émilie Wirbel, Andrei Bursuc and Xavier Perrotton

Conference on Robot Learning (CoRL), 2020

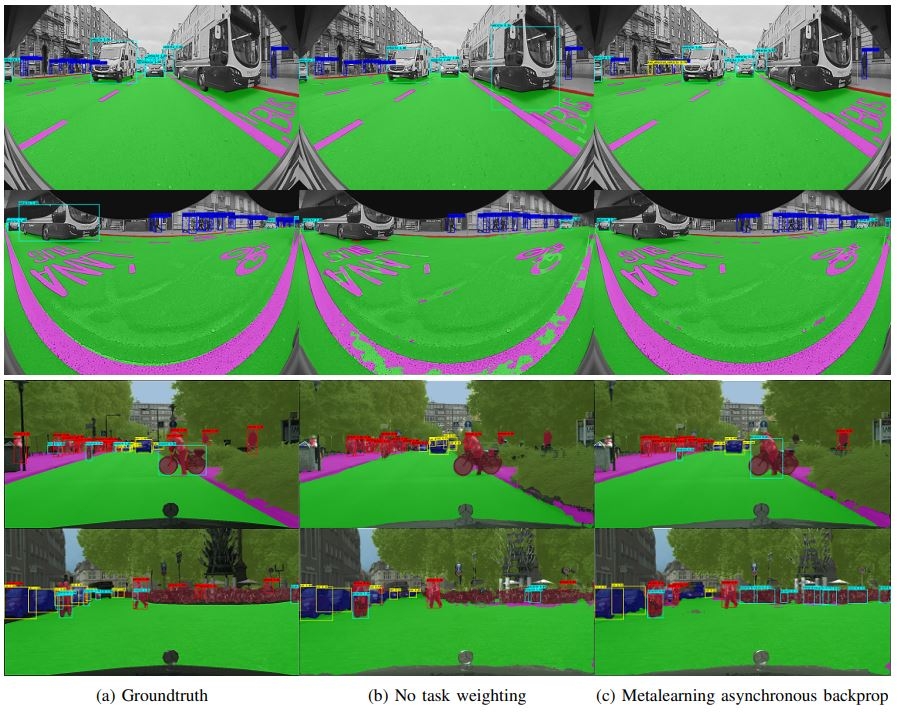

Dynamic Task Weighting Methods for Multi-task Networks in Autonomous Driving Systems

Isabelle Leang, Ganesh Sistu, Fabian Burger, Andrei Bursuc, and Senthil Yogamani

IEEE International Conference on Intelligent Transportation Systems (ITSC), 2020

xMUDA: Cross-Modal Unsupervised Domain Adaptation for 3D Semantic Segmentation

Maximilian Jaritz, Tuan-Hung Vu, Raoul de Charette, Émilie Wirbel, and Patrick Pérez

Computer Vision and Pattern Recognition (CVPR), 2020