Interpretability and Explainability of Deep Models

Explainability has several facets, and the need for it is especially strong in safety-critical applications such as autonomous driving, where deep learning models are now widely used. Because the underlying mechanisms of these models remain opaque, explainability and trustworthiness have become major concerns. Among other things, we investigate methods that explain black-box vision-based systems in a post-hoc fashion, as well as approaches that aim to build self-driving systems that are more interpretable by design.

Publications

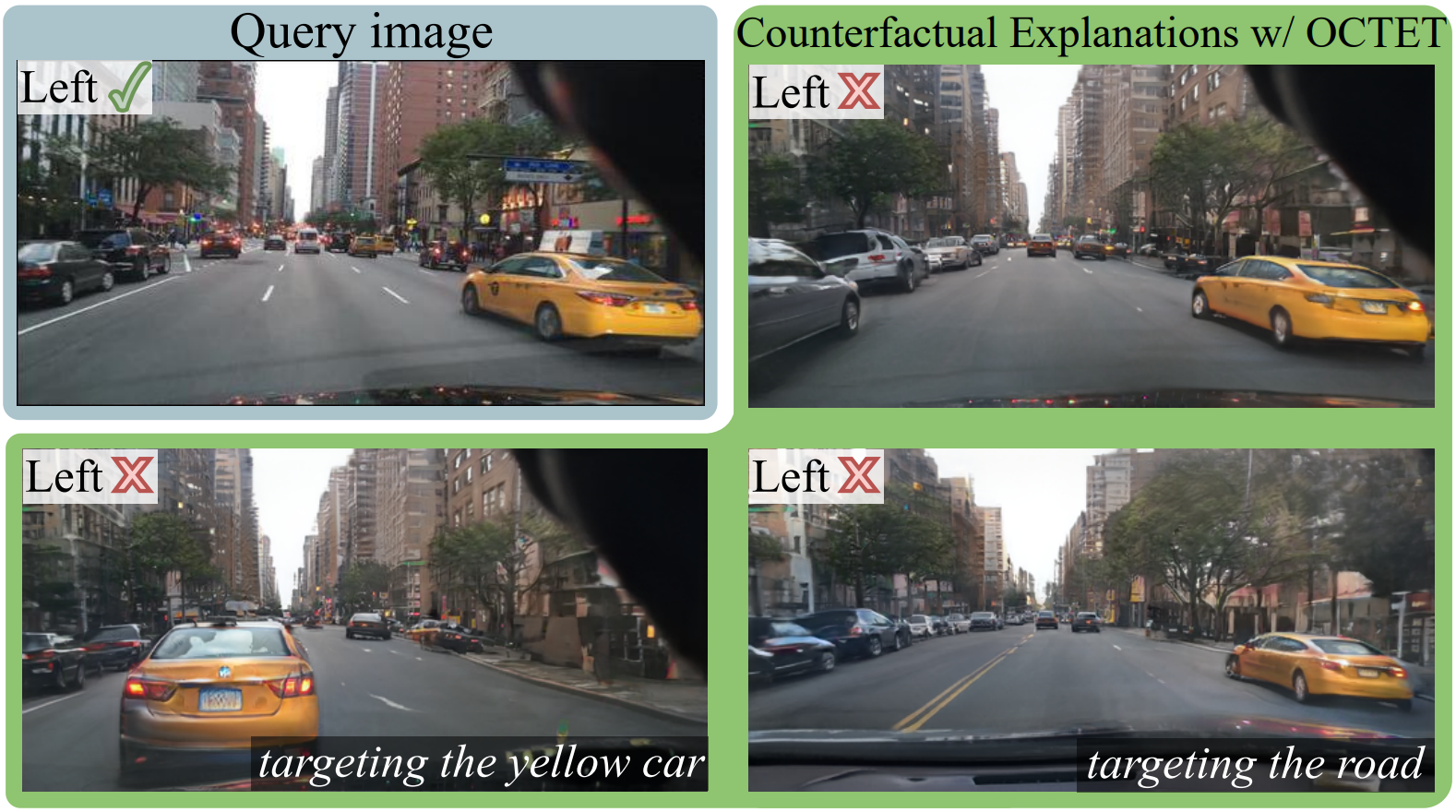

OCTET: Object-aware Counterfactual Explanations

Mehdi Zemni, Mickaël Chen, Éloi Zablocki, Hédi Ben-Younes, Patrick Pérez, Matthieu Cord

Computer Vision and Pattern Recognition (CVPR), 2023

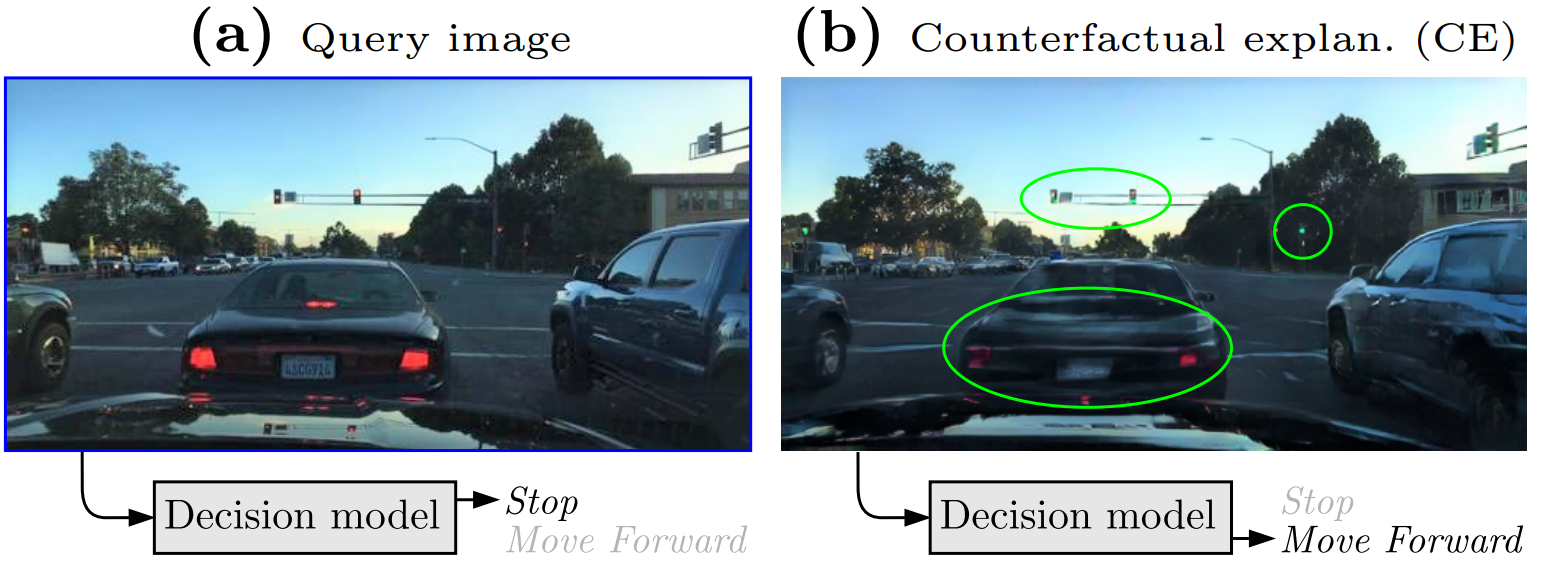

STEEX: Steering Counterfactual Explanations with Semantics

Paul Jacob, Éloi Zablocki, Hédi Ben-Younes, Mickaël Chen, Patrick Pérez, Matthieu Cord

European Conference on Computer Vision (ECCV), 2022

Explainability of deep vision-based autonomous driving systems: Review and challenges

Éloi Zablocki*, Hédi Ben-Younes*, Patrick Pérez, Matthieu Cord

International Journal of Computer Vision (IJCV), 2022

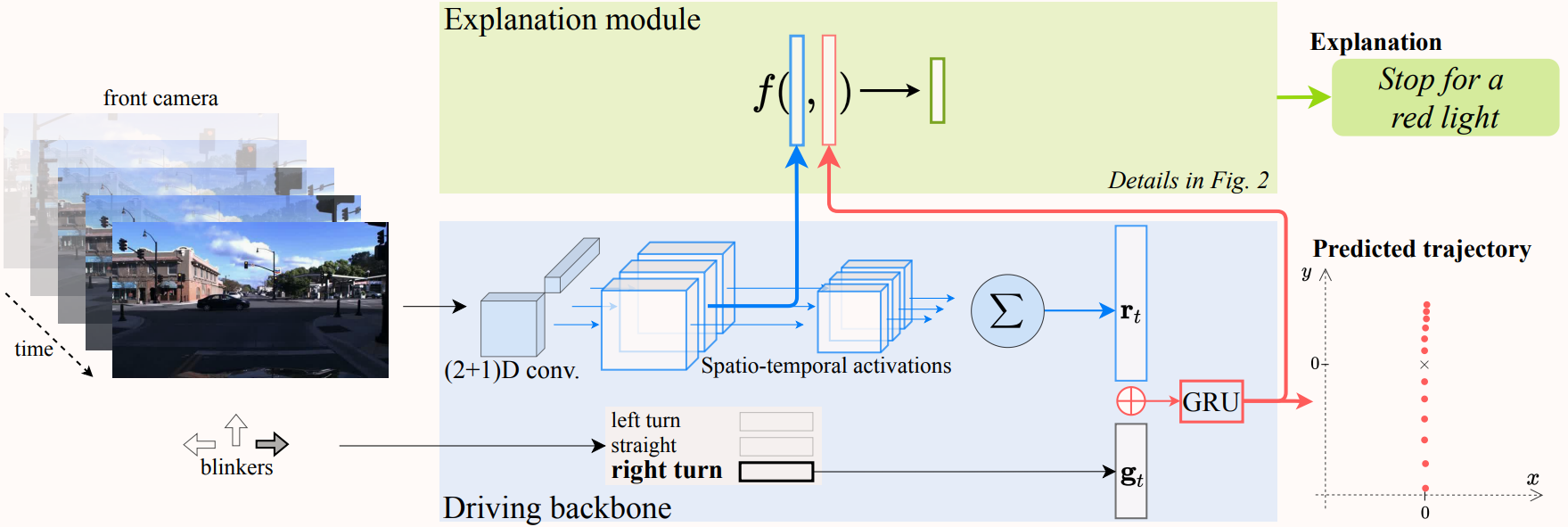

Driving behavior explanation with multi-level fusion

Hédi Ben-Younes*, Éloi Zablocki*, Patrick Pérez, Matthieu Cord

Pattern Recognition (PR), 2022