Addressing Failure Prediction by Learning Model Confidence

Charles Corbière, Nicolas Thome, Avner Bar-Hen, Matthieu Cord, Patrick Pérez

NeurIPS 2019

Abstract

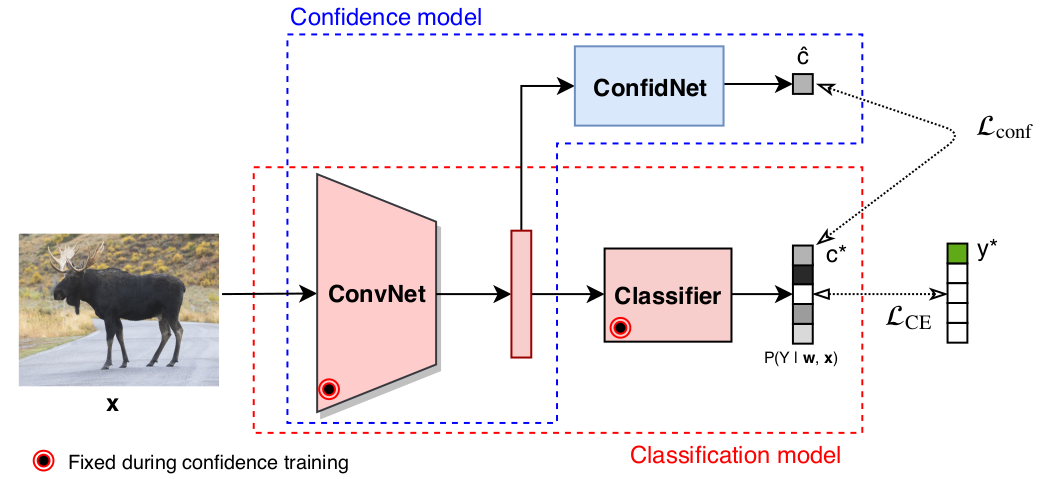

Reliably assessing the confidence of a deep neural network and predicting its failures is of primary importance for the practical deployment of these models. In this paper, we propose a new target criterion for model confidence, the True Class Probability (TCP). We show that TCP is better suited to failure prediction than the classic Maximum Class Probability (MCP), and we provide theoretical guarantees for TCP in this context. Since the true class is by nature unknown at test time, we propose to learn the TCP criterion on the training set, introducing a specific learning scheme adapted to this context. Extensive experiments validate the relevance of the proposed approach. We study various network architectures and both small- and large-scale datasets for image classification and semantic segmentation. Our approach consistently outperforms several strong methods, from MCP to Bayesian uncertainty, as well as recent approaches specifically designed for failure prediction.
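To make the two criteria concrete, here is a minimal sketch, not the authors' implementation. It assumes a softmax classifier. MCP is taken to be the largest softmax probability, and TCP the softmax probability assigned to the ground-truth class. The logits, the function names, and the three-class setup are hypothetical and chosen only for illustration.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mcp(probs):
    """Maximum Class Probability: confidence in the predicted class."""
    return max(probs)


def tcp(probs, true_class):
    """True Class Probability: probability given to the ground-truth class."""
    return probs[true_class]


# Hypothetical logits for one sample of a 3-class problem.
logits = [2.0, 1.5, 0.1]
probs = softmax(logits)
predicted = probs.index(max(probs))  # class 0 has the largest logit

# Case 1: the prediction is correct (true class == predicted class).
# TCP and MCP coincide.
tcp_correct = tcp(probs, true_class=predicted)

# Case 2: the prediction is wrong (assume the true class is 1).
# MCP is unchanged because it never looks at the label, whereas TCP
# drops to the lower probability assigned to the true class.
tcp_wrong = tcp(probs, true_class=1)

print(f"MCP = {mcp(probs):.3f}")
print(f"TCP (correct prediction) = {tcp_correct:.3f}")
print(f"TCP (wrong prediction)   = {tcp_wrong:.3f}")
```

In this sketch MCP is identical for the correct and the misclassified case, so it cannot separate them, while TCP is lower for the failure. One property that follows directly from the definition is that a TCP above 0.5 implies the true class is the argmax, so the prediction is correct. Because TCP needs the label, it cannot be computed at test time, which is why the abstract proposes learning it on the training set.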

BibTeX

@incollection{NIPS2019_8556,

title = {Addressing Failure Prediction by Learning Model Confidence},

author = {Corbi\`{e}re, Charles and Thome, Nicolas and Bar-Hen, Avner and Cord, Matthieu and P\'{e}rez, Patrick},

booktitle = {Advances in Neural Information Processing Systems 32},

pages = {2902--2913},

year = {2019},

}