Explainability of deep vision-based autonomous driving systems: Review and challenges

Éloi Zablocki, Hédi Ben-Younes, Patrick Pérez, Matthieu Cord

IJCV 2022

Abstract

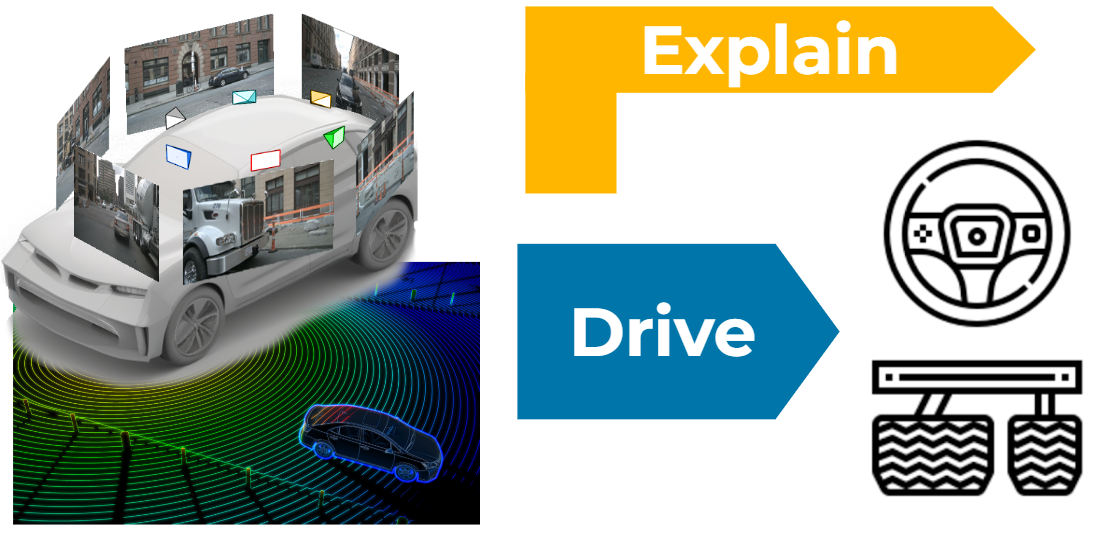

This survey reviews explainability methods for vision-based self-driving systems trained with behavior cloning. Explainability has several facets, and the need for it is strong in driving, a safety-critical application. Gathering contributions from several research fields, namely computer vision, deep learning, autonomous driving, and explainable AI (X-AI), this survey tackles several points. First, it discusses definitions, context, and motivation for gaining more interpretability and explainability from self-driving systems, as well as the challenges that are specific to this application. Second, methods that provide post-hoc explanations for a black-box self-driving system are comprehensively organized and detailed. Third, approaches from the literature that aim at building self-driving systems that are more interpretable by design are presented and discussed in detail. Finally, remaining open challenges and potential future research directions are identified and examined.

BibTeX

@article{xai-driving-survey-2022,
  author  = {Eloi Zablocki and
             Hedi Ben{-}Younes and
             Patrick P{\'{e}}rez and
             Matthieu Cord},
  title   = {Explainability of deep vision-based autonomous driving systems: Review and challenges},
  journal = {IJCV},
  year    = {2022}
}