UNIT: Unsupervised Online Instance Segmentation through Time

Corentin Sautier, Gilles Puy, Alexandre Boulch, Renaud Marlet, Vincent Lepetit

3DV 2025

Abstract

Online object segmentation and tracking in Lidar point clouds enables autonomous agents to understand their surroundings and make safe decisions. Unfortunately, manual annotations for these tasks are prohibitively costly. We tackle this problem with the task of class-agnostic unsupervised online instance segmentation and tracking. To that end, we leverage an instance segmentation backbone and propose a new training recipe that enables the online tracking of objects. Our network is trained on pseudo-labels, eliminating the need for manual annotations. We conduct an evaluation using metrics adapted for temporal instance segmentation. Computing these metrics requires temporally-consistent instance labels. When unavailable, we construct these labels using the available 3D bounding boxes and semantic labels in the dataset. We compare our method against strong baselines and demonstrate its superiority across two different outdoor Lidar datasets.
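To illustrate how temporally consistent instance labels can be built from 3D bounding boxes and semantic labels, here is a minimal sketch. It assumes each box carries a track identifier shared across scans, and that a per-point boolean mask derived from the semantic labels marks which points belong to object classes. The function names, the box dictionary layout (`center`, `size`, `yaw`, `track_id`) and the `is_thing` mask are hypothetical and are not taken from the paper's code.

```python
import numpy as np

def points_in_box(points, center, size, yaw):
    """Boolean mask of the points lying inside an oriented 3D box."""
    # Express the points in the box frame: translate, then rotate by -yaw around z.
    local = points - np.asarray(center, dtype=float)
    c, s = np.cos(-yaw), np.sin(-yaw)
    x = c * local[:, 0] - s * local[:, 1]
    y = s * local[:, 0] + c * local[:, 1]
    z = local[:, 2]
    half = np.asarray(size, dtype=float) / 2.0
    return (np.abs(x) <= half[0]) & (np.abs(y) <= half[1]) & (np.abs(z) <= half[2])

def instance_labels_from_boxes(points, is_thing, boxes):
    """Assign to each point the track ID of the box containing it (0 = no instance).

    Assumption: `is_thing` is a boolean mask computed from the semantic labels,
    and a point covered by several boxes keeps the first matching track ID.
    """
    labels = np.zeros(len(points), dtype=np.int64)
    for box in boxes:
        inside = points_in_box(points, box["center"], box["size"], box["yaw"])
        labels[inside & is_thing & (labels == 0)] = box["track_id"]
    return labels
```

Because the track ID of a box is the same in every scan, running this function scan by scan yields instance labels that stay consistent over time under the stated assumptions.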

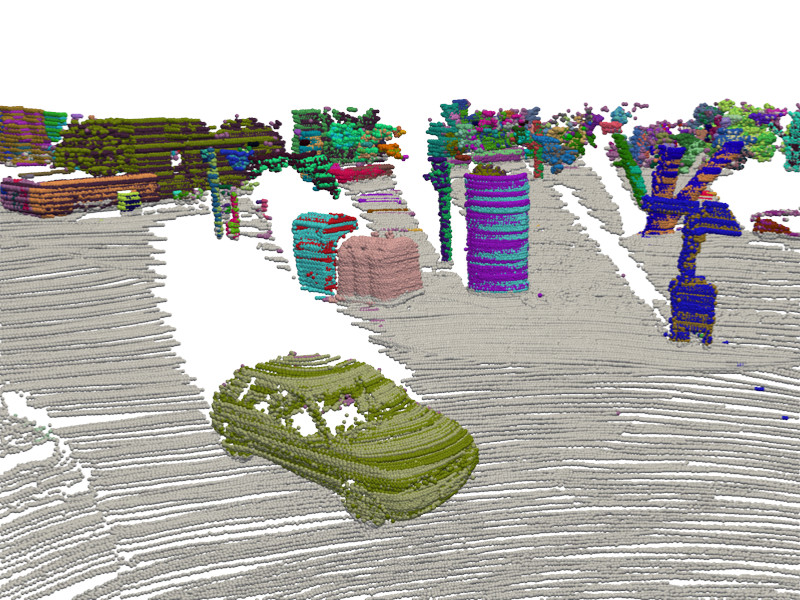

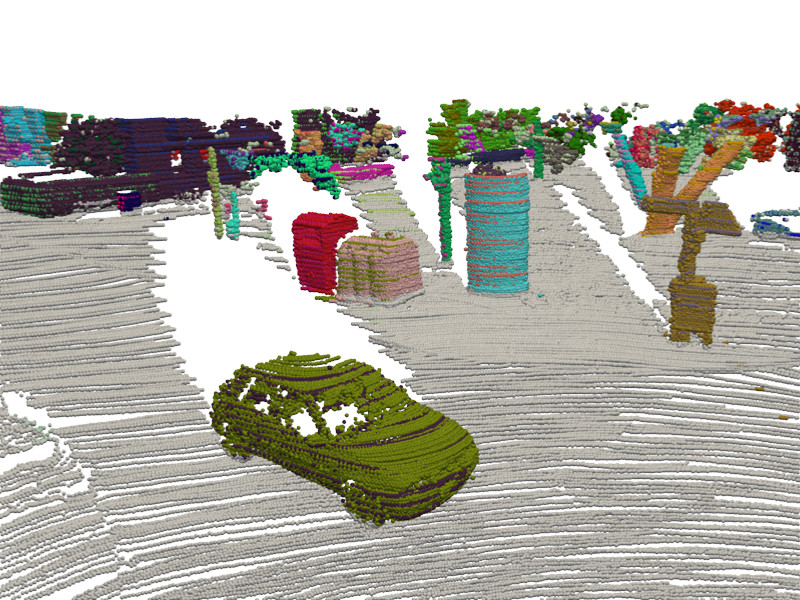

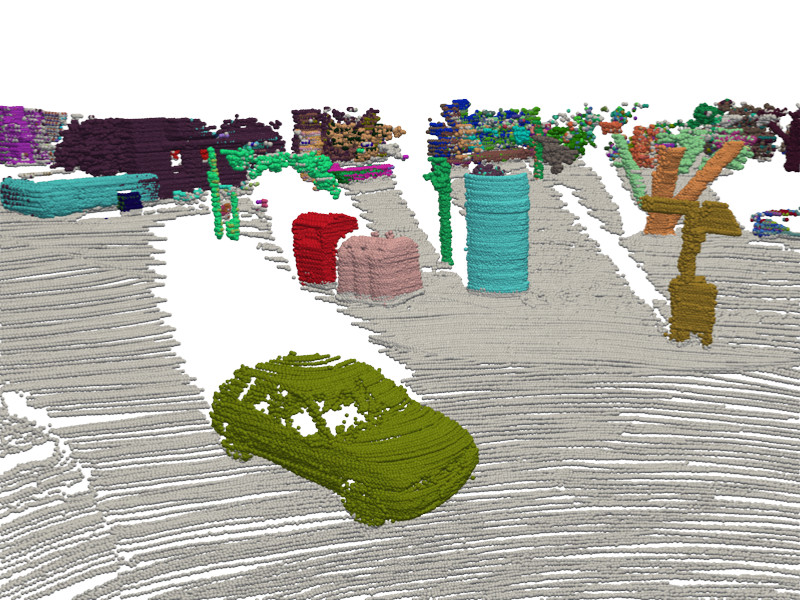

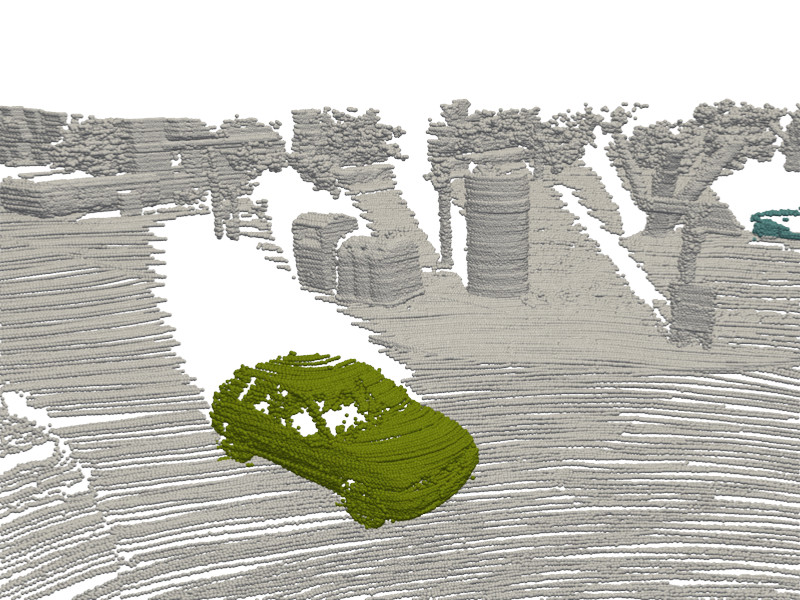

We generate pseudo-labels of instances across time in Lidar scans (4D-Seg), which we use to train an online segmenter (UNIT) that assigns to each 3D point an instance ID consistent over time. We show aggregations of scans over time. This sample scene is from SemanticKITTI, and the car in the foreground is static. The first two images show two prior baselines for this task. The third image shows our input pseudo-labels. The fourth image renders an aggregation of UNIT predictions on successive scans (our input is not an aggregated scan). Note that we obtain more labels than in the ground truth because our class-agnostic segmentation includes all objects and stuff, such as trees or buildings.
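The aggregated views can be reproduced in spirit by moving each scan into a common world frame and concatenating the points with their predicted instance IDs. The sketch below assumes that each scan comes with a 4x4 sensor-to-world pose matrix; the function name and argument layout are hypothetical.

```python
import numpy as np

def aggregate_scans(scans, poses, instance_ids):
    """Concatenate per-scan points in a shared world frame, with their instance IDs.

    scans:        list of (N_i, 3) arrays of points in the sensor frame
    poses:        list of (4, 4) sensor-to-world transforms (assumed available)
    instance_ids: list of (N_i,) arrays of per-point instance IDs
    """
    world_points, world_ids = [], []
    for points, pose, ids in zip(scans, poses, instance_ids):
        rotation, translation = pose[:3, :3], pose[:3, 3]
        # Apply the rigid transform p_world = R @ p_sensor + t to every point.
        world_points.append(points @ rotation.T + translation)
        world_ids.append(ids)
    return np.concatenate(world_points), np.concatenate(world_ids)
```

When the instance IDs are consistent over time, coloring the aggregated points by ID shows each object with a single color across all scans.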

| SemanticKITTI | Online | Unfiltered $$\mathrm{S_{assoc}^{temp}}$$ | Unfiltered $$\mathrm{IoU^*}$$ | Unfiltered $$\mathrm{S_{assoc}}$$ | Filtered $$\mathrm{S_{assoc}^{temp}}$$ | Filtered $$\mathrm{S_{assoc}}$$ |
|---|---|---|---|---|---|---|
| 3DUIS w/o time [2] | ✔ | - | - | 0.550 | - | 0.768 |
| UNIT w/o time | ✔ | - | - | 0.715 | - | 0.811 |
| 3DUIS++ | ✔ | 0.116 | 0.214 | 0.550 | 0.148 | 0.769 |
| TARL-Seg [1] | ✘ | 0.231 | 0.353 | 0.668 | 0.264 | 0.735 |
| TARL-Seg++ | ✔ | 0.317 | 0.446 | 0.617 | 0.370 | 0.678 |
| 4D-Seg | ✘ | 0.421 | 0.529 | 0.667 | 0.486 | 0.784 |
| 4D-Seg++ | ✔ | 0.447 | 0.513 | 0.647 | 0.512 | 0.762 |
| UNIT | ✔ | 0.482 | 0.568 | 0.696 | 0.563 | 0.790 |
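For readers unfamiliar with association scores, the following sketch shows one common formulation, the association term of the LSTQ metric used in 4D panoptic segmentation. That the scores reported here follow this exact definition is an assumption: this page does not spell out the formula, nor how the filtered and unfiltered variants or the IoU* score are computed. In this sketch, each ground-truth instance accumulates, over every overlapping predicted instance, the number of shared points weighted by their IoU, normalized by the ground-truth instance size.

```python
import numpy as np

def association_score(gt_ids, pred_ids, ignore_id=0):
    """LSTQ-style association score for class-agnostic instance labels.

    Assumptions: points whose ground-truth ID equals `ignore_id` are dropped
    entirely, and the inputs hold one ID per point. Passing the IDs of a single
    scan gives a per-scan score; passing the concatenated IDs of a whole
    sequence gives a temporal score.
    """
    gt_ids, pred_ids = np.asarray(gt_ids), np.asarray(pred_ids)
    valid = gt_ids != ignore_id
    gt_ids, pred_ids = gt_ids[valid], pred_ids[valid]
    scores = []
    for t in np.unique(gt_ids):
        in_t = gt_ids == t
        total = 0.0
        for s in np.unique(pred_ids[in_t]):
            in_s = pred_ids == s
            shared = np.count_nonzero(in_t & in_s)  # true positive associations
            union = np.count_nonzero(in_t | in_s)
            total += shared * (shared / union)      # TPA weighted by IoU
        scores.append(total / np.count_nonzero(in_t))
    return float(np.mean(scores)) if scores else 0.0
```

For instance, a prediction that splits one ground-truth object of four points into two halves scores 0.5 under this definition, while a perfect match scores 1.0 regardless of the actual ID values.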

BibTeX

@inproceedings{sautier2025unit,

title = {{UNIT}: Unsupervised Online Instance Segmentation through Time},

author = {Corentin Sautier and Gilles Puy and Alexandre Boulch and Renaud Marlet and Vincent Lepetit},

booktitle= {3DV},

year = {2025}

}

Sources

[1] Nunes et al. “Temporal consistent 3D lidar representation learning for semantic perception in autonomous driving.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023.

[2] Nunes et al. “Unsupervised Class-Agnostic Instance Segmentation of 3D LiDAR Data for Autonomous Vehicles.” IEEE Robotics and Automation Letters (RA-L). 2022.