TRADI: Tracking deep neural network weight distributions for uncertainty estimation

Gianni Franchi, Andrei Bursuc, Emanuel Aldea, Séverine Dubuisson, Isabelle Bloch

ECCV 2020

Abstract

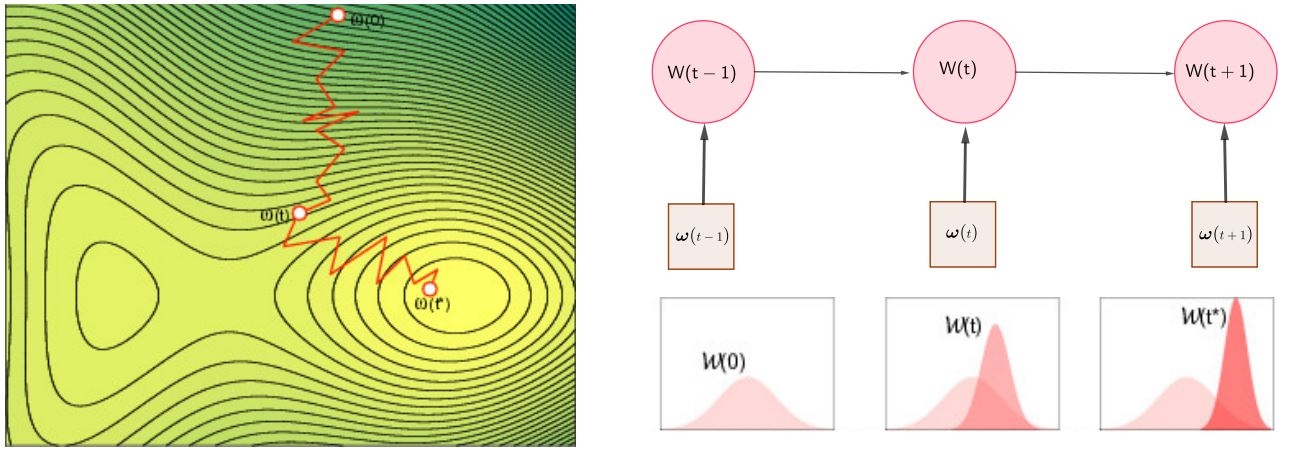

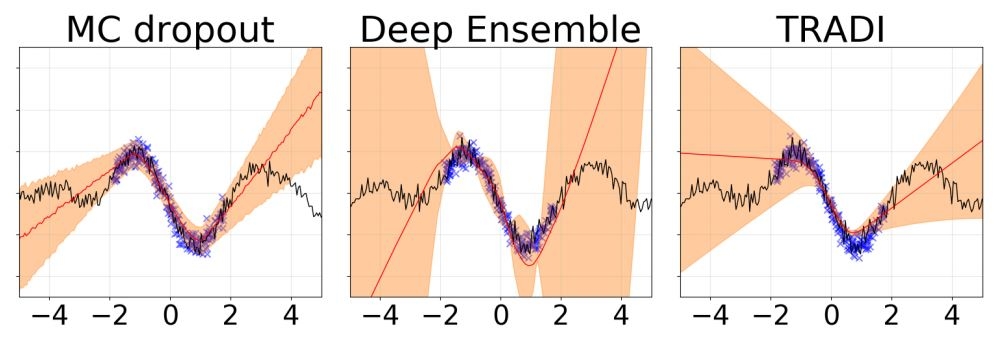

During training, the weights of a Deep Neural Network (DNN) are optimized from a random initialization towards a near-optimal value that minimizes a loss function. Typically only this final state of the weights is kept for testing, while the wealth of information on the geometry of the weight space, accumulated over the descent towards the minimum, is discarded. In this work we propose to use this information to compute the distributions of the weights of the DNN. These distributions can then be used to estimate the epistemic uncertainty of the DNN by sampling an ensemble of networks from them. To this end, we introduce a method for tracking the trajectory of the weights during optimization that requires no changes to the architecture or to the training procedure. We evaluate our method on standard classification and regression benchmarks, and on out-of-distribution detection for classification and semantic segmentation. We achieve competitive results while preserving computational efficiency in comparison to other popular approaches.
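The idea in the abstract (track weight statistics along the optimization trajectory, then sample an ensemble from the resulting distributions) can be illustrated with a toy sketch. This is not the authors' implementation: it assumes a linear model trained by plain gradient descent, uses Welford's running mean and variance as a stand-in for the paper's tracking scheme (which this page does not describe), and assumes independent Gaussian weights. All function names here are hypothetical.

```python
import numpy as np

def train_and_track(X, y, lr=0.05, epochs=200, seed=0):
    """Fit a linear model by gradient descent while tracking the per-weight
    running mean and variance along the trajectory (Welford's algorithm).
    Assumption: this simple statistic replaces the paper's tracking scheme."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=X.shape[1])        # random initialization
    mean = np.zeros_like(w)                # running mean of each weight
    m2 = np.zeros_like(w)                  # running sum of squared deviations
    for step in range(1, epochs + 1):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)   # gradient of the MSE loss
        w = w - lr * grad                  # training step itself is unchanged
        delta = w - mean                   # Welford update of the statistics
        mean += delta / step
        m2 += delta * (w - mean)
    var = m2 / (epochs - 1)                # unbiased per-weight variance
    return w, mean, var

def sample_ensemble(mean, var, n_models=20, seed=1):
    """Draw weight vectors from independent Gaussians N(mean, var)."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(size=(n_models, mean.shape[0]))
    return mean + np.sqrt(var) * noise

def predict_with_uncertainty(X, ensemble):
    """Ensemble mean prediction and its spread across sampled networks."""
    preds = X @ ensemble.T                 # shape: (n_points, n_models)
    return preds.mean(axis=1), preds.std(axis=1)

# Toy usage on synthetic, noise-free regression data.
data_rng = np.random.default_rng(42)
X = data_rng.normal(size=(100, 3))
true_w = np.array([1.0, -2.0, 0.5])
y = X @ true_w

w, mean, var = train_and_track(X, y)
ensemble = sample_ensemble(mean, var)
mu, sigma = predict_with_uncertainty(X, ensemble)
```

Here `sigma` plays the role of a per-input epistemic uncertainty estimate. Because the statistics cover the whole trajectory, including the early transient far from the minimum, the variances in this sketch are much larger than a practical method would want.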

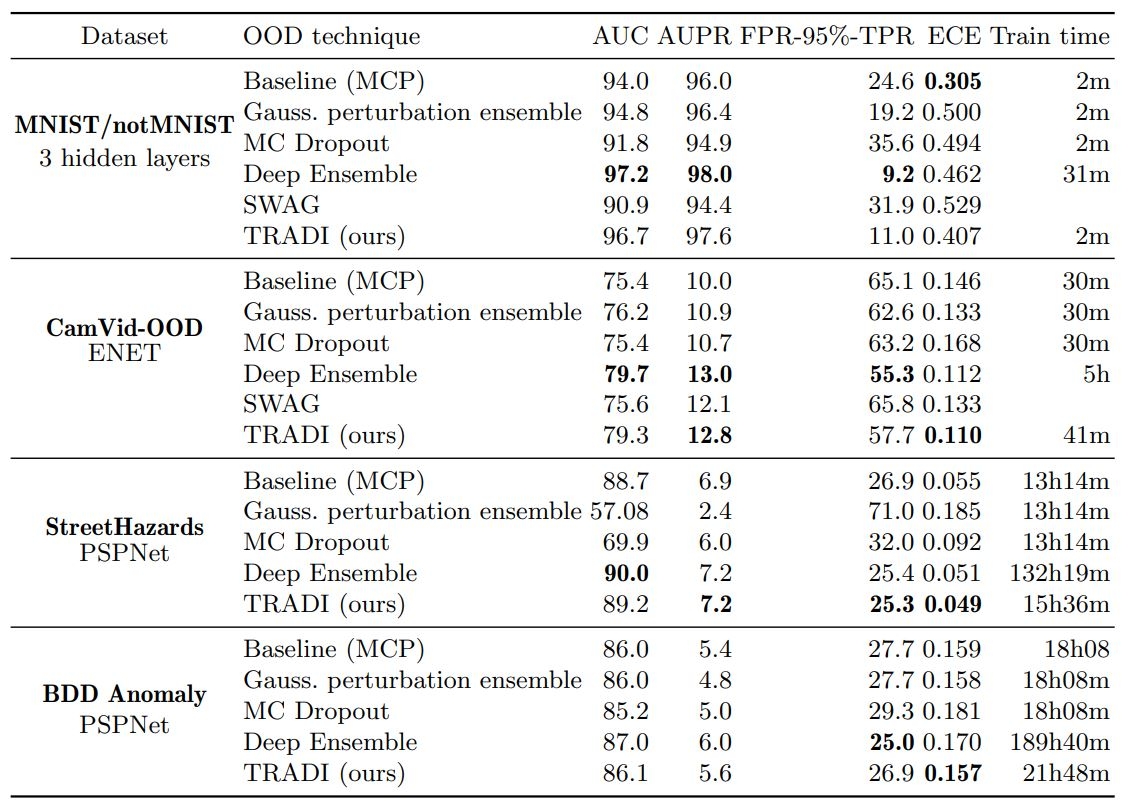

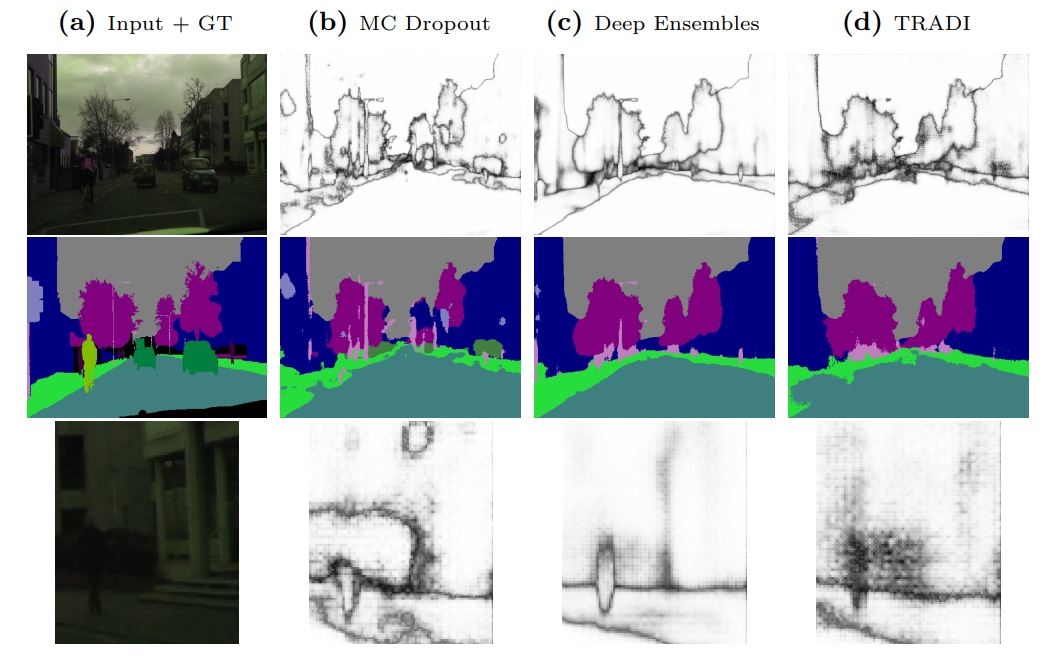

Results

BibTeX

@article{franchi2019tradi,
  title={TRADI: Tracking deep neural network weight distributions for uncertainty estimation},
  author={Franchi, Gianni and Bursuc, Andrei and Aldea, Emanuel and Dubuisson, S{\'e}verine and Bloch, Isabelle},
  journal={arXiv preprint arXiv:1912.11316},
  year={2019}
}