Learning to Generate Training Datasets for Robust Semantic Segmentation

Marwane Hariat, Olivier Laurent, Rémi Kazmierczak, Shihao Zhang,

Andrei Bursuc, Angela Yao, Gianni Franchi

WACV 2024

Abstract

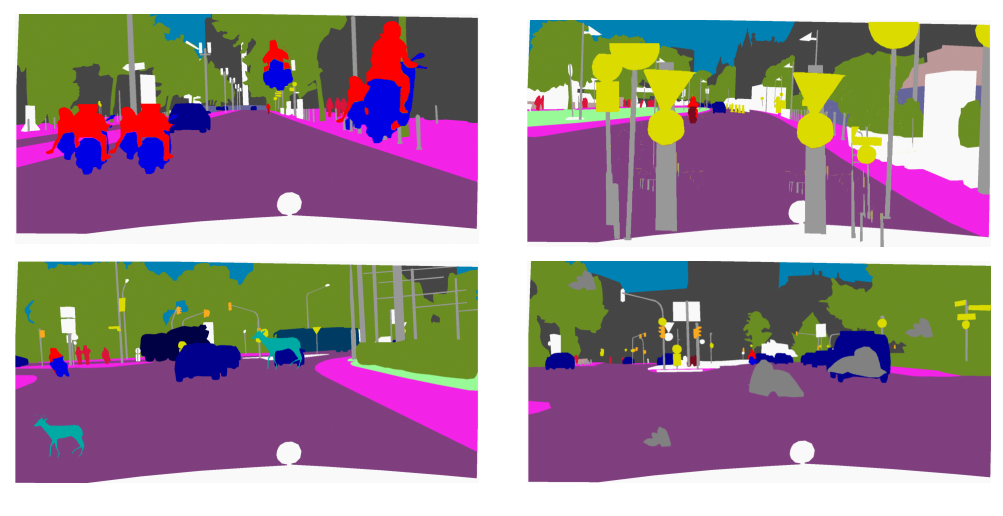

Semantic segmentation methods have advanced significantly. Still, their robustness to real-world perturbations and to object types not seen during training remains a challenge, particularly in safety-critical applications. We propose a novel approach to improve the robustness of semantic segmentation techniques by leveraging the synergy between label-to-image generators and image-to-label segmentation models. Specifically, we design Robusta, a novel robust conditional generative adversarial network that generates realistic and plausible perturbed images, which can be used to train reliable segmentation models. We conduct in-depth studies of the proposed generative model, assess the performance and robustness of the downstream segmentation network, and demonstrate that our approach can significantly enhance robustness in the face of real-world perturbations, distribution shifts, and out-of-distribution samples. Our results suggest that this approach could be valuable in safety-critical applications, where the reliability of perception modules such as semantic segmentation is of utmost importance and the computational budget at inference is limited.

BibTeX

@inproceedings{hariat2024learning,
  title={Learning to generate training datasets for robust semantic segmentation},
  author={Hariat, Marwane and Laurent, Olivier and Kazmierczak, R{\'e}mi and Zhang, Shihao and Bursuc, Andrei and Yao, Angela and Franchi, Gianni},
  booktitle={WACV},
  year={2024}
}