Multi-Modal 3D GAN for Urban Scenes

Loïck Chambon, Mickaël Chen, Tuan-Hung Vu, Alexandre Boulch, Andrei Bursuc, Matthieu Cord, Patrick Pérez

NeurIPS ML4AD Workshop 2022

Abstract

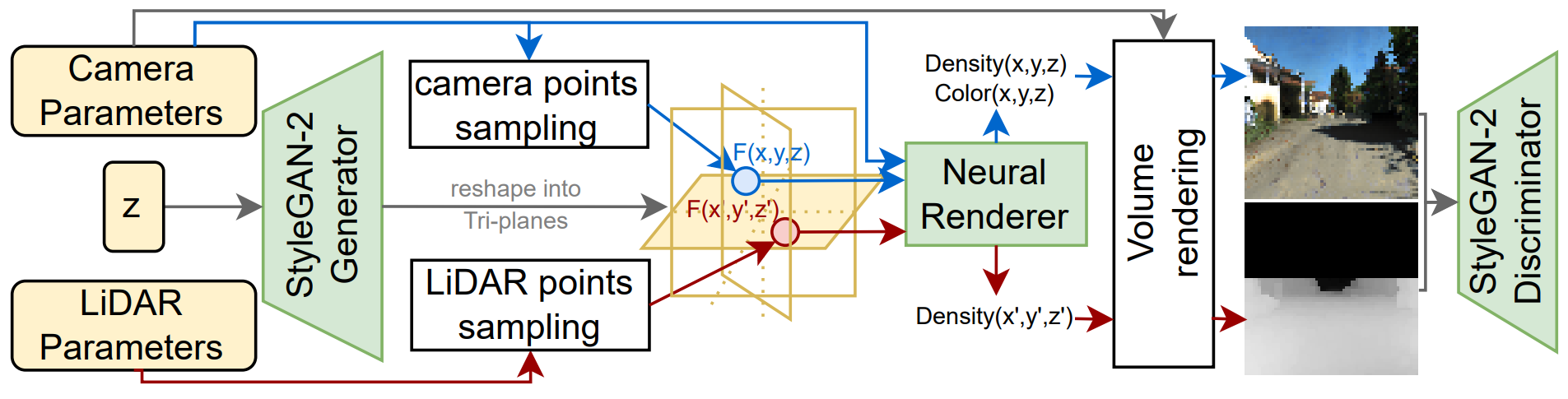

Recently, a number of works have explored training 3D-aware Generative Adversarial Networks (GANs) that include a neural rendering layer in the generative pipeline. In doing so, they succeed in building models that can infer impressive 3D information while being trained solely on 2D images. However, these models have mostly been applied to images centered on an object. Transitioning to driving scenes remains a challenge: not only are the scenes open and more complex, but one also usually lacks access to as many diverse viewpoints, since typically only the front camera view is available. In this work, we investigate how amenable 3D GANs are to such a setup, and propose a method that leverages information from LiDAR sensors to alleviate the issues we identify.

BibTeX

@article{chambon2022multimodal_3d_gan,
  title={Multi-Modal 3D GAN for Urban Scenes},
  author={Loick Chambon and Mickael Chen and Tuan-Hung Vu and Alexandre Boulch and Andrei Bursuc and Matthieu Cord and Patrick Perez},
  year={2022},
  journal={NeurIPS ML4AD Workshop}
}