GEPS: Boosting Generalization in Parametric PDE Neural Solvers through Adaptive Conditioning

Armand Kassaï Koupaï, Jorge Mifsut-Benet, Yuan Yin, Jean-Noël Vittaut, Patrick Gallinari

NeurIPS 2024

Abstract

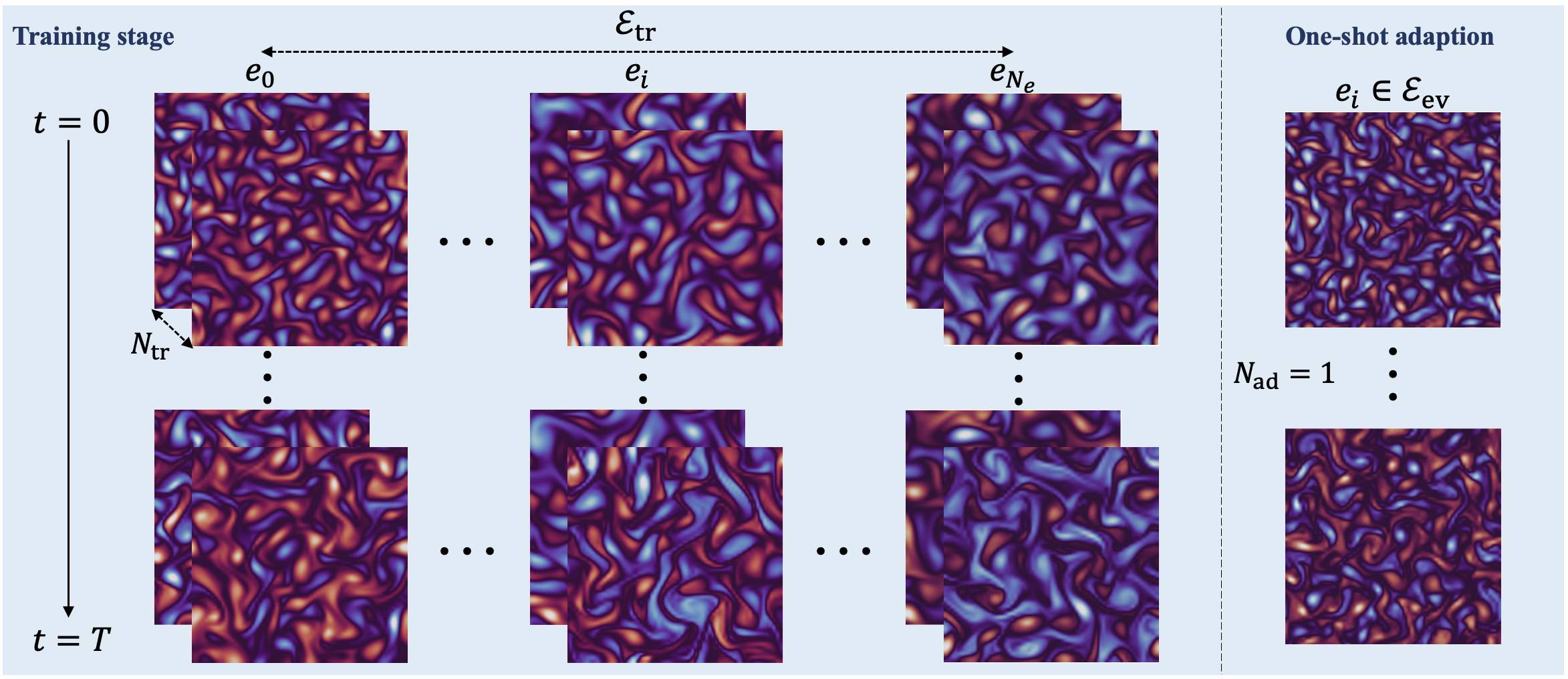

Solving parametric partial differential equations (PDEs) presents significant challenges for data-driven methods because spatio-temporal dynamics are sensitive to variations in PDE parameters. Machine learning approaches often struggle to capture this variability. To address this, current data-driven approaches learn parametric PDEs by sampling a very large variety of trajectories with varying PDE parameters. We first show that incorporating conditioning mechanisms is essential for learning parametric PDEs and that, among these mechanisms, adaptive conditioning allows stronger generalization. Because existing adaptive conditioning methods do not scale well with the number of PDE parameters, we propose GEPS, a simple adaptation mechanism that boosts GEneralization in Pde Solvers through first-order optimization and low-rank rapid adaptation of a small set of context parameters. We demonstrate the versatility of our approach for both fully data-driven and physics-aware neural solvers. Validation on a wide range of spatio-temporal forecasting problems demonstrates excellent performance when generalizing to unseen conditions, including initial conditions, PDE coefficients, forcing terms and solution domains.
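To make "low-rank rapid adaptation of a small set of context parameters" concrete, here is a minimal sketch for a single linear layer. It assumes a LoRA-style parameterization W_e = W + A diag(c_e) B, where W, A and B are shared across environments and only the short context vector c_e is fitted for a new environment with plain first-order gradient descent. This parameterization, the function names, the learning rate and the step count are illustrative assumptions, not the paper's implementation; the code uses only the Python standard library.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Dense matrix-vector product."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def adapted_forward(W: Matrix, A: Matrix, B: Matrix, c: Vector, x: Vector) -> Vector:
    """Compute (W + A diag(c) B) x without materializing the adapted matrix.

    Assumed shapes: W is d_out x d_in (shared), A is d_out x r, B is r x d_in,
    and c is the per-environment context vector of length r.
    """
    base = matvec(W, x)
    bx = matvec(B, x)  # length r: projections B[k] . x
    return [
        base[i] + sum(A[i][k] * c[k] * bx[k] for k in range(len(c)))
        for i in range(len(base))
    ]


def mse(W: Matrix, A: Matrix, B: Matrix, c: Vector,
        data: List[Tuple[Vector, Vector]]) -> float:
    """Mean (over samples) squared error of the adapted layer for context c."""
    total = 0.0
    for x, y in data:
        pred = adapted_forward(W, A, B, c, x)
        total += sum((p - t) ** 2 for p, t in zip(pred, y))
    return total / len(data)


def adapt_context(W: Matrix, A: Matrix, B: Matrix,
                  data: List[Tuple[Vector, Vector]],
                  lr: float = 0.1, steps: int = 300) -> Vector:
    """Fit only the context vector c by plain gradient descent.

    W, A and B stay frozen. Since d(W_e x)/dc_k = A[:, k] * (B[k] . x),
    the gradient of the MSE w.r.t. c_k is
    (2/n) * sum_i (residual_i . A[:, k]) * (B[k] . x_i).
    """
    r = len(B)
    c = [0.0] * r  # start from a neutral context (no adaptation)
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * r
        for x, y in data:
            pred = adapted_forward(W, A, B, c, x)
            res = [p - t for p, t in zip(pred, y)]
            bx = matvec(B, x)
            for k in range(r):
                a_dot_res = sum(A[i][k] * res[i] for i in range(len(res)))
                grad[k] += 2.0 * a_dot_res * bx[k] / n
        c = [ck - lr * gk for ck, gk in zip(c, grad)]
    return c


if __name__ == "__main__":
    # Hypothetical shared weights and rank-2 factors for a 3 -> 3 layer.
    W = [[1.0, 0.2, 0.0], [0.0, 1.0, 0.3], [0.1, 0.0, 1.0]]
    A = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
    B = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    c_true = [0.5, -1.5]  # context of an "unseen" environment
    xs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
    data = [(x, adapted_forward(W, A, B, c_true, x)) for x in xs]
    c_hat = adapt_context(W, A, B, data)
    print(c_hat)  # close to c_true = [0.5, -1.5]
```

The design point the sketch illustrates is cost: adapting to a new environment only updates r numbers (here r = 2) instead of the full d_out x d_in weight matrix, which is why this kind of context-based adaptation can stay cheap as the number of environments grows.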

BibTeX

@inproceedings{kassai2024geps,
  title={GEPS: Boosting Generalization in Parametric PDE Neural Solvers through Adaptive Conditioning},
  author={Kassaï Koupaï, Armand and Mifsut Benet, Jorge and Yin, Yuan and Vittaut, Jean-Noël and Gallinari, Patrick},
  booktitle={38th Conference on Neural Information Processing Systems (NeurIPS 2024)},
  year={2024}
}