Unsupervised Object Localization: Observing the Background to Discover Objects

Oriane Siméoni Chloé Sekkat Gilles Puy Antonin Vobecky Éloi Zablocki Patrick Pérez

CVPR 2023

Abstract

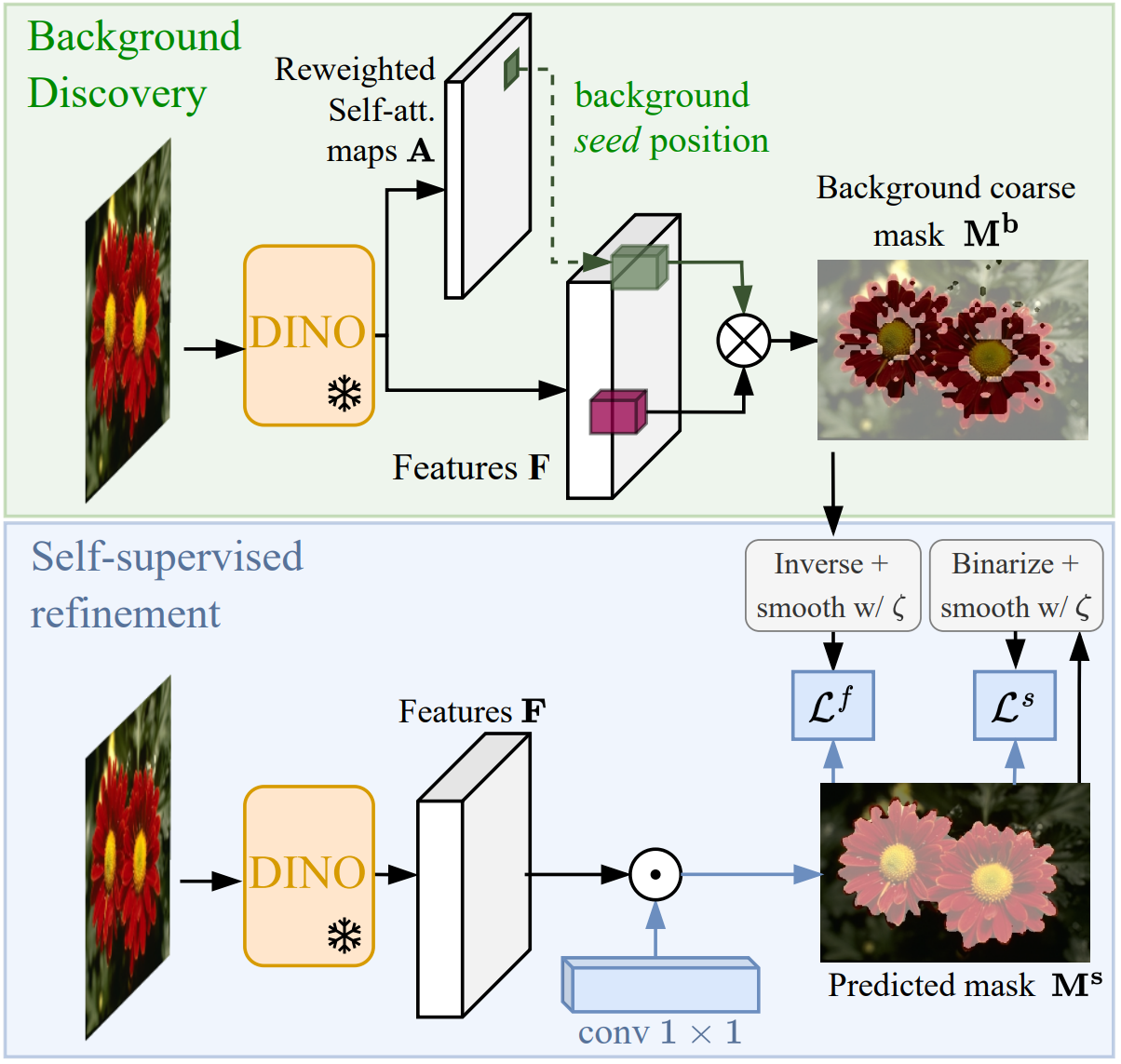

Recent advances in self-supervised visual representation learning have paved the way for unsupervised methods tackling tasks such as object discovery and instance segmentation. However, discovering objects in an image with no supervision is a very hard task; what are the desired objects, when to separate them into parts, how many are there, and of what classes? The answers to these questions depend on the tasks and datasets of evaluation. In this work, we take a different approach and propose to look for the background instead. This way, the salient objects emerge as a by-product without any strong assumption on what an object should be. We propose FOUND, a simple model made of a single conv1×1 initialized with coarse background masks extracted from self-supervised patch-based representations. After fast training and refining these seed masks, the model reaches state-of-the-art results on unsupervised saliency detection and object discovery benchmarks. Moreover, we show that our approach yields good results in the unsupervised semantic segmentation retrieval task.

Video

BibTeX

@inproceedings{simeoni2023found,

title = {Unsupervised Object Localization: Observing the Background to Discover Objects},

author = {Oriane Sim\'eoni and

Chlo\'e Sekkat and

Gilles Puy and

Antonin Vobecky and

{\'{E}}loi Zablocki and

Patrick P\'erez},

booktitle = {CVPR},

year = {2023}

}