Halton Scheduler For Masked Generative Image Transformer

Victor Besnier Mickael Chen David Hurych Eduardo Valle Matthieu Cord

ICLR 2025 (poster)

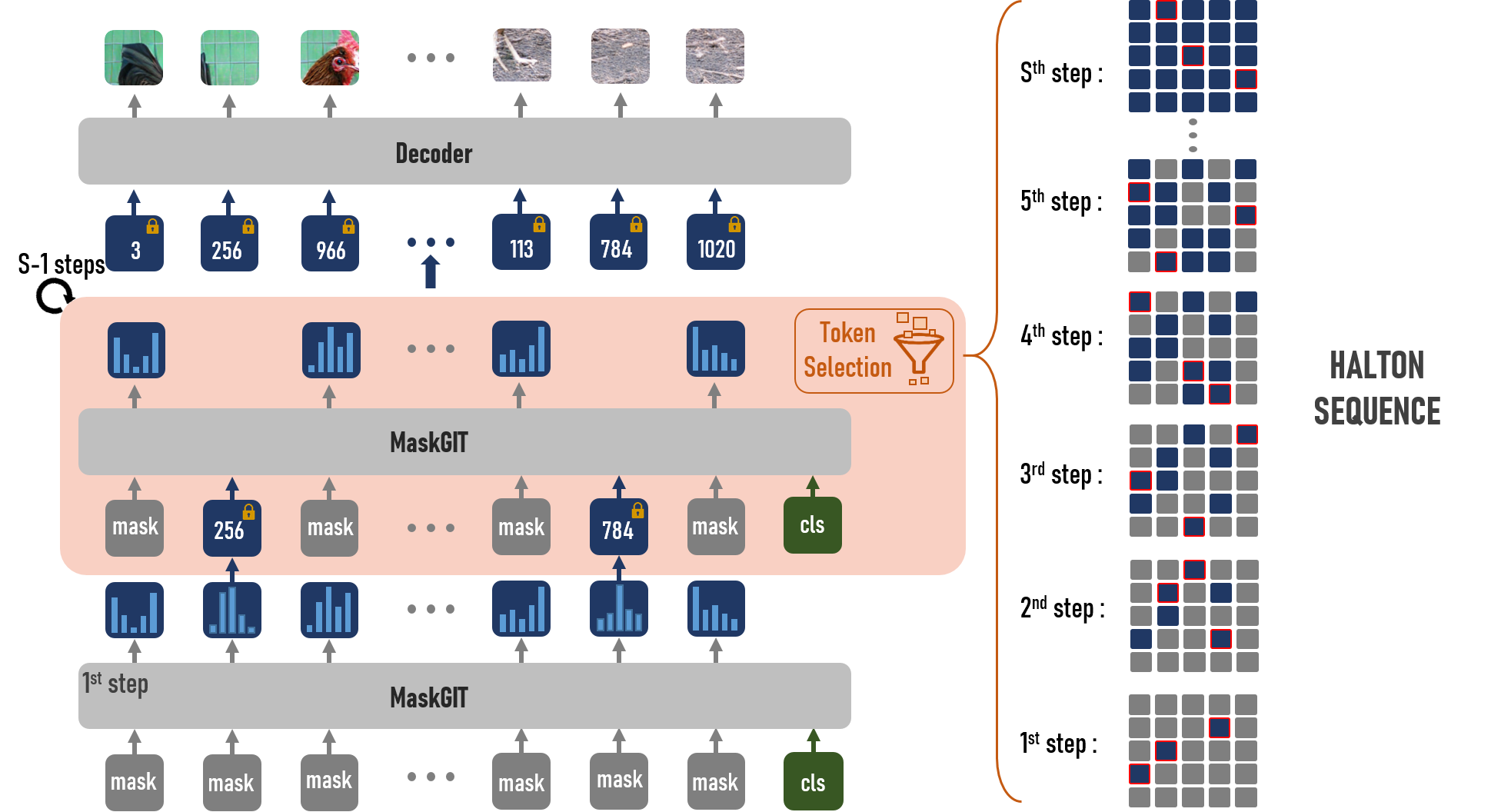

Masked Generative Image Transformers (MaskGIT) have gained popularity for their fast and efficient image generation. However, the sampling strategy used to progressively "unmask" tokens in these models plays a crucial role in determining image quality and diversity. Our new research paper introduces the Halton Scheduler, a novel approach that significantly improves MaskGIT's image generation performance.

From Confidence to Halton: What’s New?

Traditional MaskGIT uses a Confidence scheduler, which unmasks the tokens that the model predicts with the highest confidence according to its logit distribution. These tokens tend to cluster spatially, which reduces image diversity. The Halton Scheduler addresses this by leveraging a low-discrepancy sequence, the Halton sequence, to distribute token selection more uniformly across the image.
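A minimal Python sketch of the idea follows. It assumes the token grid is ordered by quantizing 2D Halton points (coprime bases 2 and 3) to grid cells and skipping cells that were already visited. The helpers `radical_inverse`, `halton_2d` and `halton_token_order` are hypothetical names, and this is not necessarily the authors' implementation. It only illustrates how a low-discrepancy sequence spreads consecutive picks over the whole image instead of clustering them.

```python
from typing import List, Tuple


def radical_inverse(i: int, base: int) -> float:
    """Van der Corput radical inverse: reflect the base-`base` digits of i about the radix point."""
    f, r = 1.0, 0.0
    while i > 0:
        f /= base
        r += f * (i % base)
        i //= base
    return r


def halton_2d(i: int) -> Tuple[float, float]:
    """i-th point of the 2D Halton sequence with coprime bases 2 and 3, in [0, 1)^2."""
    return radical_inverse(i, 2), radical_inverse(i, 3)


def halton_token_order(height: int, width: int) -> List[Tuple[int, int]]:
    """Return every (row, col) cell of a height x width token grid, in Halton order.

    Halton points are quantized to grid cells; cells already visited are skipped,
    so the result is a permutation of all cells.
    """
    order: List[Tuple[int, int]] = []
    seen = set()
    i = 0
    while len(order) < height * width:
        x, y = halton_2d(i)
        cell = (int(y * height), int(x * width))  # (row, col)
        if cell not in seen:
            seen.add(cell)
            order.append(cell)
        i += 1
    return order


if __name__ == "__main__":
    # Hypothetical 16x16 token grid (e.g. a 256x256 image with 16x downsampling).
    order = halton_token_order(16, 16)
    print(order[:4])  # [(0, 0), (5, 8), (10, 4), (1, 12)]
```

Consecutive entries land far apart on the grid, so any prefix of the order covers the image roughly uniformly. Because the order does not depend on the model's logits, it can be precomputed once per grid size.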

Figure 1: MaskGIT using our Halton scheduler on ImageNet 256.

Key Insights and Benefits

- Improved Image Quality and Diversity: The Halton scheduler reduces clustering of sampled tokens, enhancing image sharpness and background richness.

- No Retraining Required: This scheduler can be integrated as a drop-in replacement for the existing MaskGIT sampling strategy.

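- Faster and More Balanced Sampling: By spreading the tokens unmasked at each step across the image, the Halton Scheduler reduces the correlation between tokens sampled in the same step, allowing MaskGIT to progressively add fine details while avoiding local sampling errors.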
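To illustrate the drop-in claim, the sketch below (Python, standard library only) runs a simplified MaskGIT-style decoding loop in which the scheduler is just a function that chooses which masked positions to reveal. Everything here is hypothetical: `toy_model` stands in for the transformer with random predictions, the cosine reveal schedule is an assumption, and `confidence_scheduler` is a greedy simplification with no randomness in the selection. Swapping it for the Halton scheduler changes only which positions are revealed, not the model.

```python
import math
import random
from typing import Callable, List, Tuple

MASK = -1  # hypothetical id marking a still-masked token


def radical_inverse(i: int, base: int) -> float:
    f, r = 1.0, 0.0
    while i > 0:
        f /= base
        r += f * (i % base)
        i //= base
    return r


def halton_order(height: int, width: int) -> List[int]:
    """Flat token indices in 2D Halton (bases 2, 3) order, duplicates skipped."""
    order: List[int] = []
    seen = set()
    i = 0
    while len(order) < height * width:
        idx = int(radical_inverse(i, 3) * height) * width + int(radical_inverse(i, 2) * width)
        if idx not in seen:
            seen.add(idx)
            order.append(idx)
        i += 1
    return order


def tokens_per_step(num_tokens: int, steps: int) -> List[int]:
    """Tokens revealed at each step under an assumed cosine masking schedule."""
    counts, still_masked = [], num_tokens
    for t in range(steps):
        # The ratio is exactly 1.0 at the last step, so nothing stays masked.
        remaining = math.floor(num_tokens * math.cos(math.pi / 2 * ((t + 1) / steps)))
        counts.append(still_masked - remaining)
        still_masked = remaining
    return counts


# A scheduler maps (masked positions, per-position confidence, k) to the k positions to reveal.
Scheduler = Callable[[List[int], List[float], int], List[int]]


def confidence_scheduler(masked: List[int], conf: List[float], k: int) -> List[int]:
    # Greedy confidence baseline: reveal the k masked positions the model is surest about.
    return sorted(masked, key=lambda p: conf[p], reverse=True)[:k]


def make_halton_scheduler(height: int, width: int) -> Scheduler:
    order = halton_order(height, width)  # precomputed once, independent of the model

    def scheduler(masked: List[int], conf: List[float], k: int) -> List[int]:
        # Confidences are ignored: reveal the next k masked positions in Halton order.
        masked_set = set(masked)
        return [p for p in order if p in masked_set][:k]

    return scheduler


def toy_model(tokens: List[int], vocab: int, rng: random.Random) -> Tuple[List[int], List[float]]:
    # Stand-in for the transformer: a random token and confidence for every position.
    return [rng.randrange(vocab) for _ in tokens], [rng.random() for _ in tokens]


def sample(height: int, width: int, steps: int, scheduler: Scheduler,
           vocab: int = 1024, seed: int = 0) -> List[int]:
    rng = random.Random(seed)
    tokens = [MASK] * (height * width)
    for k in tokens_per_step(len(tokens), steps):
        preds, conf = toy_model(tokens, vocab, rng)
        masked = [p for p, tok in enumerate(tokens) if tok == MASK]
        for p in scheduler(masked, conf, k):
            tokens[p] = preds[p]  # revealed tokens stay fixed in later steps
    return tokens


if __name__ == "__main__":
    halton_tokens = sample(16, 16, steps=8, scheduler=make_halton_scheduler(16, 16))
    confidence_tokens = sample(16, 16, steps=8, scheduler=confidence_scheduler)
    print(MASK in halton_tokens, MASK in confidence_tokens)  # False False
```

Only the `scheduler` argument differs between the two calls, which is why no retraining is needed in this setup: the model is queried the same way at every step.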

Figure 2: MaskGIT using our Halton scheduler for text-to-image.

Figure 3: MaskGIT using the Confidence scheduler for text-to-image.

Results: ImageNet and COCO Benchmarks

On benchmark datasets such as ImageNet (256×256) and COCO, the Halton Scheduler outperforms the baseline Confidence scheduler:

- Reduced Fréchet Inception Distance (FID): Lower FID indicates better image realism.

- Improved Precision and Recall: Higher precision reflects the fidelity of generated images, and higher recall reflects their diversity.
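For reference, FID is the Fréchet distance between two Gaussians fitted to Inception-v3 features: (μ_r, Σ_r) are the feature mean and covariance for real images, and (μ_g, Σ_g) those for generated images. Lower values mean the two feature distributions are closer:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```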

BibTeX

@inproceedings{besnier2025iclr,
  title={Halton Scheduler for Masked Generative Image Transformer},
  author={Victor Besnier and Mickael Chen and David Hurych and Eduardo Valle and Matthieu Cord},
  booktitle={International Conference on Learning Representations (ICLR)},
  year={2025}
}