EQ-VAE: Equivariance regularized latent space for improved generative image modeling

Theodoros Kouzelis, Ioannis Kakogeorgiou, Spyros Gidaris, Nikos Komodakis

ICML 2025

Abstract

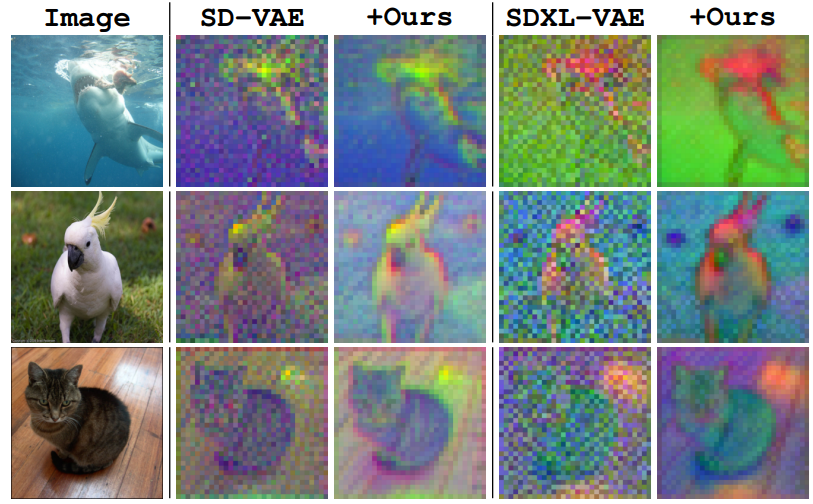

Latent generative models have emerged as a leading approach for high-quality image synthesis. These models rely on an autoencoder to compress images into a latent space, followed by a generative model to learn the latent distribution. We identify that existing autoencoders lack equivariance to semantic-preserving transformations like scaling and rotation, resulting in complex latent spaces that hinder generative performance. To address this, we propose EQ-VAE, a simple regularization approach that enforces equivariance in the latent space, reducing its complexity without degrading reconstruction quality. By fine-tuning pre-trained autoencoders with EQ-VAE, we enhance the performance of several state-of-the-art generative models, including DiT, SiT, REPA, and MaskGIT, achieving a 7× speedup on DiT-XL/2 with only five epochs of SD-VAE fine-tuning. EQ-VAE is compatible with both continuous and discrete autoencoders, thus offering a versatile enhancement for a wide range of latent generative models. Project page and code: https://eq-vae.github.io/.
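To make the notion of equivariance in the abstract concrete, the following is a minimal NumPy sketch, not the EQ-VAE objective itself (the abstract does not spell out the loss). It assumes a 90-degree rotation as the transformation and uses two hypothetical toy encoders, `encode_pool` and `encode_biased`, to show how one might measure whether encoding commutes with the transformation. All function names here are illustrative assumptions.

```python
import numpy as np

def encode_pool(x):
    """Toy encoder (assumption): 2x2 average pooling, (H, W) -> (H/2, W/2)."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def encode_biased(x):
    """Toy encoder that breaks rotation equivariance:
    pooling followed by a gain that depends on the column position."""
    z = encode_pool(x)
    gain = np.linspace(1.0, 2.0, z.shape[1])  # varies along the width axis
    return z * gain

def rotate(a, k=1):
    """Transformation tau: rotate by k * 90 degrees."""
    return np.rot90(a, k)

def equivariance_gap(encode, x, k=1):
    """Mean squared difference between E(tau(x)) and tau(E(x)).
    A gap of zero means the encoder is equivariant to tau on this input."""
    return float(np.mean((encode(rotate(x, k)) - rotate(encode(x), k)) ** 2))

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8))  # stand-in for an image

gap_pool = equivariance_gap(encode_pool, x)      # essentially zero
gap_biased = equivariance_gap(encode_biased, x)  # clearly non-zero
print(gap_pool, gap_biased)
```

A regularizer in the spirit of the abstract would penalize a gap of this kind during autoencoder fine-tuning; the exact form used by EQ-VAE is given in the paper and the project code, not here.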

BibTeX

@inproceedings{Kouzelis2025eqvae,

title={Eq-vae: Equivariance regularized latent space for improved generative image modeling},

author={Kouzelis, Theodoros and Kakogeorgiou, Ioannis and Gidaris, Spyros and Komodakis, Nikos},

booktitle={ICML},

year={2025}

}