Frugal learning

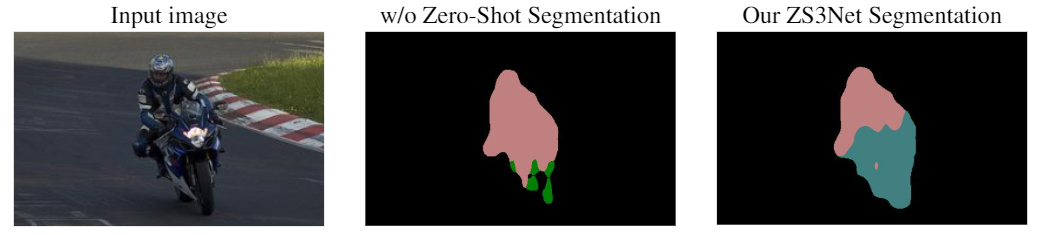

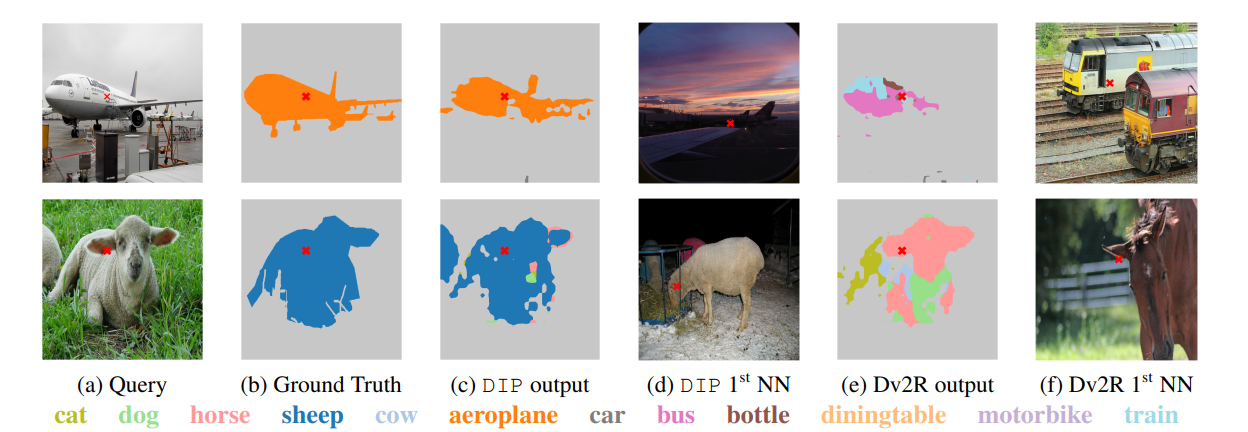

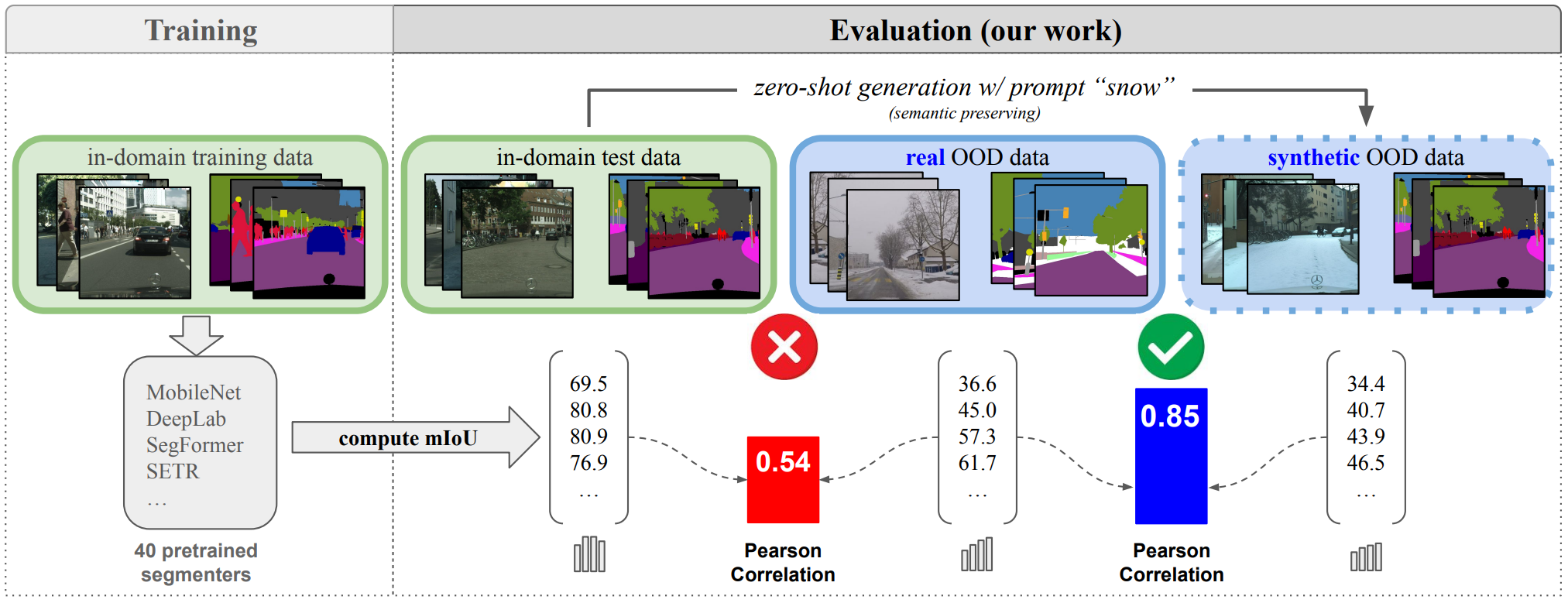

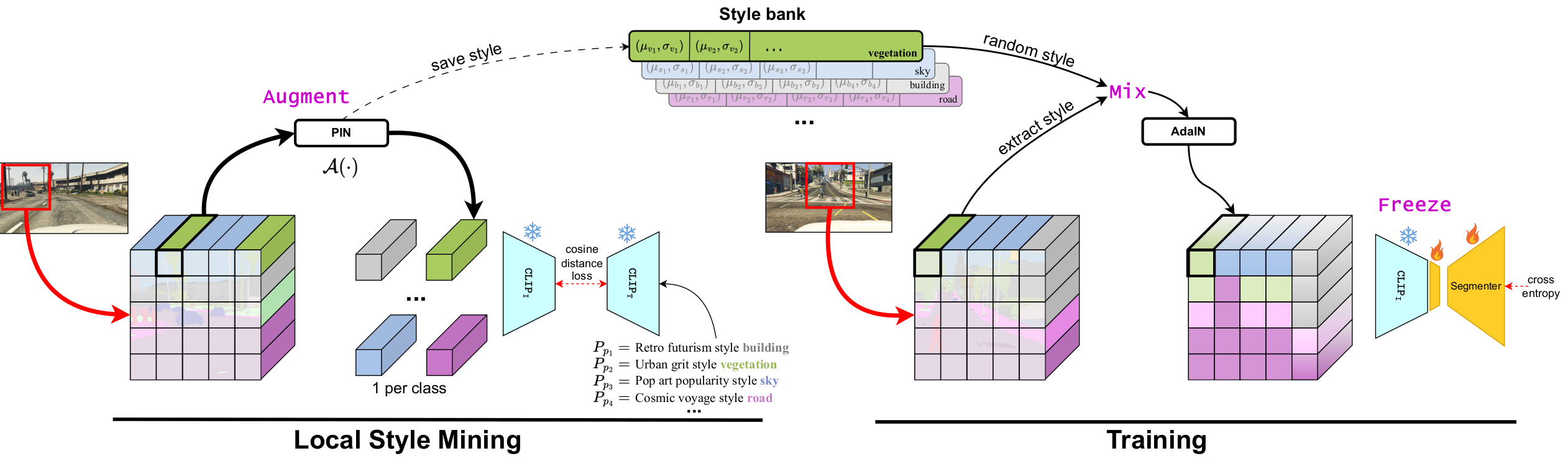

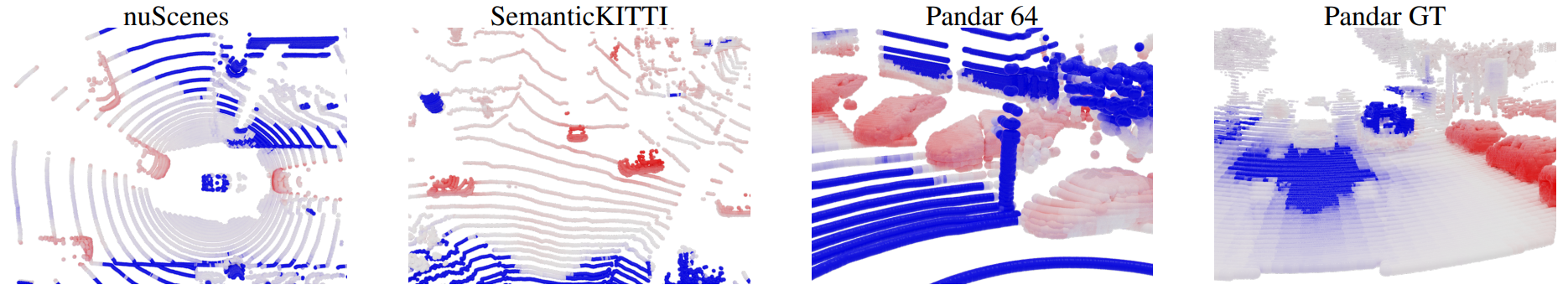

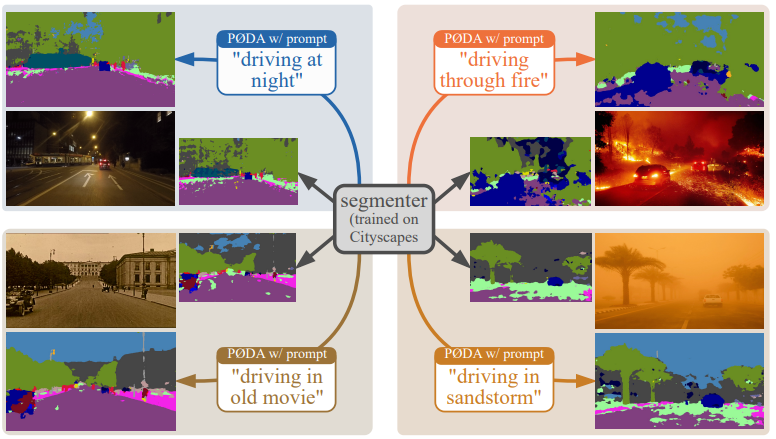

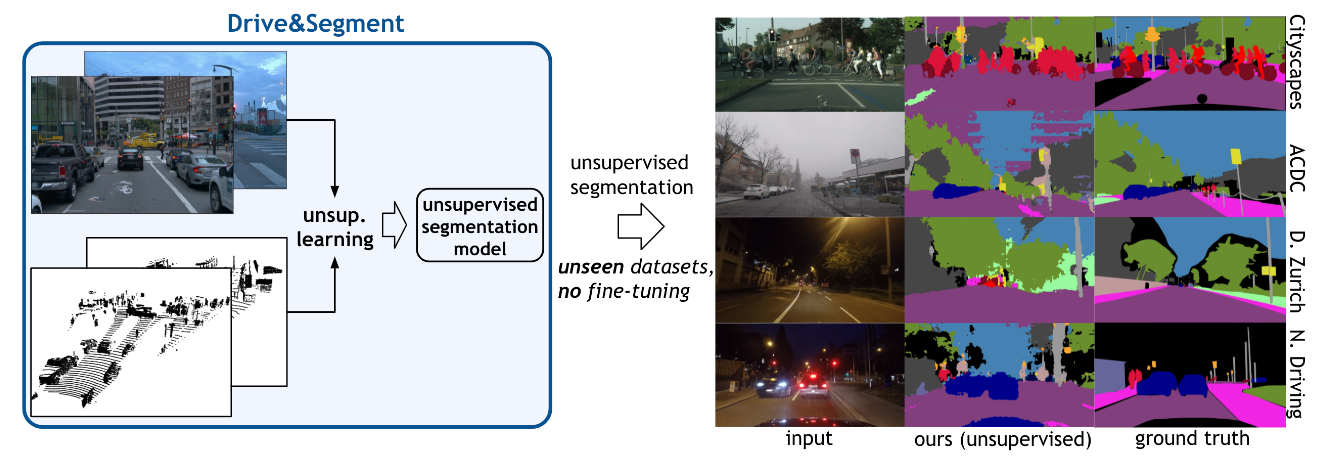

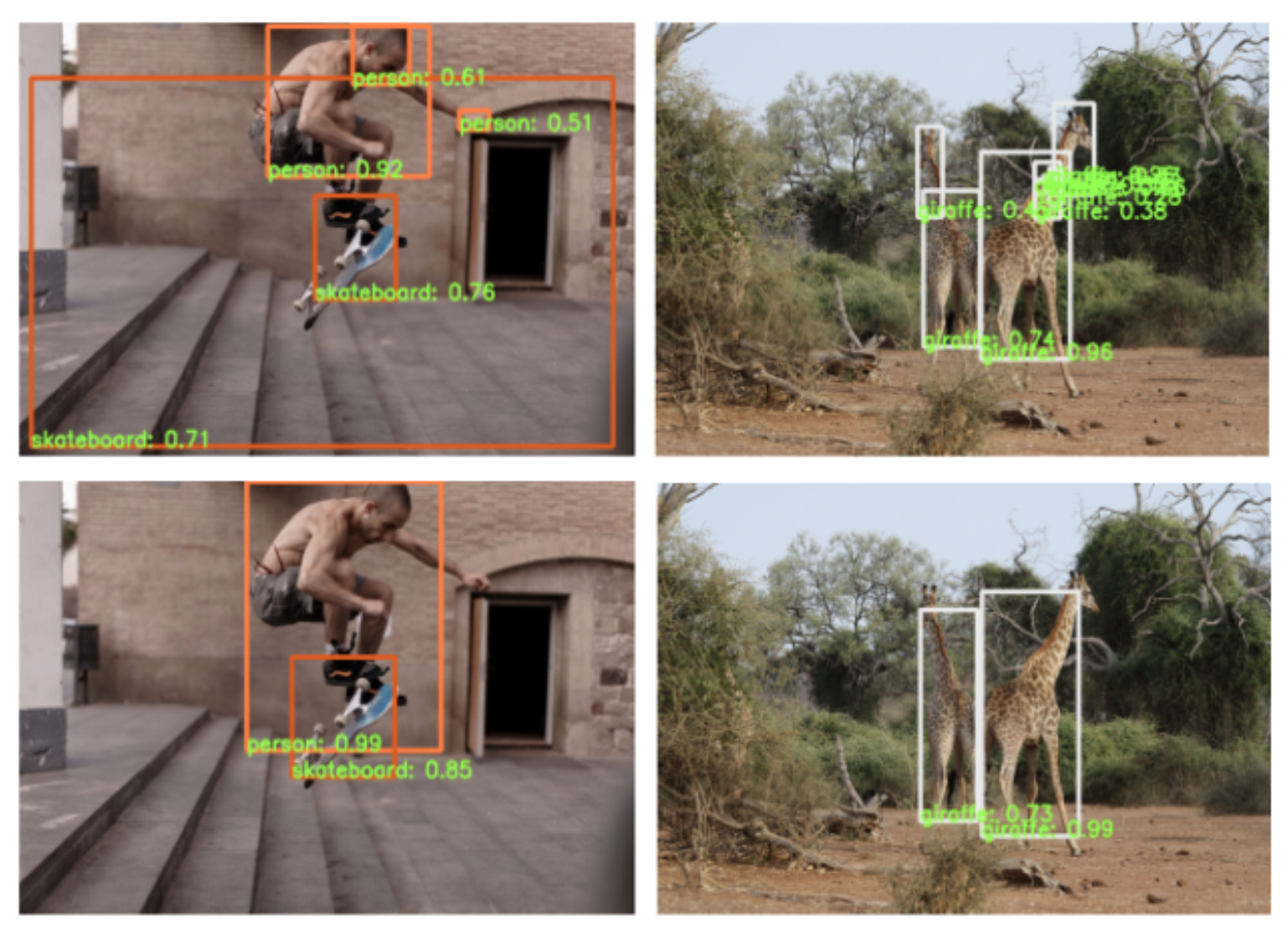

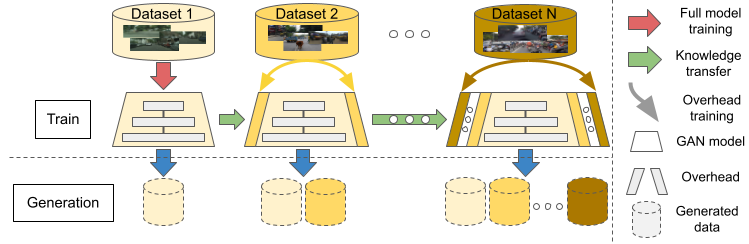

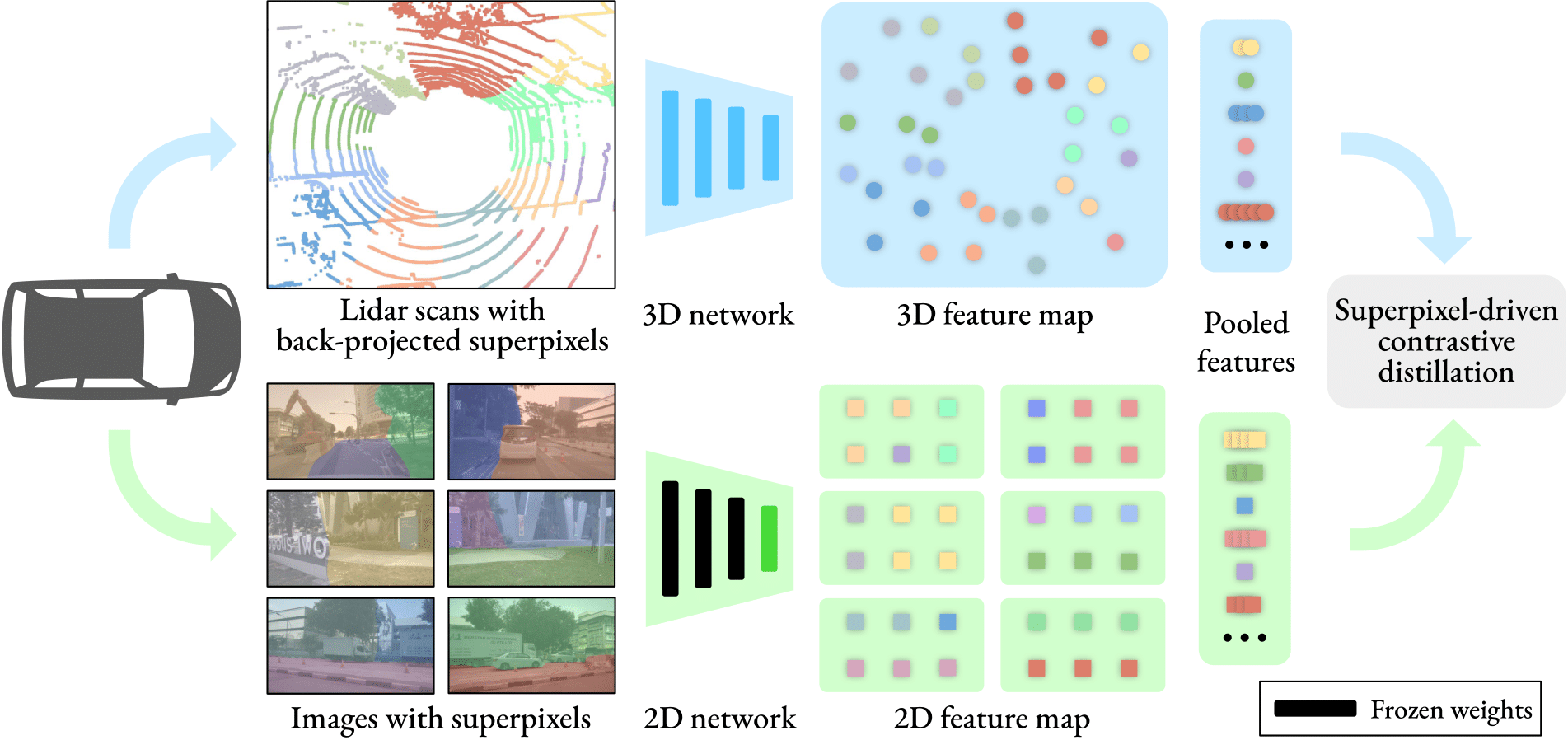

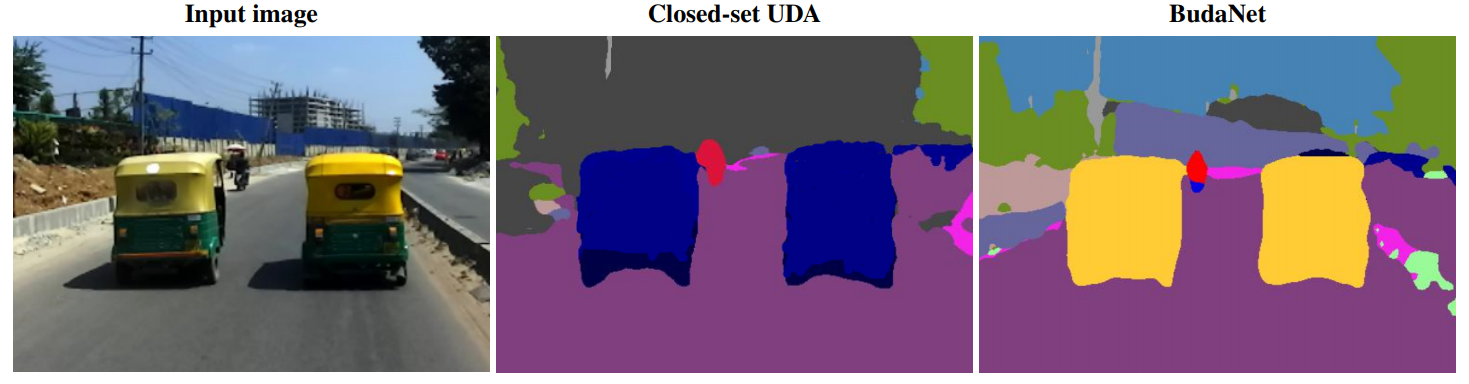

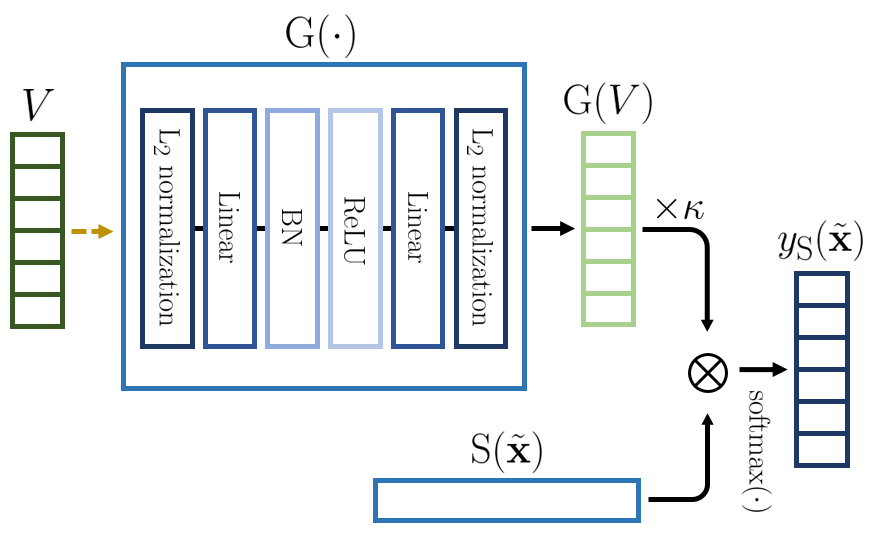

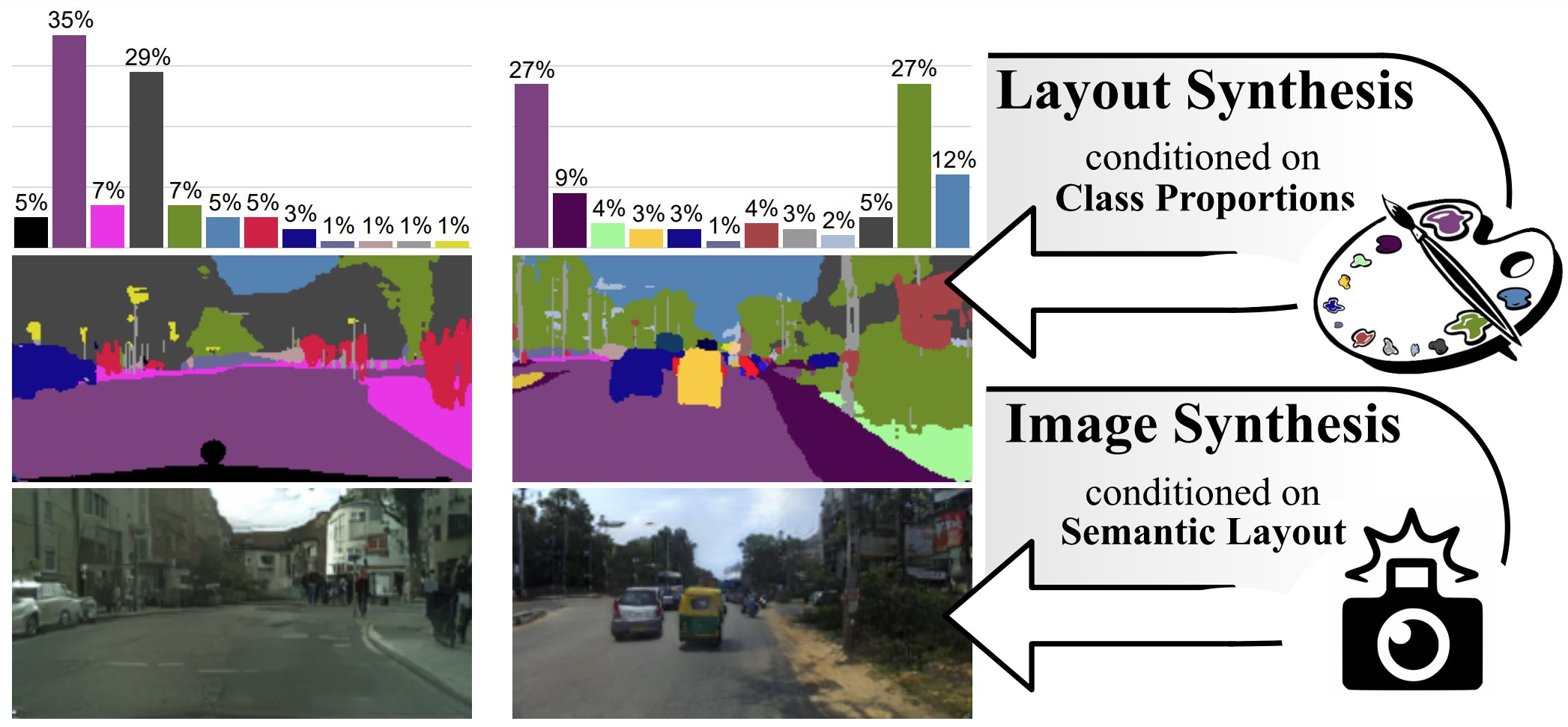

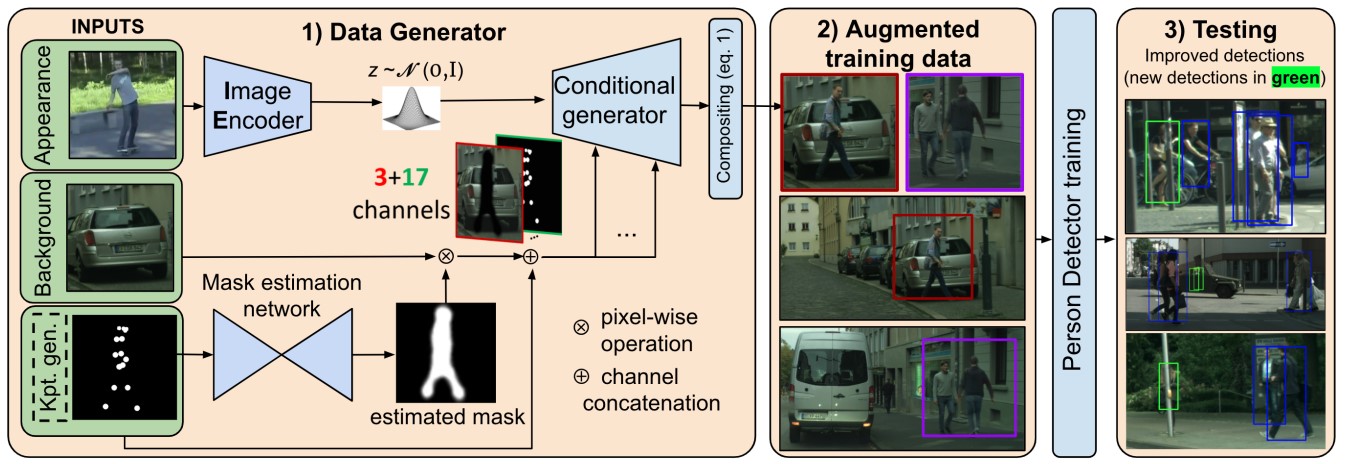

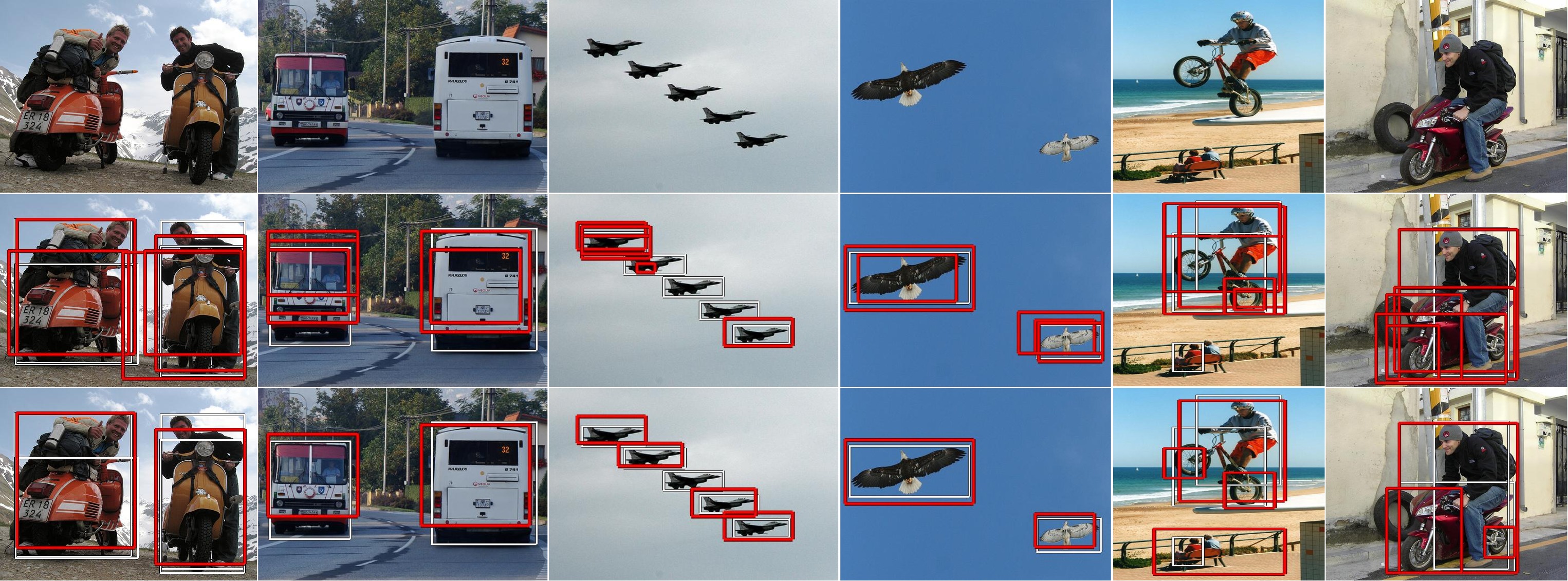

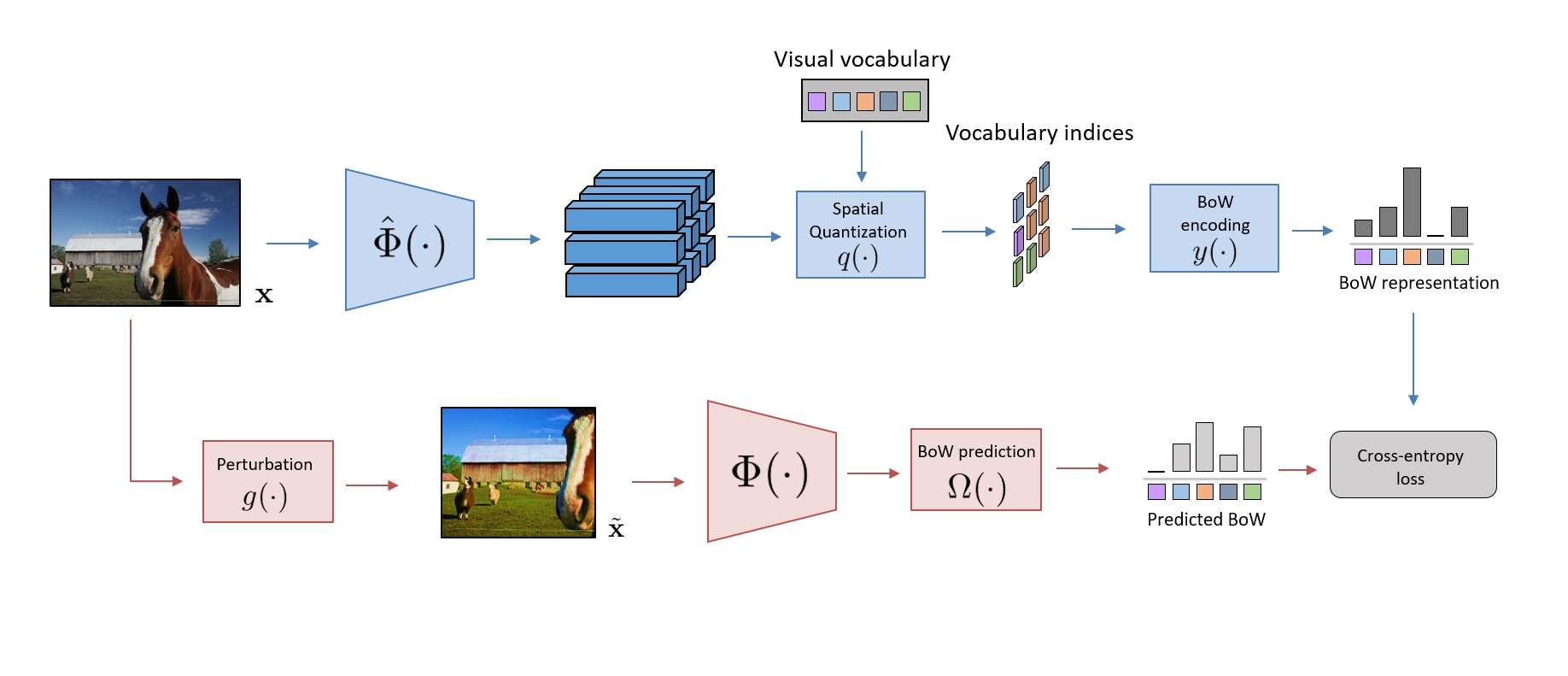

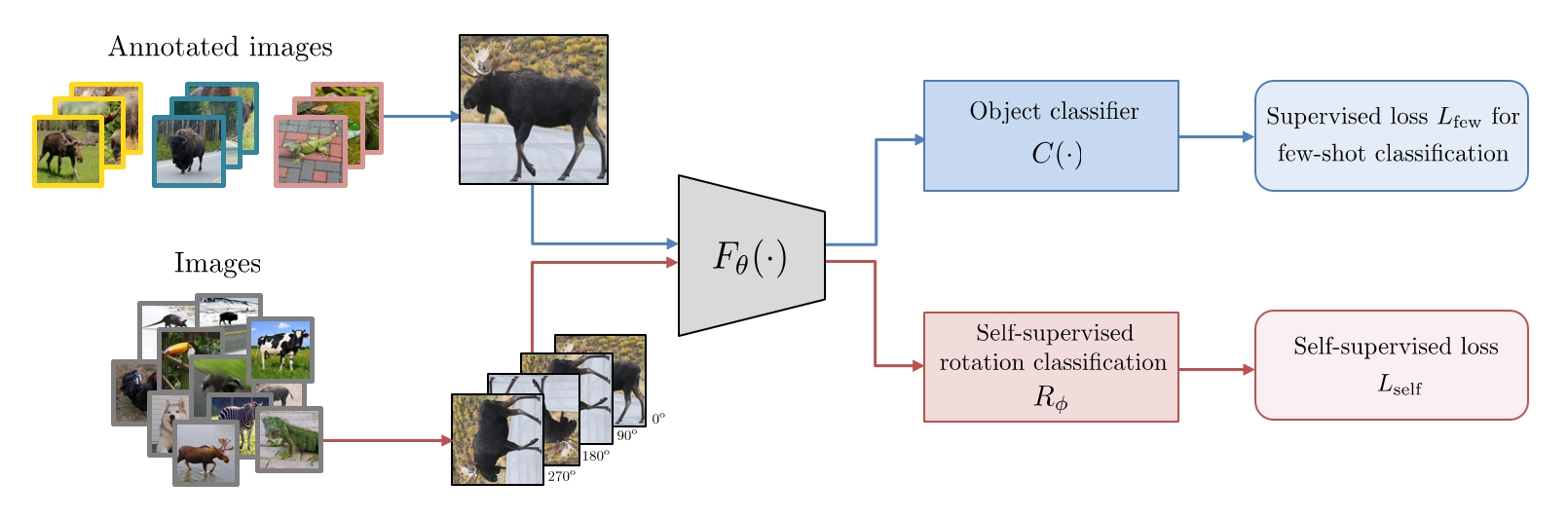

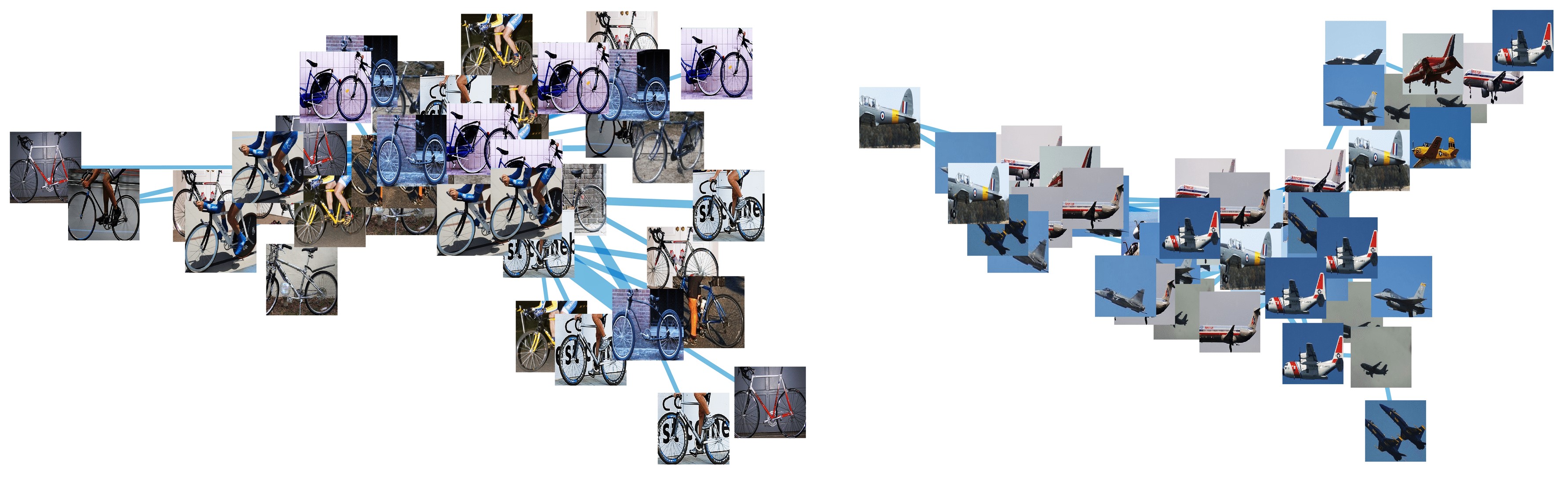

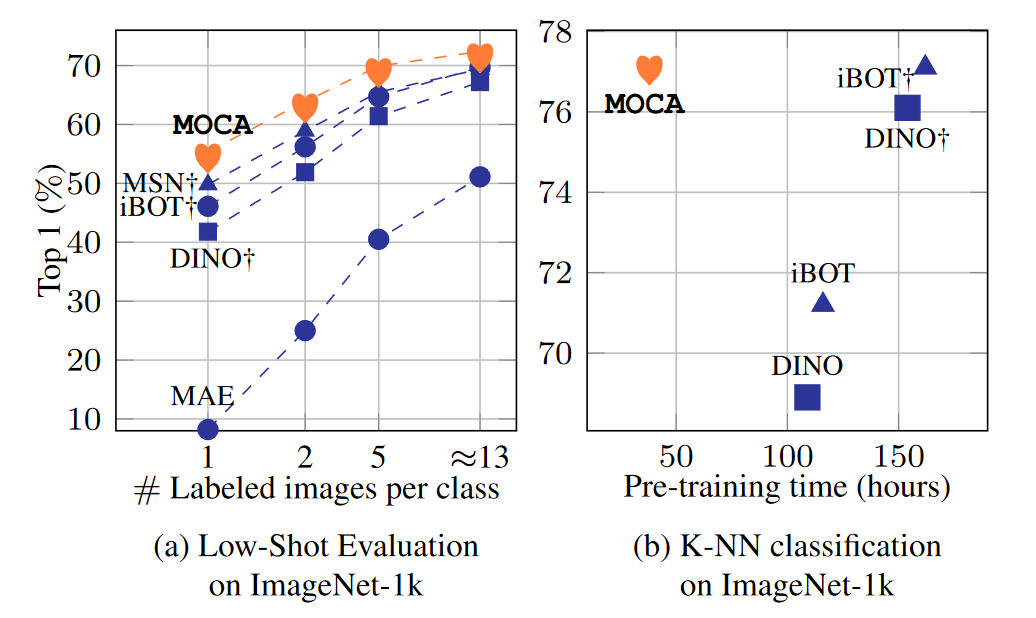

Collecting sufficiently diverse data and annotating it precisely is complex, costly and time-consuming. To dramatically reduce these needs, we explore various alternatives to fully supervised learning, e.g., training that is unsupervised (as rOSD at ECCV’20), self-supervised (as BoWNet at CVPR’20), semi-supervised, active, zero-shot (as ZS3 at NeurIPS’19) or few-shot. We also investigate training with fully synthetic data (in combination with unsupervised domain adaptation) and with GAN-augmented data (as Semantic Palette at CVPR’21).

Selected publications

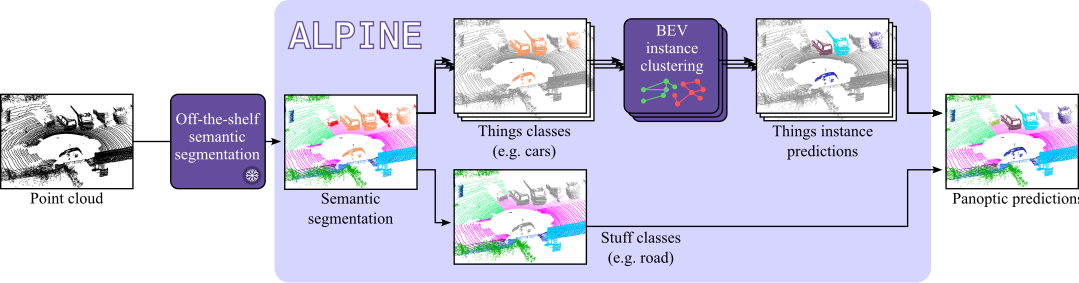

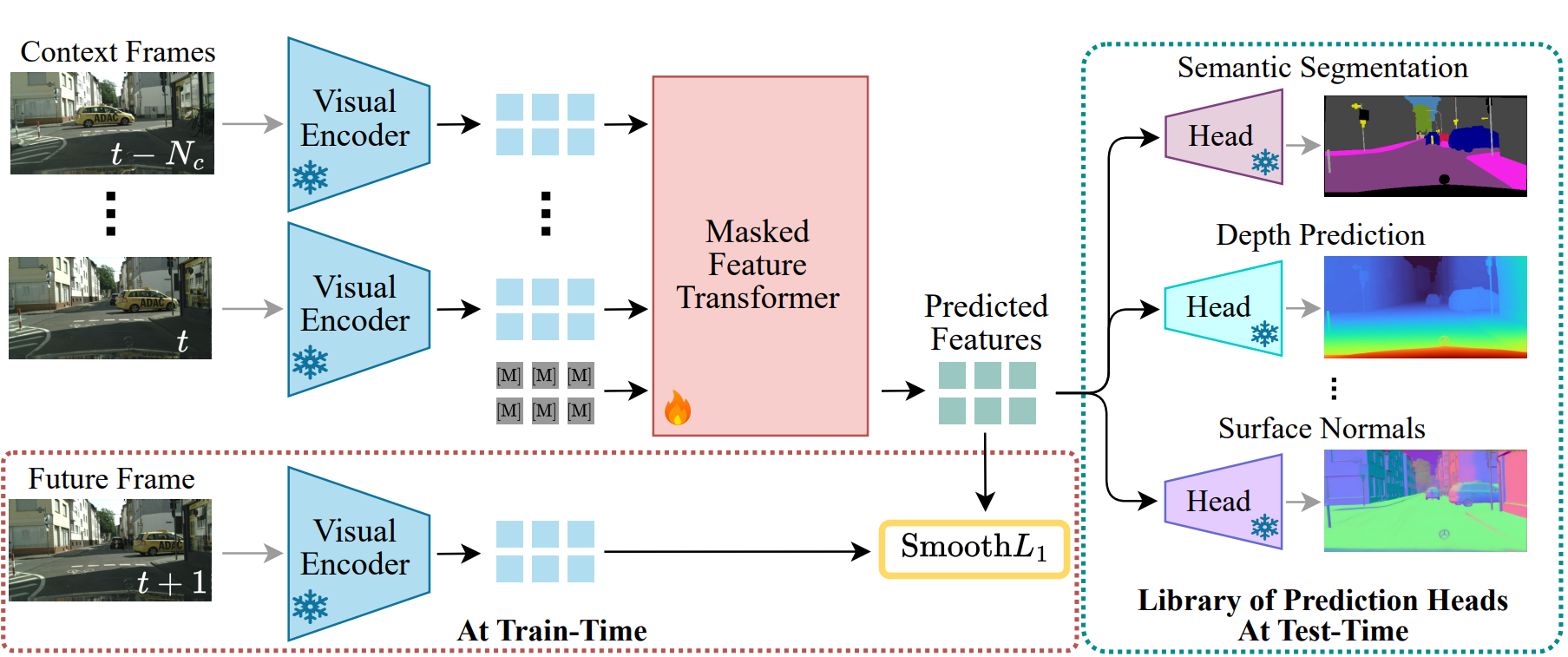

CoRL Workshop, 2025

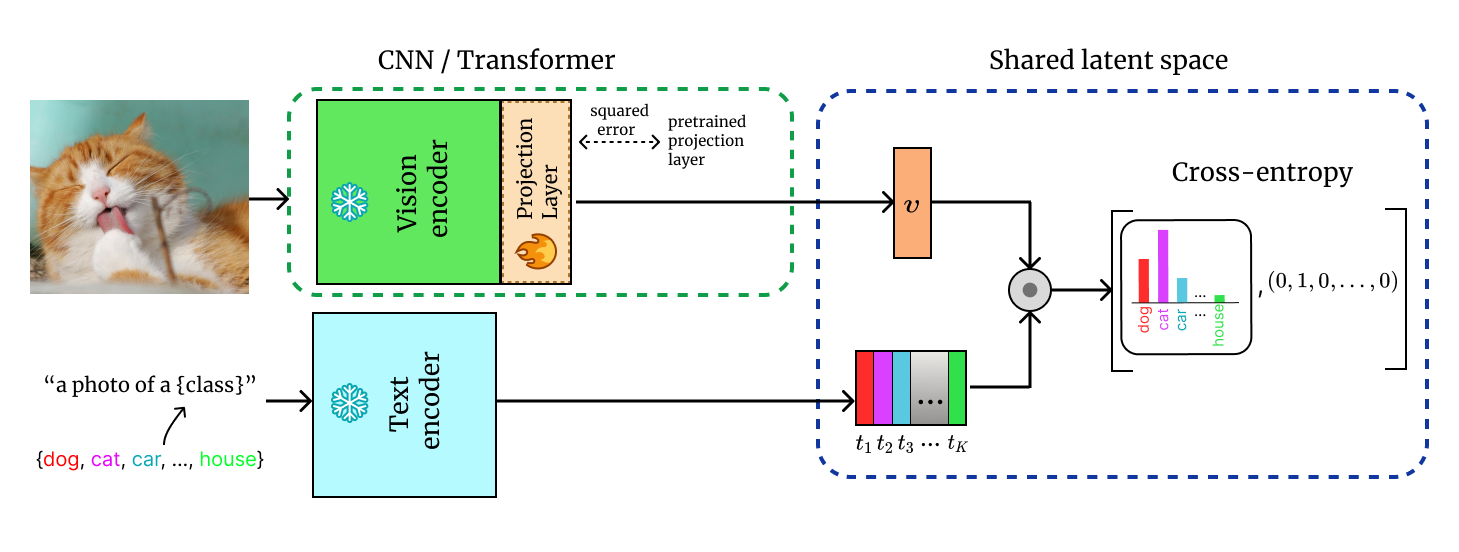

TMLR 2024 and ICLR, 2025
