QuEST: Quantized Embedding Space for Transferring Knowledge

Himalaya Jain Spyros Gidaris Nikos Komodakis Patrick Pérez Matthieu Cord

ECCV 2020

Abstract

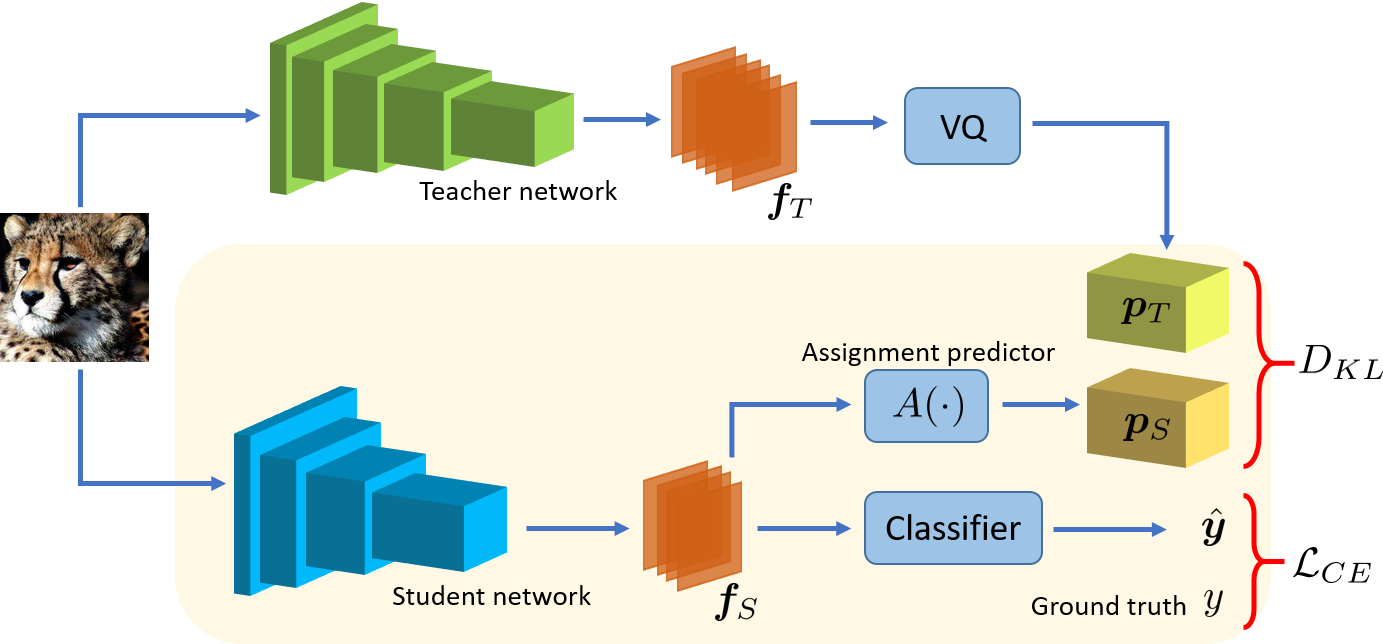

Knowledge distillation refers to the process of training a student network to achieve better accuracy by learning from a pre-trained teacher network. Most existing knowledge distillation methods direct the student to follow the teacher by matching the teacher's outputs, feature maps, or their distributions. In this work, we propose a novel way to achieve this goal: distilling the knowledge through a quantized visual-word space. In our method, the teacher's feature maps are first quantized to represent the main visual concepts (i.e., visual words) they encompass, and the student is then asked to predict those visual-word representations. Despite its simplicity, our approach yields results that improve the state of the art in knowledge distillation for model compression and transfer learning scenarios. To support this, we provide an extensive evaluation across several network architectures and the most commonly used benchmark datasets.
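The two steps described in the abstract (quantizing teacher features into visual words, then having the student predict them) can be illustrated with a small NumPy sketch. This is only an illustration under stated assumptions, not the authors' implementation: the abstract does not say how the codebook is built or which loss is used, so the soft assignment by negative squared distance, the `temperature` parameter, the cross-entropy loss, the random codebook, and the function names `quantize_teacher` and `distillation_loss` are all hypothetical choices.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def quantize_teacher(features, codebook, temperature=1.0):
    """Soft-assign each teacher feature vector to K visual words.

    features: (N, D) teacher feature vectors (e.g. one per spatial location).
    codebook: (K, D) visual words (assumed given; random here for illustration).
    Returns an (N, K) array whose rows sum to 1.
    """
    # Squared Euclidean distance between every feature and every visual word.
    d2 = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    # Closer words receive higher probability (assumed assignment rule).
    return softmax(-d2 / temperature, axis=-1)

def distillation_loss(student_logits, teacher_assign):
    """Cross-entropy between teacher assignments and student predictions."""
    log_p = np.log(softmax(student_logits, axis=-1) + 1e-12)
    return float(-(teacher_assign * log_p).sum(axis=-1).mean())

# Toy data: 32 locations, 16-dim teacher features, 8 visual words.
rng = np.random.default_rng(0)
codebook = rng.normal(size=(8, 16))
teacher_feats = rng.normal(size=(32, 16))
student_logits = rng.normal(size=(32, 8))  # stand-in for the student's output

targets = quantize_teacher(teacher_feats, codebook)
loss = distillation_loss(student_logits, targets)
```

In this sketch the student never sees the teacher's raw features, only the distribution over visual words, which is the part of the idea the abstract emphasizes. Minimizing `loss` over the student's parameters would push its predictions toward the teacher's assignments.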

BibTeX

@article{jain2019quest,
  title={QUEST: Quantized embedding space for transferring knowledge},
  author={Jain, Himalaya and Gidaris, Spyros and Komodakis, Nikos and P{\'e}rez, Patrick and Cord, Matthieu},
  journal={arXiv preprint arXiv:1912.01540},
  year={2019}
}